You can read the post on the La Minute blog for more information about RSS feeds!

English-language geomatics

-

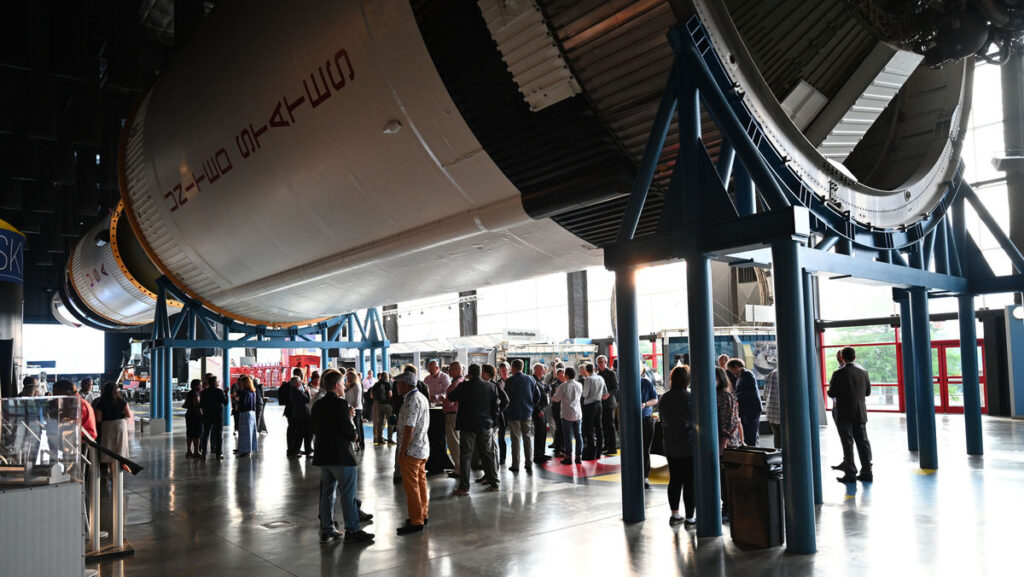

on GeoSolutions: GeoSolutions USA at National States Geographic Information Council (NSGIC) 25-28 SEP

Published: 18 September 2023, 5:51pm CEST


-

on Free and Open Source GIS Ramblings: Data engineering for Mobility Data Science (with Python and DVC)

Published: 16 September 2023, 7:28pm CEST

This summer, I had the honor to — once again — speak at the OpenGeoHub Summer School. This time, I wanted to challenge the students and myself by not just doing MovingPandas but by introducing both MovingPandas and DVC for Mobility Data Science.

I’ve previously written about DVC and how it may be used to track geoprocessing workflows with QGIS & DVC. In my summer school session, we go into detail on how to use DVC to keep track of a MovingPandas movement data analytics workflow.

Here is the recording of the session live stream and you can find the materials at [https:]]

-

on Marco Bernasocchi: OPENGIS.ch and Oslandia: A Strategic Partnership to Advance QField and QFieldCloud

Published: 15 September 2023, 9:00am CEST

We are extremely happy to announce that we have partnered strategically with Oslandia to push the leading #fieldwork app #QField even further.

In the world of fieldwork, accuracy and efficiency are paramount. As GIS specialists, we understand the importance of reliable tools that streamline data collection and analysis processes. That’s why we are thrilled to join forces with Oslandia, a company that shares our passion for open-source development and innovation.

Embracing Open Source Development

At OPENGIS.ch, we have always been committed to the principles of true open-source development. We firmly believe collaboration and shared knowledge drive progress in the GIS community. With Oslandia, we have found a partner who shares our values and cares as much as we do about the QGIS ecosystem.

QGIS, the world’s most popular open-source geographic information system software, has already significantly impacted the GIS industry by providing users with versatile mapping tools and capabilities, and it is the base upon which QField is built. As main contributors to #QGIS, both OPENGIS.ch and Oslandia are dedicated to driving its growth and ensuring its availability to all.

Advancing QField and QFieldCloud Together

QField, with almost 1 million downloads, is the leading app for fieldwork tasks. It empowers professionals in various sectors, such as environmental research, agriculture, urban planning, and disaster management, to efficiently collect data and conduct analyses in the field. With our strategic partnership with Oslandia, we are committed to pushing the boundaries of QField even further.

Our joint efforts will ensure that QField will keep setting trends in the industry, surpassing the evolving needs of GIS specialists and empowering them to excel in their fieldwork tasks.

A Synergy of Expertise

The collaboration between OPENGIS.ch and Oslandia represents a true synergy of expertise. Our combined capabilities will enable us to tackle complex challenges quickly and deliver cutting-edge solutions that address the unique requirements for seamless #fieldwork.

Conclusion

At OPENGIS.ch, we are excited about the opportunities our partnership with Oslandia brings. Together, we will continue championing open-source development, empowering GIS specialists in each sector to perform their fieldwork tasks more effectively and efficiently.

With QField as our flagship app, we are confident that this strategic collaboration will result in even greater advancements, benefiting our target audience of surveying professionals, fieldwork experts, and GIS specialists, as well as casual users who need a user-friendly solution for their projects.

Join us in celebrating this exciting new chapter as we embark on a shared journey towards innovation and excellence in fieldwork applications.

-

on Oslandia: Strategic partnership agreement between Oslandia and OpenGIS.ch on QField

Published: 14 September 2023, 9:30pm CEST

Who are we?

For those unfamiliar with Oslandia, OpenGIS.ch, or even QGIS, let’s refresh your memory:

Oslandia is a French company specializing in open-source Geographic Information Systems (GIS). Since our establishment in 2009, we have been providing consulting, development, and training services in GIS, with renowned expertise. Oslandia is a dedicated open-source player and the largest contributor to the QGIS solution in France.

As for OPENGIS.ch, they are a Swiss company specializing in the development of open-source GIS software. Founded in 2011, OPENGIS.ch is the largest Swiss contributor to QGIS. OPENGIS.ch is the creator of QField, the most widely used open-source mobile GIS solution for geomatics professionals.

OPENGIS.ch also offers QFieldCloud as a SaaS or on-premise solution for collaborative field project management.

Some may still be unfamiliar with #QGIS?

It is a free and open-source Geographic Information System that allows creating, editing, visualizing, analyzing, and publishing geospatial data. QGIS is cross-platform software that can be used on desktops, servers, as a web application, or as a development library.

QGIS is open-source software developed by multiple contributors worldwide. It is an official project of the Open Source Geospatial Foundation (OSGeo) and is supported by the QGIS.org association. See [https:]]

A Partnership?

Today, we are delighted to announce our strategic partnership aimed at strengthening and promoting QField, the mobile application companion of QGIS Desktop.

This partnership between Oslandia and OPENGIS.ch is a significant step for QField and open-source mobile GIS solutions. It will consolidate the platform, providing users worldwide with simplified access to effective tools for collecting, managing, and analyzing geospatial data in the field.

QField, developed by OPENGIS.ch, is an advanced open-source mobile application that enables GIS professionals to work efficiently in the field, using interactive maps, collecting real-time data, and managing complex geospatial projects on Android, iOS, or Windows mobile devices.

QField is cross-platform, based on the QGIS engine, facilitating seamless project sharing between desktop, mobile, and web applications.

Reactions

QFieldCloud ( [https:]] ), the collaborative web platform for QField project management, will also benefit from this partnership and will be enhanced to complement the range of tools within the QGIS platform.

At Oslandia, we are thrilled to collaborate with OPENGIS.ch on QGIS technologies. Oslandia shares with OPENGIS.ch a common vision of open-source software development: strong involvement in development communities, work that respects the ecosystem, highly skilled expertise, and a commitment to industrial-quality, robust, and sustainable software development.

With this partnership, we aim to offer our clients the highest expertise across all software components of the QGIS platform, from data capture to dissemination.

On the OpenGIS.ch side, Marco Bernasocchi adds:

Commitment to open source

The partnership with Oslandia represents a crucial step in our mission to provide leading mobile GIS tools with a genuine OpenSource credo. The complementarity of our skills will accelerate the development of QField and QFieldCloud and meet the growing needs of our users.

Ready for field mapping?

Both companies are committed to continuing to support and improve QField and QFieldCloud as open-source projects, ensuring universal access to this high-quality mobile GIS solution without vendor dependencies.

And now, are you ready for the field?

So, download QField ( [https:]] ), create projects in QGIS, and share them on QFieldCloud!

If you need training, support, maintenance, deployment, or specific feature development on these platforms, don’t hesitate to contact us. You will have access to the best experts available: infos+mobile@oslandia.com.

-

on Making The Data Count, Not Just Counting The Data

Published: 14 September 2023, 6:05pm CEST by Simon Chester

After three years of collaborative development, the release of the first iteration of the IGIF-(M)SDI Maturity Roadmap is a milestone moment in exploiting geospatial data for the inclusive socio-economic development of nations. This Maturity Roadmap – involving the UK Hydrographic Office (UKHO) as lead sponsor, as well as the Open Geospatial Consortium (OGC), the World Bank Group, and the US National Oceanic and Atmospheric Administration (NOAA) – complements existing resources by providing a quantitative toolkit for nations, ministries, departments, agencies, regions, municipalities, and even individual cities or ports, to benchmark their geospatial development against the United Nations’ Integrated Geospatial Information Framework (IGIF) principles. This independent initiative aligns with, and supports, the mission, vision, and goals of the UN-GGIM initiative (Global Geospatial Information Management), who developed the IGIF core principles for all geospatial data considerations.

(Marine) Spatial Data Infrastructure

IGIF provides a vision for developing and strengthening geospatial information management, to assist countries in bridging the geospatial digital divide, secure socio-economic prosperity, and leave no community behind. Marine Spatial Data Infrastructure ((M)SDI) is the International Hydrographic Organization (IHO)’s concept for a future ecosystem of marine data services that can enable the IGIF vision to become a reality. Empowered by OGC standards, the interoperable (M)SDI data services can “make it real through technology.” Bringing these elements together in a straightforward and accessible document, the intent of the Maturity Roadmap is to provide a quantitative “quick start” or “stepping stone” for nations beginning an IGIF-aligned (M)SDI implementation.

With its terrestrial heritage, the World Bank SDI Diagnostic Toolkit is augmented with IHO and OGC contributions to maximise its benefits to the marine community, while remaining aligned with the IGIF principles and, therefore, the UN Sustainable Development Goals (SDGs). As a guiding simplification, the involvement of the World Bank is crucial in providing answers for questions around financing (including business cases), alongside the ‘why’ (UN), ‘what’ (IHO), and ‘how’ (OGC). This aspect of measuring socio-economic return is commonly a key hurdle that prevents real-world progress beyond concepts and ideas. The modular IHO and OGC additions ensure interoperability with the World Bank IGIF methodology, which can lead to the financing of approved (M)SDI development projects. Even as an independent tool, undertaking an (M)SDI assessment provides a clear reference point aligned with international best practice. Without such a starting point, progress towards any (M)SDI end-state will be difficult to govern and manage.

Fully interoperable across all geospatial domains

As part of the OGC’s Federated Marine SDI (FMSDI) initiative, the Maturity Roadmap seeks to promote the inclusive development of an IGIF-Aligned (M)SDI as the marine and maritime community’s contribution to an all-domain National Spatial Data Infrastructure (NSDI) across air, land, sea, space, and cyberspace. Although initially adapted for marine considerations, the Maturity Roadmap is fully interoperable across all geospatial domains and scalable from the national level to regions, municipalities, cities, ports, and government departments or agencies.

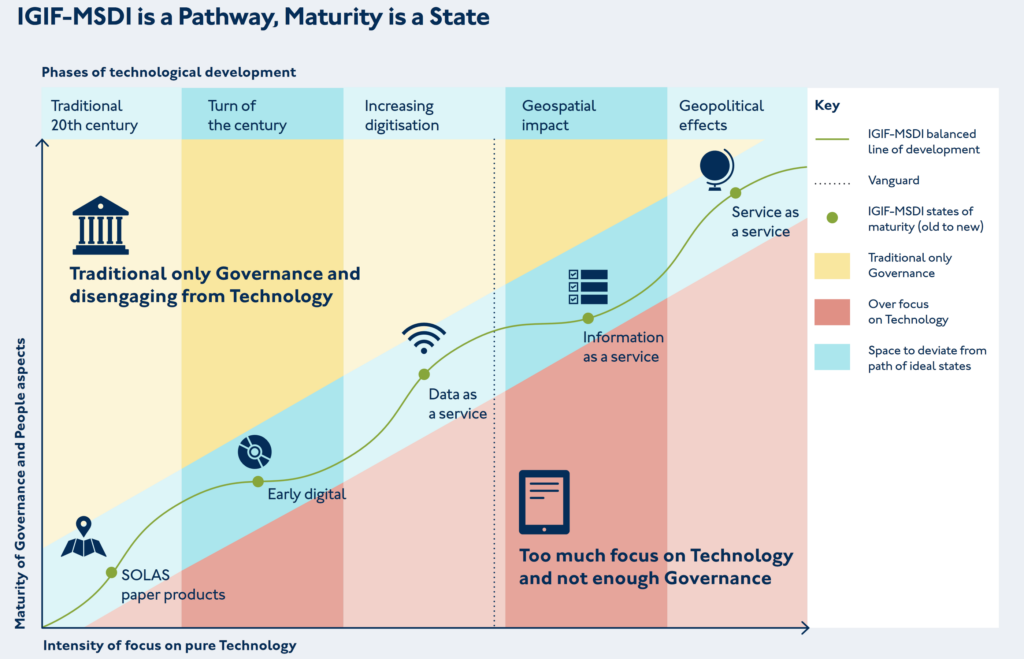

Within the Maturity Roadmap, the concept of an IGIF-(M)SDI Balanced Pathway of Development seeks to promote inclusive geospatial development via two key messages: ‘driving technology, not being driven by technology’ and ‘making the data count, not just counting the data.’

These twin ideas promote the effective governance of technology & standards to meet sovereign national requirements, however expansive or constrained, over the acquisition and possession of the latest technological solutions independent of cost-benefit considerations. OGC contributes to this by providing best practice around the implementation of standards, alongside an active cross-sector global forum to share applied knowledge, cooperate on emerging technologies, and collaborate on standards development. The engagement of the OGC membership at all levels of socio-economic development is vital for realising the cost benefits stemming from the common implementation of technologies across different countries, regions, sectors, and communities, regardless of economic spend.

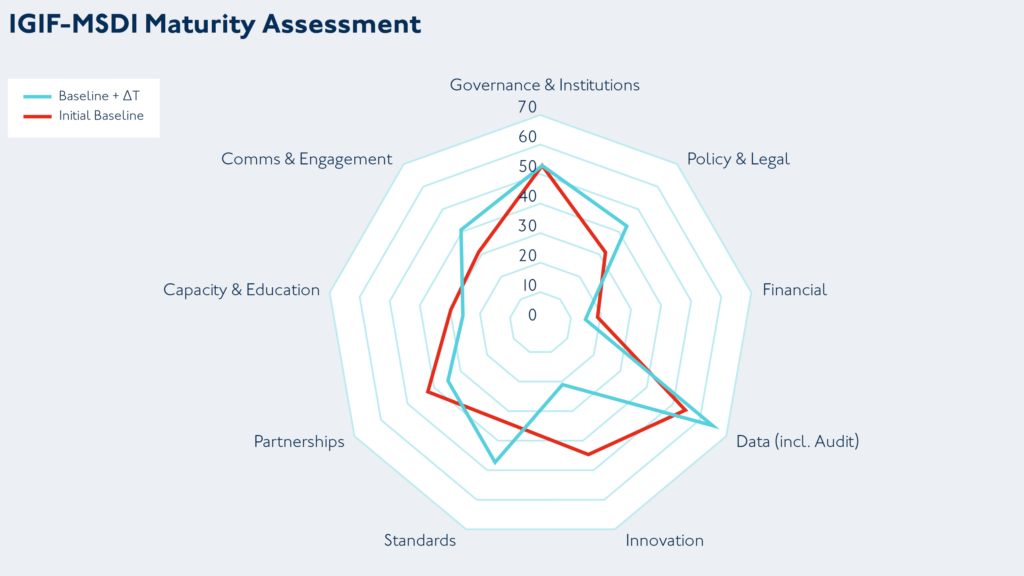

The benefits of benchmarking IGIF-MSDI maturity

When objectively and independently applied, the benchmarking provided by the IGIF-(M)SDI Maturity Roadmap offers a useful planning and comparison baseline for countries undertaking a public geospatial development programme. The example here is a radar chart output across nine assessment categories corresponding to the IGIF Nine Pathways, for an initial baseline – and a subsequent baseline two years later. The underlying data is from real-world assessments taken under World Bank and partner oversight, which was openly published by the Agency for Land Relations and Cadastre of the Republic of Moldova. Such benchmarking exercises can be executed across different scales (from whole nations to cities or ports) and across different domains (from space to cyberspace), sometimes yielding deep insights into potential opportunities around discovered disparities.

Also included with the Maturity Roadmap is a practically orientated appendix that covers best practice for multi-agency governance, where, during a national geospatial development programme, multiple agencies or departments may have to work closely to operationally deliver joint geospatial outcomes. This may be useful in situations where long-standing traditions and conventions could have created a culture that is not conducive to the tight-knit cooperation needed to develop complex IGIF-(M)SDI solutions. Such solutions require the pooling of expertise, resources, and capabilities that one or even two agencies cannot provide alone.

Positive approach to improve ineffective practices is essential to joint IGIF-(M)SDI success

One crucial characteristic for joint IGIF-(M)SDI success is a healthy scepticism and a drive to improve ineffective practices, especially when they have become entrenched as tradition, convention, or “how things have always been done.” I like to counter such perceptions, particularly amongst those that genuinely want to evolve, with the view that “if you always do what you’ve always done, you’ll always get what you’ve always gotten.” Long-term existing practices may have been fine because they met some requirement in a particular environment and once satisfied a need effectively. However, maintaining those same practices now, when society’s expectations and technology have moved forward, can lead to stagnation.

Departments and agencies should foster a keen interest in human behaviour around the use (and misuse) of data or information. Traditional or conventional “hard governance” centres around the assumption that people only make the wrong decisions because they have the wrong information or not enough of it. This traditional view of data governance then coalesces into hard compliance measures and management surveillance, which includes formal audits, regular in-depth reporting, restrictive checklists, and a focus on top-down, non-negotiable command & control. This approach was suited to traditional mass manufacturing of standardised products, but is insufficient by itself for modern data services that are digital-first by design and characterised by near real-time changes.

Soft governance works with the grain of human behaviour to achieve better results by enablement and empowerment, rather than by command and control alone. Principles take precedence over prescription, thus allowing an organisation to leverage the deep insights and frontline experiences of their entire workforce. Shortcut thinking, lack of active engagement and wrong assumptions are some of the key targets for a soft governance approach, which still requires the ultimate backstop of hard governance – but meaningfully targeted and monitored using a risk-based approach. Combining the two approaches can yield outsized and transformative results, which is essential for joint IGIF-(M)SDI success that leaves no community behind.

The IGIF-(M)SDI Maturity Roadmap and related resources are available for free on OGC’s IGIF-(M)SDI Maturity Roadmap webpage.

To best inform future revisions, iterations, and the optimization of the Roadmap, feedback and applied experiences from the geospatial community are sought via OGC Member Meetings, Forums, or directly.

The IGIF-(M)SDI Maturity Roadmap is an independent initiative not endorsed by or officially connected to, but in alignment and support of, the mission, vision, and goals of the United Nations Initiative on Global Geospatial Information Management (UN-GGIM).

Dr. Gerald J Wong is the Data Governance Lead at the United Kingdom Hydrographic Office (UKHO), which is a world-leading centre for hydrography and an executive agency of the Ministry of Defence (MoD). Dr Wong is the Lead Author of the IGIF-(M)SDI Maturity Roadmap, being a specialist in synergising traditional rules-based hard governance with modern and empowering soft governance, which works with the grain of human behaviour to achieve better outcomes.

As an OGC Strategic Member and a sponsor of OGC’s FMSDI initiative, the United Kingdom Hydrographic Office (UKHO) is the UK’s agency for providing hydrographic and marine geospatial data to mariners and maritime organisations across the world. The UKHO is responsible for operational support to the Royal Navy and other defence customers. Supplying defence and the commercial shipping industry, the organisation helps ensure Safety of Life at Sea (SOLAS), protect the marine environment, and support the efficiency of global trade.

Together with other national hydrographic offices and the International Hydrographic Organization (IHO), the UKHO works to develop and raise global standards of hydrography, cartography, and navigation. The UKHO also produces a commercial portfolio of ADMIRALTY Maritime Data Solutions, providing SOLAS-compliant charts, publications, and digital services for ships trading internationally.

The post Making The Data Count, Not Just Counting The Data appeared first on Open Geospatial Consortium.

-

on geomatico: HOT-OSM for the Morocco earthquake

Published: 14 September 2023, 1:18pm CEST

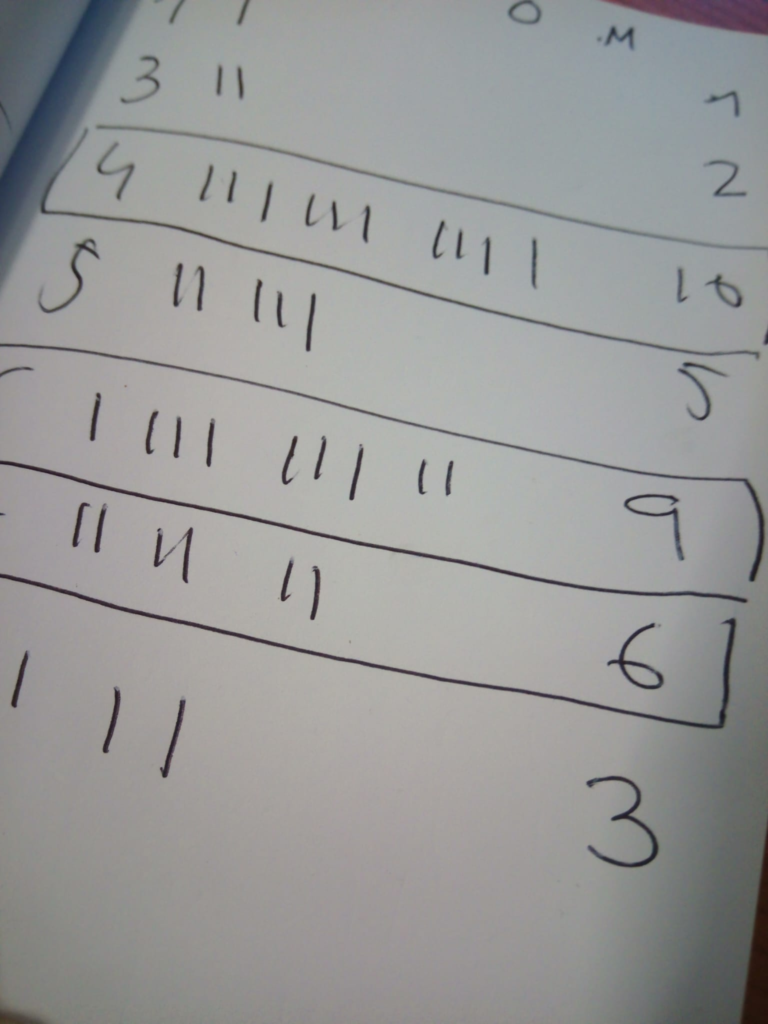

Geomatico dedicates one day a month to contributing to the projects that most catch our attention, whether technologically or socially. We call it imasdé day (I+D, the Spanish for R&D), and it starts with all the staff voting on what we will spend the following hours of work on.

Low-tech voting to decide the I+D day

As could hardly be otherwise, we dedicated this 13 September session to the wonderful HOT-OSM project (Humanitarian OpenStreetMap Team), which had issued an urgent call for help mapping the areas affected by the devastating earthquake in southern Morocco.

First we gave a short beginners' introduction to OpenStreetMap (OSM) for the members of the team with no previous experience of the project. We looked at the different editors, iD and JOSM, and briefly studied the geometric primitives that characterise the project and, of course, the Map Features. Once in HOT, we decided which project we were going to contribute to and got down to work.

Selecting a work area in HOT-OSM

The task was to map the buildings inside the grid cells we selected. The HOT project itself clearly explained how to carry out the task in JOSM. So, using the building creation plugin, we were able to do our bit for the area.

It can be hard to make out the exact outlines of buildings in Morocco

It was very rewarding, both for the task itself and for the way of working: sharing a full day with colleagues on a “simple” job during which we could also chat about other aspects of our day-to-day work. Long live imasdé day and HOT-OSM!

Micho, Marta and Alex working on HOT-OSM while discreetly posing for the photo

-

on Registrations Open for OGC’s October 2023 Open Standards Code Sprint

Published: 12 September 2023, 5:00pm CEST by Simon Chester

The Open Geospatial Consortium (OGC) invites software developers to the October 2023 Open Standards Code Sprint. The hybrid event will be held on Oct. 30 to Nov. 1, with the face-to-face element held at the Geovation Hub in London. A pre-event webinar will be held on October 12th. Participation is free and open to the public. Registration is available on the OGC Open Standards Code Sprint website. Travel support funding is available.

The Code Sprint is sponsored at the Gold Level by OGC Strategic Member Ordnance Survey (OS), and at the Silver Level by OGC Member the European Union Satellite Centre (SatCen). Additional support comes from OGC Strategic Member the US National Geospatial Intelligence Agency (NGA) and OGC Principal Member the UK Defence Science and Technology Laboratory (Dstl). Additional sponsorship opportunities remain available for organizations to support the geospatial development community while promoting their products or services. Visit the Event Sponsorship page for more information.

The code sprint will be a collaborative and inclusive event to support the development of Open Standards and supporting applications. All OGC Standards are in scope for this code sprint.

OGC code sprints experiment with emerging ideas in the context of geospatial standards, help improve interoperability of existing standards by experimenting with new extensions or profiles, and are used for building proofs-of-concept to support standards development activities and enhancement of software products.

Non-coding activities such as testing, working on documentation, or reporting issues are also welcome during the code sprint. The Code Sprint also provides the opportunity, via its mentor stream, to onboard developers new to OGC Standards.

A one-hour pre-event webinar will take place on October 12 at 14:00 BST (UTC+1). The webinar will outline the scope of work for the code sprint and provide other useful information for participants. Any participants interested in Imagery formats will be invited to stay on after the webinar for a technical overview of the formats in focus for the code sprint. As with the virtual portion of the sprint, the pre-event webinar will take place on OGC’s Discord server.

The Code Sprint will prototype and advance implementations of multiple approved and candidate OGC Standards, for example:

…and more.

In the context of OGC Standards, the Code Sprint will also experiment with the ability to access or provide imagery conforming to NGA’s emerging GEOINT Imagery Media for ISR (GIMI) Profile through implementations of OGC API Standards. The GIMI Profile is based on the ISO/IEC 23008-12 High Efficiency Image File Format (HEIF) and the ISO/IEC 14496-12 ISO Base Media File Format (ISOBMFF) standards. This part of the Code Sprint will also prototype creation of GIMI files from still imagery encoded in JPEG 2000 (with GMLJP2), and motion imagery encoded in H.264 and H.265 formats.

Some Travel Support Funding is available for selected participants. Anyone interested in receiving travel support funding should indicate their interest on the registration form. Requests for funding will need to be received before October 2. Applicants will be notified within 2 weeks of applying whether their request for travel support has been approved.

The code sprint begins at 09:00 UTC on October 30 with an onboarding session, and ends at 17:00 UTC on November 1. To learn more about future and previous OGC code sprints, visit the OGC Developer Events Wiki or join OGC’s Discord server.

Registration for in-person participation closes at 17:00 UTC on October 25. Registration for remote participation will remain open throughout the code sprint. Registration and further information are available on the Code Sprint website.

The post Registrations Open for OGC’s October 2023 Open Standards Code Sprint appeared first on Open Geospatial Consortium.

-

on BostonGIS: Why People care about PostGIS and Postgres and FOSS4GNA

Published: 10 September 2023, 7:22am CEST

Paul Ramsey and I recently had a Fireside chat with Path to Cituscon. Check out the podcast Why People care about PostGIS and Postgres. There were a surprising number of funny moments and very insightful stuff.

It was a great fireside chat but without the fireplace. We covered the birth and progression of PostGIS for the past 20 years and the trajectory with PostgreSQL. We also learned of Paul's plans to revolutionize PostGIS which was new to me. We covered many other side-line topics, like QGIS whose birth was inspired by PostGIS. We covered pgRouting and mobilitydb which are two other PostgreSQL extension projects that extend PostGIS.

We also managed to fall into the Large Language Model conversation, on which Paul and I are on different sides of the fence.

Continue reading "Why People care about PostGIS and Postgres and FOSS4GNA"

-

on Camptocamp: The QGIS Hub Plugin

Published: 8 September 2023, 4:00am CEST

Attachment: [download]

Your direct access to the shared resources of the QGIS community.

-

on GRASS GIS: NSF Grant Awarded to Enhance GRASS GIS Ecosystem

Published: 6 September 2023, 12:12pm CEST

We, a team of researchers from four U.S. universities, are excited to announce a significant new project to support and expand the global GRASS GIS community. We have been awarded a prestigious grant (award 2303651) from the U.S. National Science Foundation (NSF) to bolster and broaden the software ecosystem of GRASS GIS for a world that increasingly relies on location-based information. The two main goals of the project are: 1) to facilitate the adoption of GRASS GIS as a key geoprocessing engine by a growing number of researchers and geospatial practitioners in academia, governments, and industry; and 2) to expand and diversify the developer community, especially through supporting next-generation scientists to gain expertise to maintain and innovate GRASS software.

-

on GeoSolutions: GeoSolutions to Sponsor FOSS4G North America – 23-25 OCT – Baltimore, MD

Published: 5 September 2023, 8:19pm CEST


-

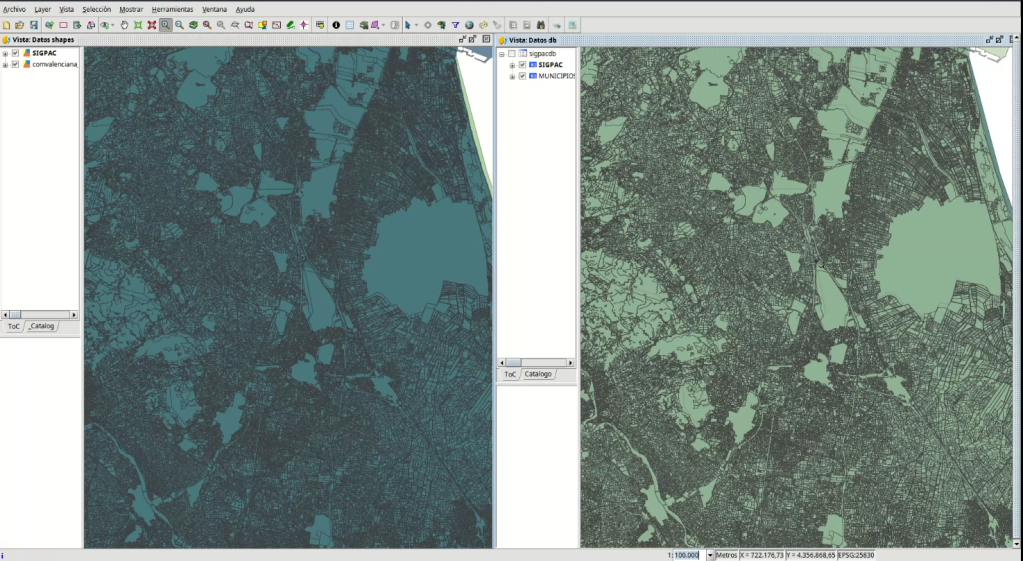

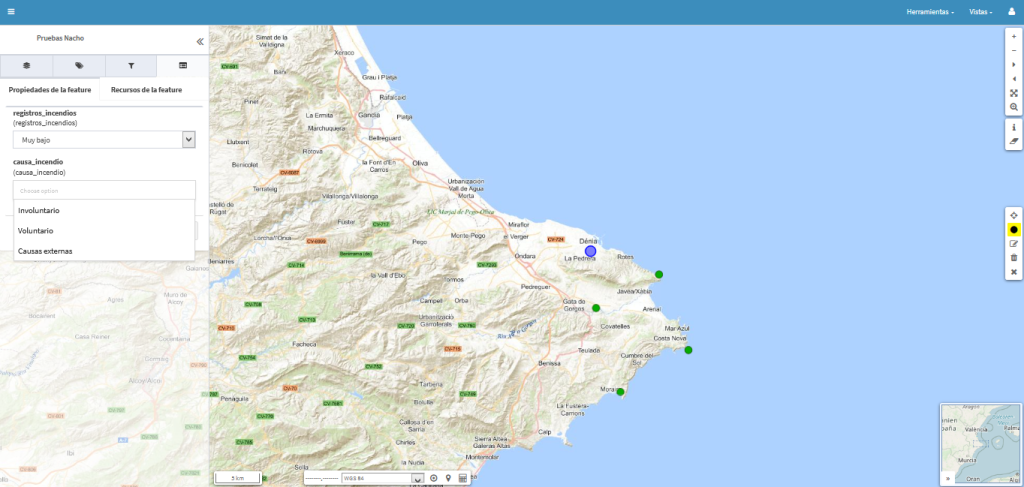

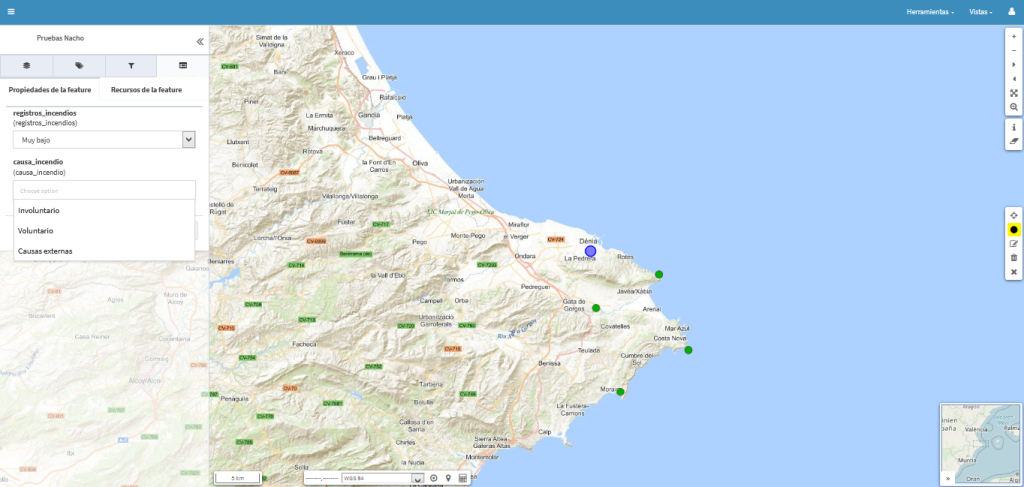

on Marco Bernasocchi: Analyzing and visualizing large-scale fire events using QGIS processing with ST-DBSCAN

Published: 5 September 2023, 12:04pm CEST

A while back, one of our ninjas added a new algorithm in QGIS’ processing toolbox named ST-DBSCAN Clustering, short for spatiotemporal density-based spatial clustering of applications with noise. The algorithm groups features that fall within user-defined maximum distance and time duration values of one another.

This post will walk you through one practical use for the algorithm: large-scale fire event analysis and visualization through remote-sensed fire detection. More specifically, we will be looking into one of the larger fire events which occurred in Canada’s Quebec province in June 2023.

Fetching and preparing FIRMS data


NASA’s Fire Information for Resource Management System (FIRMS) offers a fantastic worldwide archive of all fires detected through three spaceborne sources: MODIS C6.1 with a resolution of roughly 1 kilometer as well as VIIRS S-NPP and VIIRS NOAA-20 with a resolution of 375 meters. Each detected fire is represented by a point that sits at the center of the source’s resolution grid.

Each source will cover the whole world several times per day. Since detection is impacted by atmospheric conditions, a given pass by one source might not be able to register an ongoing fire event. It’s therefore advisable to rely on more than one source.

To look into our fire event, we have chosen the two fire detection sources with higher resolution – VIIRS S-NPP and VIIRS NOAA-20 – covering the whole month of June 2023. The datasets were downloaded from FIRMS’ archive download page.

After downloading the two separate datasets, we combined them into one merged geopackage dataset using QGIS processing toolbox’s Merge Vector Layers algorithm. The merged dataset will be used to conduct the clustering analysis.

In addition, we will use QGIS’s field calculator to create a new Date & Time field named ACQ_DATE_TIME using the following expression:

to_datetime("ACQ_DATE" || "ACQ_TIME", 'yyyy-MM-ddhhmm')

This will allow us to calculate precise time differences between two dates.

Modeling and running the analysis

The large-scale fire event analysis requires running two distinct algorithms:

- a spatiotemporal clustering of points to regroup fires into a series of events confined in space and time; and

- an aggregation of the points within the identified clusters to provide additional information such as the beginning and end date of regrouped events.

This can be achieved by using the QGIS modeler to run the ST-DBSCAN Clustering algorithm first, and then the Aggregate algorithm on its output.

The above-pictured model outputs two datasets. The first dataset contains single-part points of detected fires with attributes from the original VIIRS products as well as a pair of new attributes: CLUSTER_ID provides a unique cluster identifier for each point, and CLUSTER_SIZE gives the number of points forming each unique cluster. The second dataset contains multi-part point clusters representing fire events with four attributes: CLUSTER_ID and CLUSTER_SIZE, which were discussed above, as well as DATE_START and DATE_END to identify the beginning and end time of a fire event.
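To make the aggregation step concrete, here is a minimal Python sketch of how clustered points could be rolled up into one record per event. It is not the QGIS Aggregate algorithm itself. The sample rows and the function name `aggregate_clusters` are hypothetical, and only the attribute names come from the post.

```python
from collections import defaultdict
from datetime import datetime

# Hypothetical clustered points: (CLUSTER_ID, ACQ_DATE_TIME)
points = [
    (109, datetime(2023, 6, 2, 1, 42)),
    (109, datetime(2023, 6, 4, 18, 30)),
    (109, datetime(2023, 6, 3, 7, 5)),
    (1285, datetime(2023, 6, 20, 12, 0)),
]


def aggregate_clusters(rows):
    """Group points by cluster id and summarize each group."""
    groups = defaultdict(list)
    for cluster_id, acquired in rows:
        groups[cluster_id].append(acquired)
    return {
        cid: {
            "CLUSTER_SIZE": len(times),   # number of points in the cluster
            "DATE_START": min(times),     # earliest detection
            "DATE_END": max(times),       # latest detection
        }
        for cid, times in groups.items()
    }


events = aggregate_clusters(points)
for cid, summary in sorted(events.items()):
    print(cid, summary)
```

The earliest detection in each group is also the natural candidate when looking for where an event began.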

In our specific example, we will run the model using the merged dataset we created above as the “fire points layer” and select ACQ_DATE_TIME as the “date field”. The outputs will be saved as separate layers within a geopackage file.

Note that the maximum distance (0.025 degrees) and duration (72 hours) settings to form clusters have been set in the model itself. This can be tweaked by editing the model.
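The role of the two thresholds can be illustrated with a small, simplified spatio-temporal DBSCAN in plain Python. This is a sketch under stated assumptions, not the QGIS implementation: distances are planar and in the same units as the coordinates (degrees here, mirroring the 0.025 setting), the `min_size` parameter and the sample detections are hypothetical, and the neighbor search is a naive scan that would be far too slow for a real month of detections.

```python
from datetime import datetime, timedelta
from math import hypot


def st_dbscan(points, eps_space, eps_time, min_size=2):
    """Label points with cluster ids; -1 marks noise.

    points: list of (x, y, datetime). Two points are neighbors when they
    are within eps_space of each other AND within eps_time of each other.
    """
    def neighbors(i):
        xi, yi, ti = points[i]
        return [
            j for j, (xj, yj, tj) in enumerate(points)
            if hypot(xi - xj, yi - yj) <= eps_space and abs(ti - tj) <= eps_time
        ]

    labels = [None] * len(points)  # None = not visited yet
    cluster_id = 0
    for i in range(len(points)):
        if labels[i] is not None:
            continue
        seeds = neighbors(i)  # includes the point itself
        if len(seeds) < min_size:
            labels[i] = -1  # noise for now; may later become a border point
            continue
        cluster_id += 1
        labels[i] = cluster_id
        queue = [j for j in seeds if j != i]
        while queue:
            j = queue.pop()
            if labels[j] == -1:
                labels[j] = cluster_id  # noise reached from a core point
            if labels[j] is not None:
                continue
            labels[j] = cluster_id
            reachable = neighbors(j)
            if len(reachable) >= min_size:  # core point: keep expanding
                queue.extend(reachable)
    return labels


t0 = datetime(2023, 6, 1)
detections = [
    (-75.34, 53.10, t0),
    (-75.33, 53.11, t0 + timedelta(hours=12)),  # near in space and time
    (-75.32, 53.12, t0 + timedelta(hours=60)),  # chained via the second point
    (-75.33, 53.11, t0 + timedelta(days=10)),   # same place, but too late
    (-70.00, 50.00, t0),                        # far away
]
labels = st_dbscan(detections, eps_space=0.025, eps_time=timedelta(hours=72))
print(labels)  # → [1, 1, 1, -1, -1]
```

The third detection is more than 0.025 degrees from the first, yet it joins the same cluster because the second detection links them. A detection at the same location ten days later stays outside the event.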

Visualizing a specific fire event progression on a map

Once the model has provided its outputs, we are ready to start visualizing a fire event on a map. In this practical example, we will focus on detected fires around latitude 53.0960 and longitude -75.3395.

Using the multi-part points dataset, we can identify two clustered events (CLUSTER_ID 109 and 1285) within the month of June 2023. To help map canvas refresh responsiveness, we can filter both of our output layers to only show features with those two cluster identifiers using the following SQL syntax: CLUSTER_ID IN (109, 1285).

To show the progression of the fire event over time, we can use a data-defined property to graduate the marker fill of the single-part points dataset along a color ramp. To do so, open the layer’s styling panel, select the simple marker symbol layer, click on the data-defined property button next to the fill color and pick the Assistant menu item.

In the assistant panel, set the source expression to the following:

day(age(to_date('2023-07-01'),"ACQ_DATE_TIME"))

This will give us the number of days between a given point and an arbitrary reference date (2023-07-01 here). Set the values range from 0 to 30 and pick a color ramp of your choice.
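As a rough Python analogue of that expression, the sketch below computes the age in days against the same reference date and scales it to the 0 to 30 range. The function names are made up for illustration. The sketch keeps whole days only, which may differ from how QGIS treats partial days.

```python
from datetime import date, datetime

REFERENCE = date(2023, 7, 1)  # arbitrary reference date from the post


def days_before_reference(acquired: datetime, reference: date = REFERENCE) -> int:
    """Whole days between a detection and midnight of the reference date."""
    midnight = datetime.combine(reference, datetime.min.time())
    return (midnight - acquired).days


def ramp_position(days: int, low: int = 0, high: int = 30) -> float:
    """Clamp days to [low, high] and scale to 0..1 along a color ramp."""
    clamped = max(low, min(high, days))
    return (clamped - low) / (high - low)


age = days_before_reference(datetime(2023, 6, 2, 1, 42))
print(age, ramp_position(age))
```

Early detections get large values and late detections small ones, so the two ends of the ramp correspond to the start and end of the month.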

When applying this style, the resulting map will provide a visual representation of the spread of the fire event over time.

Having identified a fire event via clustering easily allows for identification of the “starting point” of a fire by searching for the earliest fire detected amongst the thousands of points. This crucial bit of analysis can help better understand the cause of the fire, and alongside the color grading of neighboring points, its directionality as it expanded over time.

Analyzing a fire event through histogram

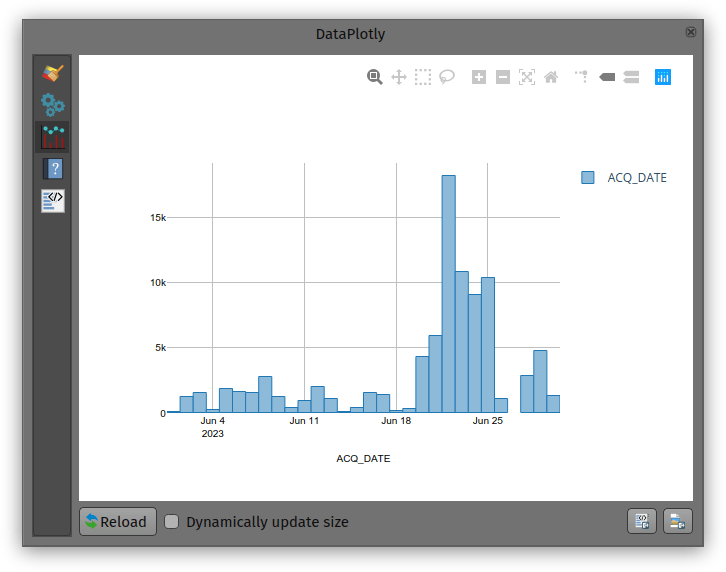

Through QGIS’ DataPlotly plugin, it is possible to create a histogram of fire events. After installing the plugin, we can open the DataPlotly panel and configure our histogram.

Set the plot type to histogram and pick the model’s single-part points dataset as the layer to gather data from. Make sure that the layer has been filtered to only show a single fire event. Then, set the X field to the following layer attribute: “ACQ_DATE”.

You can then hit the Create Plot button, go grab a coffee, and enjoy the resulting histogram which will appear after a minute or so.

While not perfect, a histogram can quickly provide a good sense of a fire event’s “peak” over a period of time.
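The counting behind such a histogram is simple enough to sketch in a few lines of Python. The ACQ_DATE values below are invented for illustration, and the text bars stand in for the DataPlotly chart.

```python
from collections import Counter

# Hypothetical ACQ_DATE values for one filtered fire event
acq_dates = [
    "2023-06-02", "2023-06-02",
    "2023-06-03", "2023-06-03", "2023-06-03",
    "2023-06-05",
]

counts = Counter(acq_dates)  # detections per day
peak_day, peak_count = max(counts.items(), key=lambda item: item[1])

for day in sorted(counts):
    print(f"{day} {'#' * counts[day]}")
print("peak:", peak_day, peak_count)
```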

-

sur QGIS Blog: Plugin Update August 2023

Publié: 3 September 2023, 3:08pm CEST

In August, 13 new plugins were published in the QGIS plugin repository.

Here’s the quick overview in reverse chronological order. If any of the names or short descriptions piques your interest, you can find the direct link to the plugin page in the table below the screenshot.

- Cesium ion: Browse and add datasets from Cesium ion

- Land Use Analyzer: A plugin for Land Use spatial analysis tools

- GNAVS: GNSS Navigate and Save

- Soar – the new atlas: Import or export maps via the Soar platform

- FotovolCAT: Spatial analysis automation for solar power station siting in Catalonia

- QGISSPARQL-Layer2Triple: Layer2Triple

- osm2topomap: A plugin intended to intermediate the process of using OSM data for official (authoritative) Topographic Maps, or rather, databases

- Plugin Exporter: A QGIS plugin for exporting plugins

- GetBaseLine: GetBaseLine

- Fast Field Filler: The plugin was created to quickly fill in the fields in the attribute table.

- Radiation ToolBox Plugin: Plugin for loading data from Safecast and other radiation monitoring devices

- LocationIQ Geocoding and Maps: LocationIQ integration to add geocoding and map tiles to QGIS

- Proxy Handler: Adds prefix proxy addresses to connections

-

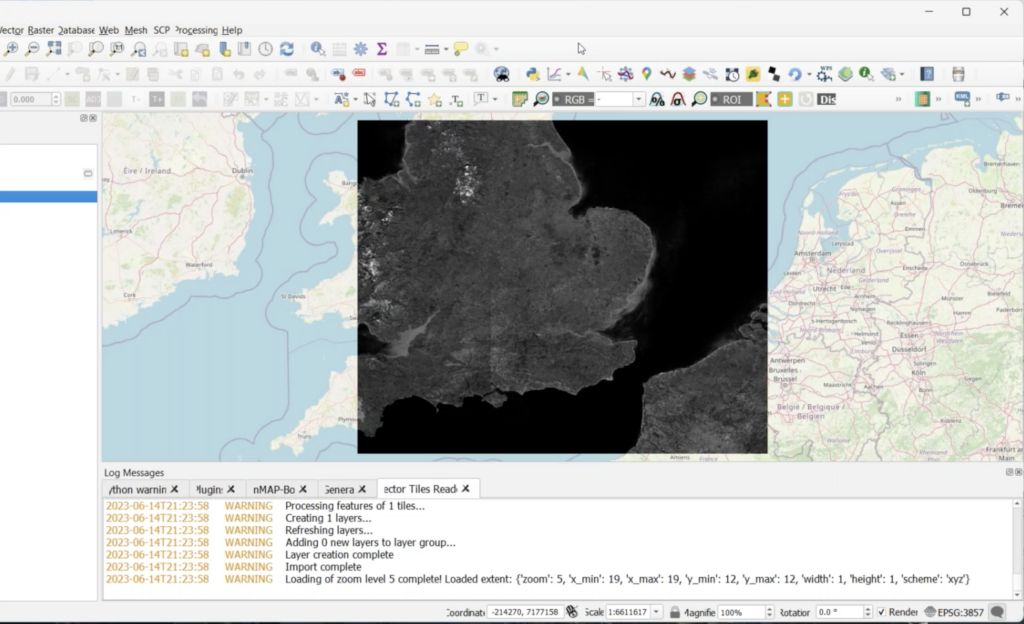

sur From GIS to Remote Sensing: Road to the Semi-Automatic Classification Plugin v.8: Landsat and Sentinel-2 images download and preprocessing, classification

Publié: 2 September 2023, 1:10pm CEST

This is the second post describing the main new features of the new version 8 (codename "Infinity") of the Semi-Automatic Classification Plugin (SCP) for QGIS, which will be released in October 2023. The new version is based on Remotior Sensus, a new Python processing framework.

The tool "Download products" has been updated to download Landsat and Sentinel-2 images from different services. In particular, through the NASA Earthdata service (registration required at [https:]] ) it will be possible to download the Harmonized Landsat and Sentinel-2 data, a surface reflectance data product (generated with Landsat 8, Landsat 9, and Sentinel-2 data) with observations every two to three days at 30m spatial resolution (for more information read here). This is therefore a great source for frequent and homogeneous monitoring. Moreover, Copernicus Sentinel-2 images will be searched through the Copernicus Data Space Ecosystem API, while the images are downloaded through the Google Cloud service that provides the free dataset as part of the Google Public Cloud Data program. Other download services that were available in SCP 7 (e.g. Sentinel-1, ASTER images) will be available with future updates.

-

sur GeoSolutions: Partnership with Ecoplan (Bosnia & Herzegovina)

Publié: 1 September 2023, 7:45pm CEST

-

sur Free and Open Source GIS Ramblings: Comparing geographic data analysis in R and Python

Publié: 1 September 2023, 12:14pm CEST

Today, I want to point out a blog post over at

written together with my fellow “Geocomputation with Python” co-authors Robin Lovelace, Michael Dorman, and Jakub Nowosad.

In this blog post, we talk about our experience teaching R and Python for geocomputation. The context of this blog post is the OpenGeoHub Summer School 2023 which has courses on R, Python and Julia. The focus of the blog post is on geographic vector data, meaning points, lines, polygons (and their ‘multi’ variants) and the attributes associated with them. We plan to cover raster data in a future post.

-

sur GeoTools Team: GeoTools 28.5 Released

Publié: 31 August 2023, 2:01pm CEST

The GeoTools team are pleased to announce the release of the latest stable version of GeoTools 28.5:

- geotools-28.5-bin.zip

- geotools-28.5-doc.zip

- geotools-28.5-userguide.zip

- geotools-28.5-project.zip

This release is also available from the OSGeo Maven Repository and is made in conjunction with GeoServer 2.22.5. We are grateful to Peter Smythe (AfriGIS) for carrying out the

-

sur gvSIG Batoví: edición 2023 del concurso: Proyectos de Geografía con estudiantes y gvSIG Batoví

Publié: 30 August 2023, 10:32pm CEST

Having successfully completed the training stage of the initiative Geoalfabetización mediante la utilización de Tecnologías de la Información Geográfica (geo-literacy through the use of Geographic Information Technologies), we are launching the call to take part in the 2023 edition of the contest Proyectos de Geografía con estudiantes y gvSIG Batoví (Geography projects with students and gvSIG Batoví). The call and the rules can be accessed here.

Every year we have something new, and this year is no exception:

- we have the support of the Pan American Institute of Geography and History (the initiative was selected by the 2023 Technical Assistance Program, Project PAT No. GEOG-04/2023 Geoalfabetización mediante la utilización de Tecnologías de la Información Geográfica)

- this year the Dirección General de Educación Técnico Profesional (UTU) is also taking part

- certification is obtained by taking part in both the course and the contest

- we have the collaboration of the Universidad Politécnica de Madrid in organizing the initiative

We thank all the institutions whose support makes this proposal possible.

-

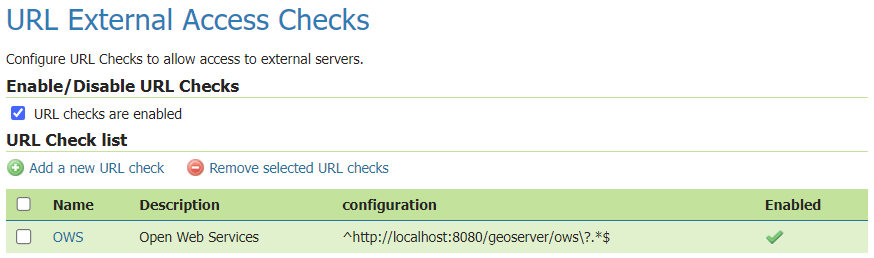

sur GeoServer Team: GeoServer 2.22.5 Release

Publié: 30 August 2023, 4:00am CEST

GeoServer 2.22.5 release is now available with downloads (bin, war, windows), along with docs and extensions.

This is a maintenance release of GeoServer providing existing installations with minor updates and bug fixes. GeoServer 2.22.5 is made in conjunction with GeoTools 28.5, and GeoWebCache 1.22.5.

Thanks to Peter Smythe (AfriGIS) for making this release.

2023-09-05 update: GeoServer 2.22.5 has been recompiled and uploaded to SourceForge. The initial upload was accidentally compiled with Java 11 and would not function in a Java 8 environment.

Thanks to Jody Garnett (GeoCat) for this update, and Steve Ikeoka for testing in a Java 8 environment.

Java 8 End-of-life

This GeoServer 2.22.5 maintenance release is the final scheduled release of the GeoServer 2.22.x series, and thus the last providing Java 8 support.

All future releases will require a minimum of Java 11.

Security Considerations

This release addresses security vulnerabilities and is considered an essential upgrade for production systems.

This blog post will be updated in due course with CVE numbers following our coordinated vulnerability disclosure policy.

See project security policy for more information on how security vulnerabilities are managed.

Release notes

Improvement:

- GEOS-10856 geoserver monitor plugin - scaling troubles

- GEOS-11048 Improve URL checking

- GEOS-11081 Add option to disable GetFeatureInfo transforming raster layers

- GEOS-11099 ElasticSearch DataStore Documentation Update for RESPONSE_BUFFER_LIMIT

- GEOS-11100 Add opacity parameter to the layer definitions in WPS-Download download maps

Bug:

- GEOS-10874 Log4J: Windows binary zip release file with log4j-1.2.14.jar

- GEOS-10875 Disk Quota JDBC password shown in plaintext

- GEOS-10901 GetCapabilities lists the same style multiple times when used as both a default and alternate style

- GEOS-10903 WMS filtering with Filter 2.0 fails

- GEOS-10932 csw-iso: should only add ‘xsi:nil = false’ attribute

- GEOS-11025 projection parameter takes no effect on MongoDB Schemaless features WFS requests

- GEOS-11035 Enabling OSEO from Workspace Edit Page Results in an NPE

- GEOS-11054 NullPointerException creating layer with REST, along with attribute list

- GEOS-11055 Multiple layers against the same ES document type conflict with each other

- GEOS-11069 Layer configuration page doesn’t work for broken SQL views

Task:

- GEOS-11062 Upgrade [httpclient] from 4.5.13 to 4.5.14

- GEOS-11063 Upgrade [httpcore] from 4.4.10 to 4.4.16

- GEOS-11067 Upgrade wiremock to 2.35.0

- GEOS-11092 acme-ldap.jar is compiled with Java 8

For the complete list see 2.22.5 release notes.

About GeoServer 2.22 Series

Additional information on GeoServer 2.22 series:

- GeoServer 2.22 User Manual

- Update Instructions

- Metadata extension

- CSW ISO Metadata extension

- State of GeoServer (FOSS4G Presentation)

- GeoServer Beginner Workshop (FOSS4G Workshop)

- Welcome page (User Guide)

Release notes: ( 2.22.5 | 2.22.4 | 2.22.3 | 2.22.2 | 2.22.1 | 2.22.0 | 2.22-RC | 2.22-M0 )

-

sur gvSIG Team: Curso-Concurso TIGs y gvSIG Batoví. 6ª edición

Publié: 29 August 2023, 10:56am CEST

We are passing on the news of the launch of the 6th edition of the Curso-Concurso TIGs y gvSIG Batoví (course and contest on geographic information technologies and gvSIG Batoví). This year brings an important novelty: Colombia is joining this Uruguayan initiative.

It has also been announced that more than one hundred teachers from Uruguay and Colombia have already signed up for the TIGs y gvSIG Batoví course. Congratulations!

-

sur Sean Gillies: Bear training week ~5 recap

Publié: 28 August 2023, 5:54am CEST

The third week of my season's big training block was my biggest yet from the climbing perspective. My runs averaged 220 feet of elevation gain (D+) per mile, which is what the Bear 100 course will demand of me in 5 weeks. Here are last week's numbers.

20 hours, 37 minutes

76.2 miles

16,775 feet D+

Extrapolating that to 100 miles, naively, predicts a 28 hour finish. That would be amazing! There's no way I'm going to finish in 28 hours. I think I'll be able to keep up this week's average pace for 60 miles and then will slow down dramatically after that. We'll see!
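The naive extrapolation is just the week's average pace stretched to 100 miles. A quick check in Python, using only the three weekly totals listed above, lands a little above 27 hours, in the same ballpark as the 28 hours quoted, and reproduces the 220 feet of D+ per mile.

```python
hours = 20 + 37 / 60   # 20 hours, 37 minutes
miles = 76.2
gain_ft = 16_775

pace_hours_per_mile = hours / miles
naive_100_mile_hours = pace_hours_per_mile * 100
gain_per_mile = gain_ft / miles

print(f"{naive_100_mile_hours:.1f} hours for 100 miles")
print(f"{gain_per_mile:.0f} feet of D+ per mile")
```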

Next week I'm giving myself a break from long hilly runs. I'll do daily runs of not much more than an hour, yoga, some strength and conditioning. And I'll be working on my race day planning: gear, drop bags, fueling, etc.

-

sur GRASS GIS: New Docker images for GRASS GIS

Publié: 27 August 2023, 12:42pm CEST

Moving GRASS GIS Docker Images to the OSGeo Repository

In the field of open source software development and deployment, the accessibility and maintenance of resources is of paramount importance. To this end, there has been a major change in the repository structure for the GRASS GIS Docker images. In the past years, these Docker images have been maintained and hosted under the mundialis organisation’s repository. The company mundialis has played a crucial role in providing and maintaining these images, ensuring their availability and stability for the wider GIS community.

-

sur From GIS to Remote Sensing: Road to the Semi-Automatic Classification Plugin v.8: Band sets, Band calc and Scripts

Publié: 26 August 2023, 2:10am CEST

As already announced, the new version 8 (codename "Infinity") of the Semi-Automatic Classification Plugin (SCP) for QGIS will be released in October 2023. This post describes a few of the main new features of the SCP, which is still under development and is based on a completely new Python processing framework, Remotior Sensus.

The Main interface will include all the tools, as in SCP version 7. The Band set tab will allow managing more than one Band set; the interface has been restyled with a table on the left to manage the list of Band sets, and a larger table on the right to display the bands of the active Band set.

-

sur KAN T&IT Blog: XVII Jornadas IDERA: Nuestra Experiencia

Publié: 25 August 2023, 9:56pm CEST

Every year since 2007, the Spatial Data Infrastructure of the Argentine Republic (IDERA) has invited geospatial information enthusiasts to join the Jornadas IDERA. This annual event has become a tradition, and in 2023 it was held in the beautiful city of Santa Rosa, in the province of La Pampa, Argentina. IDERA takes pride in providing a space where experts can share and celebrate advances in the field of geospatial information.

The Kan team took part in this event, whose central aim was to promote the efficient and timely publication of geospatial data, products and services in order to support evidence-based decision making. The XVII Jornadas IDERA were the culmination of this effort, becoming the geospatial event of the year in Argentina. It was an invaluable moment to exchange ideas and discuss the advances and challenges related to publishing and using open, interoperable and accessible geospatial information for the country's development.

Under the motto “The IDERA community towards an integrated geospatial information framework”, the XVII Jornadas IDERA provided a space to reflect on emerging global proposals aimed at developing, integrating and strengthening geospatial information management. This approach will make it possible to improve Spatial Data Infrastructures at the different jurisdictional levels in Argentina.

The agenda of the XVII Jornadas IDERA was packed with exciting events and interesting presentations. The workshops and talks we gave from Kan were the following:

- Institutional presentation of KAN in the networking space

- Workshop “Boost the use of your geodata with GeoNode 4”

- Presentation of success stories in the provinces group

- Workshop “Field data collection with Kobo”

- Talk “Development of a System for Integrated Wetland Monitoring and Management Based on Satellite Information”

We also took the opportunity to share and attend other talks and exhibits by our colleagues. Many thanks to IDERA for this unique opportunity to connect with other experts, learn from their experiences and contribute to the advancement of the geospatial information community in Argentina. See you next year!

-

sur OGC forms new GeoParquet Standards Working Group

Publié: 24 August 2023, 5:00pm CEST par Simon Chester

The Open Geospatial Consortium (OGC) is excited to announce the formation of the OGC GeoParquet Standards Working Group (SWG). The new SWG will work to advance the GeoParquet encoding format for adoption as an OGC Encoding Standard for cloud-native vector data.

GeoParquet adds geospatial types to Apache Parquet, described by Apache as “an open source, column-oriented data file format designed for efficient data storage and retrieval. It provides efficient data compression and encoding schemes with enhanced performance to handle complex data in bulk.” For an introduction to the GeoParquet format, see this blog post.

GeoParquet started over 3 years ago as a community effort by different Open Source Projects and organizations that have committed to its implementation and support.

OGC is advancing a number of Standards to enable cloud-native geospatial ecosystems. GeoParquet fits in the group of data encoding Standards that are highly performant for large, cloud-based data stores, such as Cloud Optimized GeoTIFF for tiled rasters and Zarr for datacubes. GeoParquet will, in time, enable vector datasets to be as readily accessible from the cloud as the other formats already well-used in the community.

The GeoParquet SWG will take the initial efforts incubated in OGC’s GeoParquet GitHub repository as a draft specification from which a candidate Standard will be developed. As with many other recent OGC Standards, the repository will remain open to contributions from outside OGC and documentation will evolve in concert with prototype implementations.

GeoParquet will be another encoding of the OGC Simple Features Standard, and as such will handle all Simple Feature geometries. While other OGC Standards also encode Simple Features, GeoParquet is intended to be optimized for native use in cloud environments. It is expected that GeoParquet will be tested as an encoding to be accessed by the OGC API family of Standards. The new SWG expects to have a candidate Standard ready for review and approval within one year.

The OGC GeoParquet Standards Working Group Charter is publicly available via the OGC Portal.

OGC Members can join the GeoParquet Standards Working Group via the OGC Portal. Non-OGC members are welcome to contribute via OGC’s GeoParquet GitHub repository or by joining the OGC GeoParquet SWG Mailing List. Non-OGC members who would like to know more about full participation in the SWG are encouraged to contact the OGC Standards Program.

The post OGC forms new GeoParquet Standards Working Group appeared first on Open Geospatial Consortium.

-

sur Stefano Costa: Gli atti del workshop Archeofoss 2022 sono stati pubblicati

Publié: 23 August 2023, 2:38pm CEST

The proceedings of the Archeofoss 2022 workshop have been published in open access in Archeologia e Calcolatori. You can find them here [www.archcalc.cnr.it] as issue 34.1 of the journal.

I edited this volume together with Julian Bogdani, so it is a source of satisfaction, also because of how quickly we reached publication thanks to the collective work of the authors, the reviewers, the editorial staff and the publisher.

Something is missing from this volume compared with the richness of the two days of meetings, the seven thematic sessions, and the discussions led by the session moderators, which were hybrid yet very lively. The gap is partly physiological, but it partly stems from a number of authors who did not submit their contribution for publication. For example, in the session on stratigraphic data that I moderated with Emanuel Demetrescu, 7 talks were presented but only 2 made it into the volume as papers.

In the coming years we will have to do more to make sure the proceedings reflect the conference even more faithfully.

We will meet again with the Archeofoss community in Turin in December 2023.

-

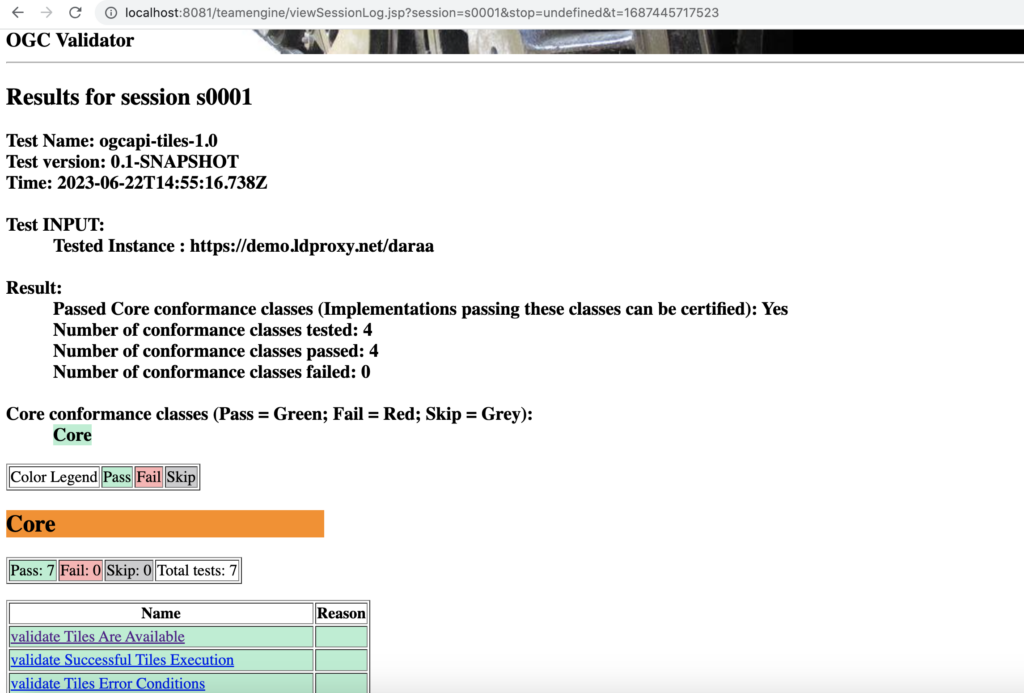

sur QGIS Blog: QGIS server 3.28 is officially OGC compliant

Publié: 22 August 2023, 2:05pm CEST

QGIS Server provides numerous services like WMS, WFS, WCS, WMTS and OGC API for Features. In recent years, a lot of effort has gone into offering a robust implementation of the WMS 1.3.0 specification.

We are pleased to announce that QGIS Server LTR 3.28 is now certified against WMS 1.3.0.

This formal OGC certification process is performed once a year, specifically for the Long Term Release versions. But, as every change in QGIS source code is now tested against the formal OGC test suites (using OGC TeamEngine) to avoid any kind of regressions, you can always check any revision of the code against OGC failures in our Github continuous integration results.

All this has been possible thanks to QGIS’s sustaining members and contributors.

-

sur OGC, UKHO, and partners release the IGIF-(M)SDI Maturity Roadmap

Publié: 21 August 2023, 5:00pm CEST par Simon Chester

The Open Geospatial Consortium (OGC) is pleased to host and release the first iteration of the IGIF-(M)SDI Maturity Roadmap and supporting materials for community consideration and engagement across not only the marine domain, but any geospatial domain connected to the oceans.

Developed as part of OGC’s ongoing Federated Marine Spatial Data Infrastructure (FMSDI) Initiative, the Integrated Geospatial Information Framework – (Marine) Spatial Data Infrastructure (IGIF-(M)SDI) Maturity Roadmap is a quick-start guide for nations and marine organizations that seeks to advance and simplify efforts in Marine SDI and ensure their alignment with the UN-IGIF principles.

“The IGIF-MSDI maturity roadmap is an important step that supports a holistic understanding of data-exchange and -processing environments,” commented OGC Chief Technology Innovation Officer, Ingo Simonis, Ph.D. “The Roadmap enhances and complements the usual technological focus of SDIs with the equally important criteria outlined in the IGIF Principles. With the Roadmap, OGC continues its engagement and research in support of powerful, sustainable, interoperable geospatial ecosystems at all levels, including technology & standards, policies, communities, education, and capacity building efforts.”

One of the key messages of the document is that an (M)SDI is a continual journey and not an “end state” of expensive technological solutions. The document asserts that nations are sovereign in what manner of (M)SDI they genuinely need for their national requirements, and not governed by an externally imposed or presumed level of technological sophistication.

“Working collaboratively with partners at the World Bank, NOAA, OGC, IHO, and private industry, The UK Hydrographic Office (UKHO) believes that the IGIF-(M)SDI Maturity Roadmap will help many Governments begin their IGIF-aligned digital transformation journeys – whether that be within the Marine or Terrestrial domains,” commented Dr. Gerald J Wong, Data Governance Lead, UKHO.

“As an accessible “Quick Start” or “Stepping Stone” toolkit, the core of the IGIF-(M)SDI Maturity Roadmap is formed by the World Bank SDI Diagnostic Toolkit where, with contributions from IHO and OGC, its Terrestrial heritage was augmented to maximize its benefits to the Marine community. The IGIF-(M)SDI Maturity Roadmap is aligned with the UN-IGIF principles and is fully interoperable with Terrestrial interests.”

When properly executed, the resulting MSDI Diagnostic Toolkit provides a quantitative assessment for nations or marine agencies to baseline their MSDI maturity, as aligned to the UN-IGIF principles. The modular IHO and OGC additions ensure interoperability with the World Bank IGIF methodology, which can lead to the financing of approved MSDI development projects. Even as an independent tool, undertaking an MSDI assessment provides a clear reference point that’s aligned with international Best Practice. Without such a starting point, progress towards any MSDI end state will be difficult to govern and manage.

“As the provider of ADMIRALTY navigation products and services worldwide, the UKHO supports nations in unlocking the many and varied benefits of their marine space,” commented James Carey, Deputy Chief Data Officer at the UKHO. “We are a strategic member of the Open Geospatial Consortium and proudly lend our expertise to the development of Marine Spatial Data Infrastructure (MSDI), as an enabler of security, prosperity, and environmental stewardship. By fusing marine data with spatial insights it is possible to forge a path to a more interconnected world where oceans inspire growth and communities prosper.”

The IGIF-(M)SDI Maturity Roadmap and related resources are available for free on OGC’s IGIF-(M)SDI Maturity Roadmap webpage.

To best inform future revisions, iterations, and the optimization of the Roadmap, feedback and applied experiences from the geospatial community are sought via OGC Member Meetings, Forums, or directly.

The IGIF-(M)SDI Maturity Roadmap is an independent initiative not endorsed by or officially connected to, but in alignment and support of, the mission, vision, and goals of the United Nations Initiative on Global Geospatial Information Management (UN-GGIM).

The post OGC, UKHO, and partners release the IGIF-(M)SDI Maturity Roadmap appeared first on Open Geospatial Consortium.

-

sur Sean Gillies: Bear training week ~6 recap

Publié: 20 August 2023, 10:14pm CEST

For fun I'm using the bitwise complement operator ~ in the title of this post. Race week is week ~0. On Monday, it was 6 weeks to race week. I'm starting to feel fit, close to my 2020-2021 form.

The numbers for the week:

16 hours, 54 minutes

71 miles

12,165 feet D+
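As an aside on the bitwise complement in the title: assuming Python semantics (the post does not name a language), `~n` equals `-n - 1`, so `~0` indexes the last item of a sequence and `~6` the item six places before it. The ten-week calendar below is hypothetical.

```python
# In Python, ~n equals -n - 1 for integers.
assert ~0 == -1 and ~6 == -7

# Hypothetical training calendar whose last entry is race week.
weeks = [f"week {i}" for i in range(1, 11)]
race_week = weeks[~0]      # last element, same as weeks[-1]
six_weeks_out = weeks[~6]  # seventh from the end, same as weeks[-7]
print(race_week, "|", six_weeks_out)
```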

I've run six days in a row and my shortest run was today's: an hour and 20 minutes. I went out for five hours in Rocky Mountain National Park on Wednesday, two hours in Lory State Park on Friday, and five and a half hours at Horsetooth Open Space on Saturday.

Soaking hot and tired feet in the Big Thompson River below Fern Lake in RMNP.

Below the Westridge Wall in Lory S.P.

Alone on Arthur's Rock, looking NE across the reservoir and plains.

Towers trail tailgating

A bear was active around Towers Trail yesterday, but successfully avoided me. According to some bikers, it crossed the trail behind my back near the top during my first lap. If I'd turned when I heard them shouting, I might have seen it. I know there are bears up there, but have never seen one while I've been on the trail. It's a good time to be filling up on chokecherries, that's for sure.

Next week I'm going to increase my training volume a little more. Instead of two 5.5 hour runs, I'll aim for 3 x 4 hours.

-

sur Free and Open Source GIS Ramblings: I’ve archived my Tweets: Goodbye Twitter, Hello Mastodon

Publié: 20 August 2023, 6:38pm CEST

Today, Jeff Sikes @box464@firefish.social, alerted me to the fact that “Twitter has removed all media attachments from 2014 and prior” (source: [https:]] ). So far, it seems unclear whether this was intentional or a system failure (source: [https:]] ).

Since I’ve been on Twitter since 2011, this means that some media files are now lost. While the loss of a few low-res images is probably not a major loss for humanity, I would prefer to have some control over when and how content I created vanishes. So, to avoid losing more content, I have followed Jeff’s recommendation to create a proper archival page:

It is based on an export I pulled in October 2022 when I started to use Mastodon as my primary social media account. Unfortunately, this export did not include media files.

To follow me in the future, find me on:

Btw, a recent study published on Nature News shows that Mastodon is the top-ranking Twitter replacement for scientists.

To find other interesting people on Mastodon, there are many useful tools and lists, including, for example:

-

sur European Innovation, Global Impact

Publié: 17 August 2023, 12:49pm CEST par Simon Chester

From Europe to the World, OGC’s Collaborative Solutions and Innovation (COSI) Program is proud of its ongoing contributions to European research topics related to geospatial data. The research topics are driven by projects co-funded by the European Commission (EC) and cover many different domains and fields of application, including data spaces, climate, digital building permits, agriculture, digital twins for the oceans, knowledge generation, and beyond. While these topics are high on the European research agenda, the challenges – as well as their solutions – have global application.

These EC-funded projects are organised as small or large consortiums where different organisations cover different aspects of the projects’ objectives. As one such organisation, OGC is proud to play its part in the European Digital Strategy that is helping to ensure a secure and sustainable life for citizens of Europe and beyond.

OGC’s COSI Program conducts and organises its research around a central theme of “Full Spectrum Interoperability and Agile Reference Architecture.” Full Spectrum Interoperability refers to capturing the many different facets of interoperability that exist between systems. Agile Reference Architecture explores how software architectures can be developed and operated in a cost-efficient, agile, and sustainable manner that also maximises interoperability between systems. This research theme is therefore complementary to the European Digital Strategy.

Much of OGC’s current European work was showcased at the OGC European Innovation Days at Data Week Leipzig 2023. This blog post serves to provide an overview of that work and more for those who couldn’t attend – and who don’t want to have to wait for the next OGC European Innovation Days showcase, to be held July 2024 at FOSS4G Europe in Tartu, Estonia.

Data Spaces

Strong progress is being made towards Common European Data Spaces with the projects All Data for Green Deal (AD4GD) and Urban Data Space for Green Deal (USAGE). In both of these projects, OGC is contributing to the development of interoperable, federated systems that support information dissemination and knowledge generation. Such systems will use OGC Standards to enable interoperability at several technical and administrative levels and optimise the value chain that transforms raw data into decision-ready information.

AD4GD’s mission is to co-create and shape the European Green Deal Data Space as an open hub for FAIR (Findable, Accessible, Interoperable, and Reusable) data and standards-based services that support the key priorities of pollution, biodiversity, and climate change. The focus is on interoperability concepts that will bridge the semantic and technology gaps that are currently preventing stakeholders and application domains from accessing multi-disciplinary and multi-scale data. These gaps are also impeding the full exploitation of processing services and processing platforms at different levels, including Cloud, HPC and edge computing. AD4GD recently published this blog post summarising its second plenary meeting, which was co-located with Data Week Leipzig 2023.

The Horizon Europe USAGE project aims to provide solutions and mechanisms for making city-level environmental and climate data FAIR – and thus available to everyone. Leveraging standards for data and service interoperability, such solutions combine innovative governance mechanisms, consolidated arrangements, AI-based tools, and data analytics to streamline the sharing, access, and use of authoritative and crowdsourced city-level Earth Observation (EO) and Internet of Things (IoT) data.

In both of these projects, OGC Standards will play a fundamental part in enabling the resulting FAIR solutions. The main research challenge is developing Building Blocks for common data problems. These Building Blocks bring together data models, examples, code snippets, and schemas, and undergo continuous testing to make them easily accessible and usable by developers, modellers, and users. The goal is to identify and describe common patterns that exist across communities. This will lead to enhanced interoperability within and between data spaces.

Interested in learning more about Data Spaces? OGC will host a session on European common data spaces at the 2023 INSPIRE Conference this November.

Climate

OGC’s current crop of climate-related projects seeks to support FAIR climate services and streamline the value chain that transforms raw data into decision-ready information.

Specifically, as part of the Climate Intelligence (CLINT) project, OGC is developing blueprints for transforming scientific algorithms into climate application packages that can be deployed, regardless of their backend, in the Copernicus Climate Change Service (C3S) Climate Data Store (CDS). More widely, the CLINT project seeks to develop Machine Learning (ML) techniques and algorithms that climate scientists can use to process the large climate datasets required for predicting and identifying the causes of extreme events such as heatwaves, warm nights, droughts, and tropical cyclones.

Focusing on the health impacts of climate change, the CLIMOS (Climate Monitoring and Decision Support Framework for Sand Fly-borne Diseases Detection and Mitigation with COst-benefit and Climate-policy MeasureS) project aims to mitigate the emergence, transmission, and spread of pathogens by sand flies. The project is establishing an Early Warning System and decision support tools for more accurate climate and health models. It will also provide predictions of infection risk and spread, as well as adaptation options. OGC is addressing the interoperability challenges faced when combining health, environmental, Earth observation, and climate model data.

Digital Building Permits

OGC is bringing its geospatial expertise to the digitalisation of building permits across two projects: ACCORD and CHEK. Although their focus differs, both projects aim to transform what is currently a largely manual process into a semi-automated one that allows building applications to be submitted in digital form.

In support of sustainability and resource conservation, the ACCORD (Automated Compliance Checks for Construction, Renovation, or Demolition works) project has a strong focus on regulations analysis and the use of ontologies and linked data to automate compliance checks. ACCORD will develop a semantic framework for European digital building permit processes, regulations, data, and tools. This framework will drive the formalisation of rules and the integration of existing compliance tools as Standards-based microservices, for example using OGC APIs. The solutions and tools being developed by ACCORD will provide consistency, interoperability, and reliability with national regulatory frameworks, processes, and standards.

The CHEK (Change toolkit for digital building permit) project is looking at the entire workflow for the digitalisation of building permits and is facilitating the introduction of digital building permit procedures for municipalities by developing flexible, adaptable solutions that take into account all the rules and conditions of the procedure. As with ACCORD, CHEK is using a Standards-based microservices approach to its architecture. CHEK will also develop training for municipalities, which will be made available through the Location Innovation Academy (see below).

As part of CHEK, OGC is investigating how needs-based data models can be derived dynamically as profiles of common conceptual models. The goal is to leave behind the basic problems of standardised data models. Due to their ambition to comprehensively represent a domain, they tend to be over-specified. On the other hand, to adapt to the needs of different use cases, they allow too much flexibility in implementation and modelling details. OGC is currently focused mostly on the transformation of administrative data using ontologies generated from CityGML and CityJSON.

Agriculture

The DEMETER project, which is now coming to an end, has helped to digitally transform Europe’s agri-food sector. DEMETER adopted advanced IoT technologies, data science, and smart farming to ensure the long-term viability and sustainability of the agriculture sector. One of the key results is the production of an Agriculture Information Model (AIM). The AIM is a data model and ontology for agriculture applications that ensures semantic interoperability between data and components involved in agri-food applications. To further enhance the AIM, OGC has now formed the Agriculture Information Model Standards Working Group (AIM SWG). The OGC AIM will provide a common language for agriculture applications to harmonise and improve data and metadata exchange by defining the required data elements, including concepts, properties, and relationships relevant to agriculture applications, as well as their associated semantics for information exchange.

Oceans and the Blue Economy

The Iliad Digital Twins of the Ocean project is developing a federated, multidimensional representation of the maritime and oceanic ecosystem. As with many of these projects, OGC Standards will be used to enhance the value chain as data sourced from smart IoT, satellite Earth Observations, and Citizen Science is transformed into decision-ready information and knowledge. As such, it fits perfectly into the OGC focus theme of “marine spaces.”

Iliad is developing several Digital Twin pilots in a number of key areas, including: wind energy; renewable energy from the ocean (currents, waves & floating solar); fisheries & aquaculture; marine traffic & harbour safety; pollution; metocean data (hindcasts, nowcasts & forecasts); biodiversity assessments & monitoring; and insurance for marine & maritime activities.

OGC is involved in defining the standards-based Data Transfer Object (DTO) data management APIs built on the OGC APIs framework, as well as ensuring semantic interoperability between the APIs, Citizen Science, and thematic domains. Finally, we are leading standardisation and best practice tasks to enable the solution to fit within the ecosystem of the Digital Twin of the Earth.

Location Innovation Academy

The recently launched Location Innovation Academy is a free online training program based on the knowledge and ideas generated by the GeoE3 project. It empowers users to improve the accessibility, interoperability, and integration of their geospatial data and services. The Academy is currently targeted towards national mapping agencies, meteorological institutions, and other organisations in different countries that produce or use geospatial data. It aims to help overcome the interoperability gaps that still exist between European countries.

The growing online training package currently includes three different courses for developing skills in: Data Management; Service Management; and Data and Service Integration.

The Location Innovation Academy also serves as the experimentation platform for a future OGC Academy that will help learners access and exploit the enormous amounts of knowledge generated by OGC. The Location Innovation Academy is hosted by OGC at academy.ogc.org. In support of the academy, OGC is also a Pact For Skills Member.

The Academy continues to be the main component of the DIS4SME project. DIS4SME aims to provide SMEs with high quality specialised training courses on data interoperability across different areas, including location data.

By Europe, for the World

As a participant in projects funded by the European Commission, OGC’s COSI Program – with its complementary research theme of “Full Spectrum Interoperability and Agile Reference Architecture” – is proud to develop valuable solutions that support the European Digital Strategy and help ensure a secure and sustainable life for not only the citizens of Europe, but the entire world.

The next OGC European Innovation Days showcase will be held July 2024 at FOSS4G Europe in Tartu, Estonia.

OGC is also hosting a session on European common data spaces at the 2023 INSPIRE Conference this November.

To stay up to date on all things OGC, including European projects, funding opportunities, Standards development, events, and more, subscribe to the OGC Newsletter.

The post European Innovation, Global Impact appeared first on Open Geospatial Consortium.

-

on QGIS Blog: Plugin Update June & July 2023

Published: 16 August 2023, 10:27pm CEST

In this summer plugin update, we explore 51 new plugins that have been published in the QGIS plugin repository.

Here’s the quick overview in reverse chronological order. If any of the names or short descriptions piques your interest, you can find the direct link to the plugin page in the table below the screenshot.