You can read the post on the La Minute blog for more information about RSS!

Channels

3759 items (18 unread) in 55 channels

In the press (1 unread)

- Décryptagéo, l'information géographique
- Cybergeo
- Revue Internationale de Géomatique (RIG)
- SIGMAG & SIGTV.FR - Un autre regard sur la géomatique (1 unread)
- Mappemonde

From the publishers (1 unread)

- Imagerie Géospatiale
- Toute l’actualité des Geoservices de l'IGN
- arcOrama, un blog sur les SIG, ceux d ESRI en particulier (1 unread)
- arcOpole - Actualités du Programme
- Géoclip, le générateur d'observatoires cartographiques
- Blog GEOCONCEPT FR

French-speaking geomatics web (16 unread)

- Géoblogs (GeoRezo.net)
- Conseil national de l'information géolocalisée
- Geotribu (1 unread)
- Les cafés géographiques (3 unread)
- UrbaLine (le blog d'Aline sur l'urba, la géomatique, et l'habitat)
- Icem7
- Séries temporelles (CESBIO) (2 unread)
- Datafoncier, données pour les territoires (Cerema)
- Cartes et figures du monde (3 unread)
- SIGEA: actualités des SIG pour l'enseignement agricole
- Data and GIS tips
- Neogeo Technologies (2 unread)
- ReLucBlog
- L'Atelier de Cartographie
- My Geomatic
- archeomatic (le blog d'un archéologue à l’INRAP)
- Cartographies numériques (5 unread)
- Veille cartographie
- Makina Corpus
- Oslandia
- Camptocamp
- Carnet (neo)cartographique
- Le blog de Geomatys
- GEOMATIQUE
- Geomatick
- CartONG (actualités)

Open Geospatial Consortium (OGC)

-


15:00 OGC Approves Model for Underground Data Definition and Integration as Official Standard

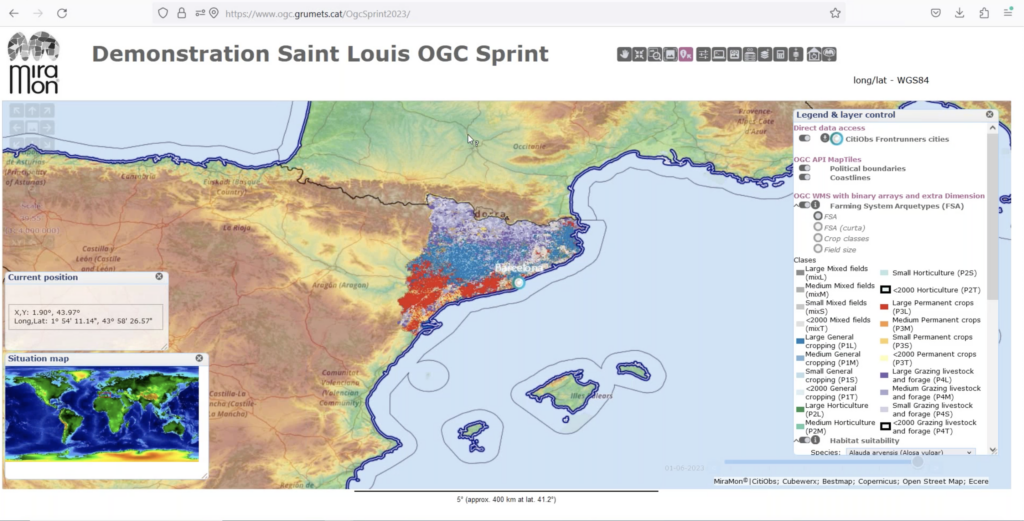

on Open Geospatial Consortium (OGC)

The Open Geospatial Consortium (OGC) is excited to announce that the OGC Membership has approved version 1.0 of the OGC Model for Underground Data Definition and Integration (MUDDI) Part 1: Conceptual Model for adoption as an official OGC Standard. MUDDI serves as a framework to make datasets that use different information for underground objects interoperable, exchangeable, and more easily manageable.

MUDDI represents real-world objects found underground. It was designed as a common basis for creating implementations that make different types of subsurface data (such as those relating to utilities, transport infrastructure, soils, ground water, or environmental parameters) interoperable, in support of a variety of use cases and across different jurisdictions and user communities. The case for better subsurface data, and an explanation of the usefulness of the MUDDI data model, are made in the MUDDI For Everyone Guide.

A key application domain, and one of the main motivations for creating MUDDI, is utilities and the protection of utility infrastructure. Indeed, OGC’s MUDDI Model was successfully used in pilot testing for the National Underground Asset Register (NUAR), a program led by the UK Government’s Geospatial Commission. More information on NUAR can be found here.

MUDDI aims to be comprehensive and provides a sufficient level of detail to address many different application use cases, such as:

- Routine street excavations;

- Emergency response;

- Utility maintenance programs;

- Large scale construction projects;

- Disaster planning;

- Disaster response;

- Environmental interactions with infrastructure;

- Climate change mitigation; or

- Smart Cities programs.

The MUDDI Conceptual Model gives the implementation community the flexibility to tailor implementations to specific requirements in a local, regional, or national context. The standardization targets are specific MUDDI implementations in one or more encodings, such as GML (Geography Markup Language), SFS (Simple Features SQL), GeoPackage, or JSON-FG, which are expected to become standards in future parts of the MUDDI standards family. Both GML and JSON-FG are supported by the OGC API – Features Standard. SFS is supported by a number of database systems.
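As a rough illustration of how a MUDDI implementation might be queried once it is served through OGC API – Features, the Python sketch below builds an items request using the `/collections/{collectionId}/items` path and the `bbox` and `limit` query parameters defined in OGC API – Features Part 1. The server URL and the collection name `utility_links` are hypothetical: MUDDI Part 1 is a conceptual model and does not itself define collection names or an encoding.

```python
from urllib.parse import quote, urlencode

def features_items_url(base_url, collection_id, bbox=None, limit=None):
    """Build an OGC API - Features Part 1 'items' request URL."""
    # Path pattern from OGC API - Features Part 1: /collections/{collectionId}/items
    url = f"{base_url.rstrip('/')}/collections/{quote(collection_id, safe='')}/items"
    params = {}
    if bbox is not None:
        # Part 1 bbox is minx,miny,maxx,maxy, in CRS84 (lon/lat) by default
        params["bbox"] = ",".join(str(v) for v in bbox)
    if limit is not None:
        params["limit"] = str(limit)
    if params:
        # keep commas literal instead of percent-encoding them as %2C
        url += "?" + urlencode(params, safe=",")
    return url

# Hypothetical server and collection, for illustration only
url = features_items_url("https://example.org/muddi/", "utility_links",
                         bbox=(-0.13, 51.5, -0.12, 51.51), limit=100)
print(url)
```

The same request could be answered in GML, GeoJSON, or JSON-FG depending on what the server offers; content negotiation is left out of this sketch.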

MUDDI consists of a core of mandatory classes describing built infrastructure networks (such as utility networks) together with a number of optional feature classes, properties, and relationships related to the natural and built underground environment. The creation of implementations targeted to defined use cases and user communities also allows the extension of the concepts provided in MUDDI.

The early work for crafting this OGC Standard was undertaken in the OGC Underground Infrastructure Concept Study, sponsored by Ordnance Survey, Singapore Land Authority, and The Fund for the City of New York – Center for Geospatial Innovation.

The Concept Study was followed by the Underground Infrastructure Pilot and MUDDI ETL-Plugfest workshop, as well as close collaboration with early implementers of MUDDI, such as the UK Geospatial Commission. Several implementations of MUDDI were thoroughly tested in the OGC Open Standards Code Sprint in October/November 2023. The results are described in the OGC 2023 Open Standards Code Sprint Summary Engineering Report and a blog post entitled Going underground: developing and testing an international standard for subsurface data, which describes the experiences and lessons learned from the Geospatial Commission’s attendance at the Code Sprint.

Version 1.0 of the MUDDI Conceptual Model Standard is the outcome of those initiatives, as well as the work and dedication of the MUDDI Standards Working Group, which led the development of the Standard, including:

- Editors:
  - Alan Leidner, New York City Geospatial Information System and Mapping Organization (GISMO)
  - Carsten Roensdorf, Ordnance Survey
  - Neil Brammall, Geospatial Commission, UK Government
  - Liesbeth Rombouts, Athumi
  - Joshua Lieberman
  - Andrew Hughes, British Geological Survey, United Kingdom Research and Innovation
- Contributors:
  - Dean Hintz, Safe Software
  - Allan Jamieson, Ordnance Survey
  - Chris Popplestone, Ordnance Survey

OGC Members interested in staying up to date on the progress of this Standard, or contributing to its development, are encouraged to join the MUDDI Standards Working Group via the OGC Portal. Non-OGC members who would like to know more about participating in this SWG are encouraged to contact the OGC Standards Program.

As with any OGC standard, the open MUDDI Part 1: Conceptual Model Standard is free to download and implement. Interested parties can view and download the standard from OGC’s Model for Underground Data Definition and Integration (MUDDI) Standard Page.

The post OGC Approves Model for Underground Data Definition and Integration as Official Standard appeared first on Open Geospatial Consortium.

-


22:05 Public Services on the Map: A Decade of Success

on Open Geospatial Consortium (OGC)

The Netherlands’ Cadastre, Land Registry, and Mapping Agency (Kadaster) maintains the nation’s register of land and property rights, ships, aircraft, and telecom networks. It is also responsible for national mapping and for maintaining the nation’s reference coordinate system, and it serves as an advisory body on land-use issues and national spatial data infrastructures. In its public service role, Kadaster handles millions of transactions a day.

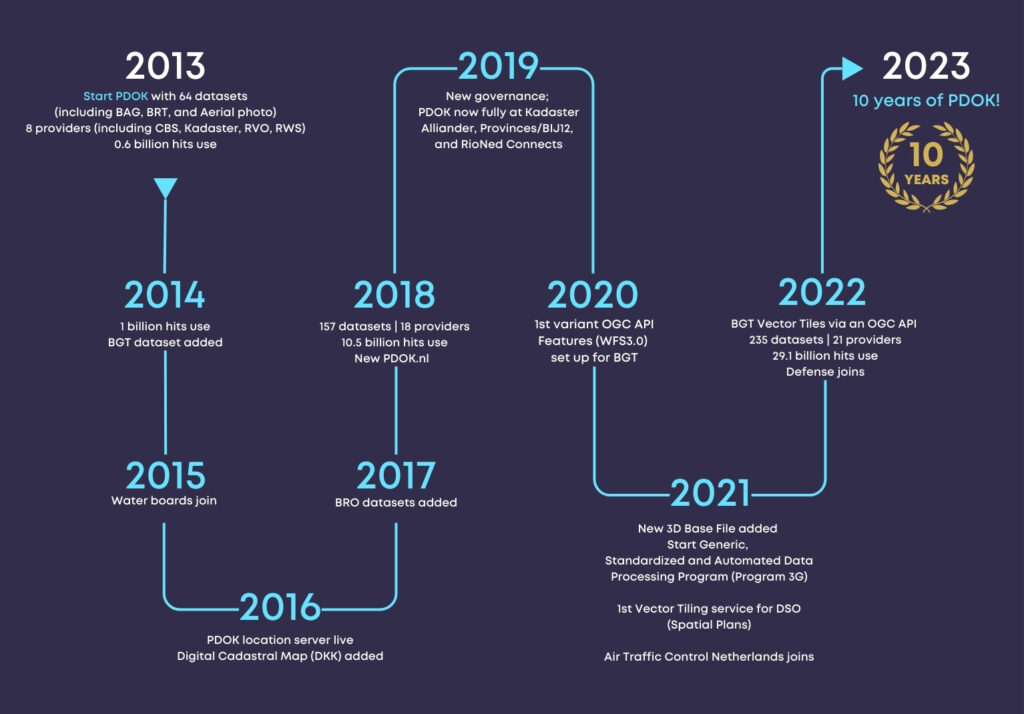

Celebrating 10 years: PDOK’s evolution.

PDOK (Publieke Dienstverlening Op de Kaart, “Public Services on the Map”) was launched in 2013 to make the Dutch government’s geospatial datasets Findable, Accessible, Interoperable, and Reusable (FAIR), as mandated by the EU’s INSPIRE Directive. OGC Standards have been foundational in ensuring that the country’s Spatial Data Infrastructure (SDI) is scalable, available, and responsive.

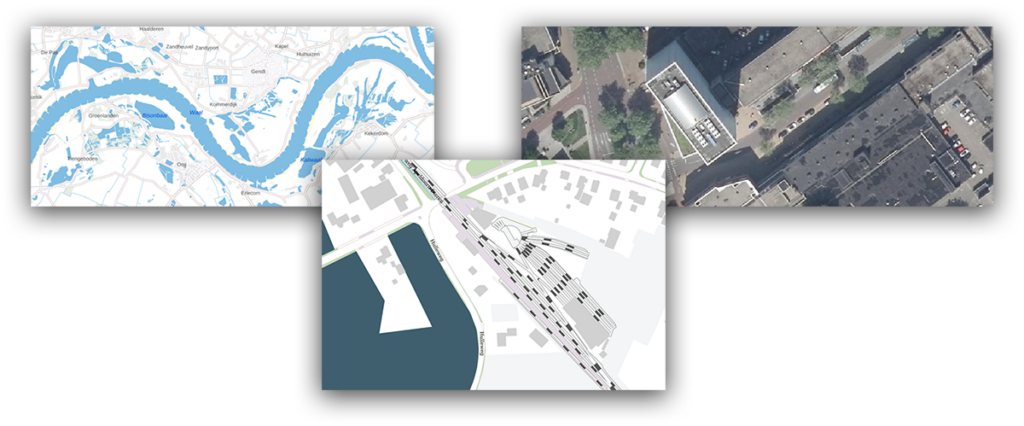

PDOK serves base maps and Earth Observation data for the whole country. It includes the Key Topography Register (Basisregistratie Topografie, BRT), aerial imagery (updated annually at 8 and 25 cm GSD), and the Large Scale Topography Register (Basisregistratie Grootschalige Topografie, BGT). The BGT is a detailed digital base map (between 1:500 and 1:5,000) that depicts building footprints, roads, water bodies, railway lines, agricultural land, and parcel boundaries. PDOK also serves a 3D dataset of buildings and terrain created from topography (BGT), building footprints (from the Building and Addresses Register, or BAG), and height information (from aerial photography).

When PDOK was launched in 2013, it hosted 40 datasets and handled 580 million server requests annually. Today it hosts 210 datasets and handles 30 billion server requests annually, an increase of roughly 5,000% in the number of service requests handled by the platform.
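The growth figure is a rounded one. A quick check with the numbers quoted above, using the usual definition of percentage increase, (new − old) / old × 100:

```python
def percent_increase(old, new):
    """Relative change from old to new, expressed in percent."""
    return (new - old) / old * 100

requests_2013 = 580_000_000       # annual server requests at launch (2013)
requests_now = 30_000_000_000     # annual server requests today
datasets_2013, datasets_now = 40, 210

req_pct = percent_increase(requests_2013, requests_now)
ds_pct = percent_increase(datasets_2013, datasets_now)
print(f"requests: +{req_pct:,.0f}% ({requests_now / requests_2013:.1f}x)")
print(f"datasets: +{ds_pct:,.0f}%")
```

The request volume grew about 52-fold (an increase of about 5,070%), while the number of hosted datasets grew by 425%.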

Examples of PDOK basemaps and imagery.

Standards Enabling Success


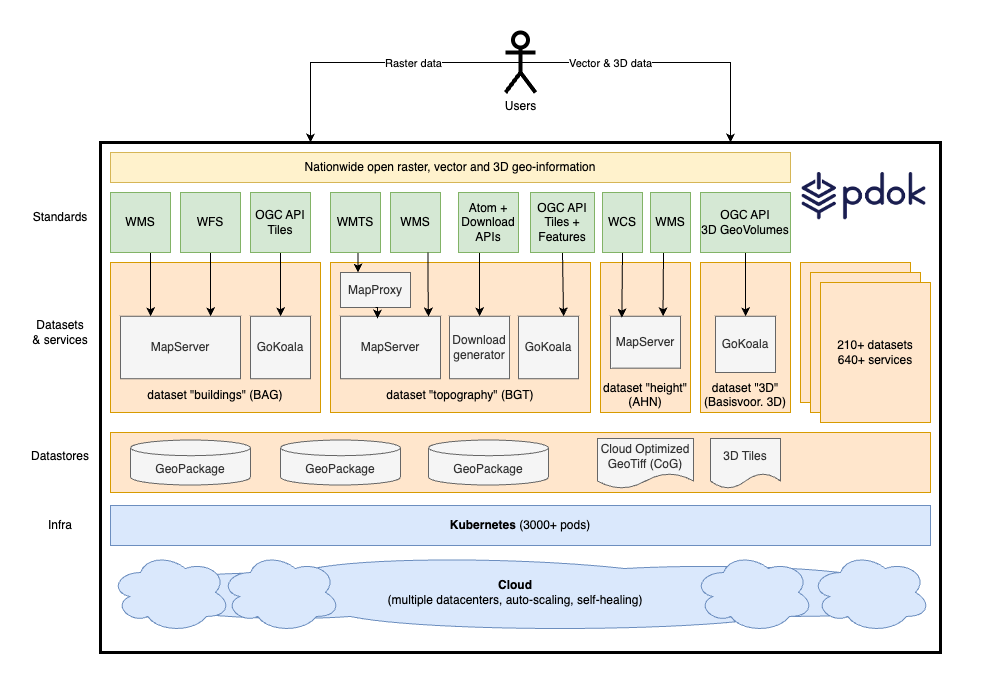

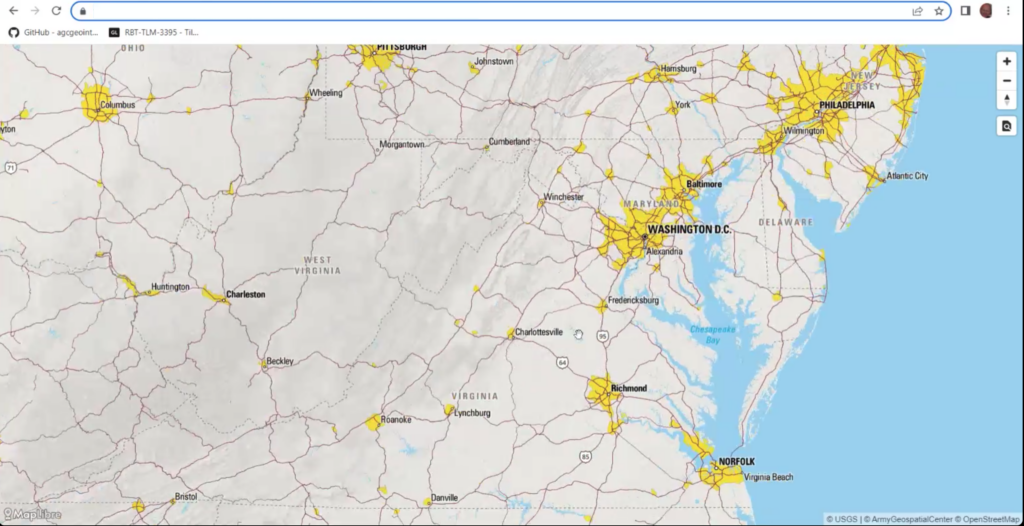

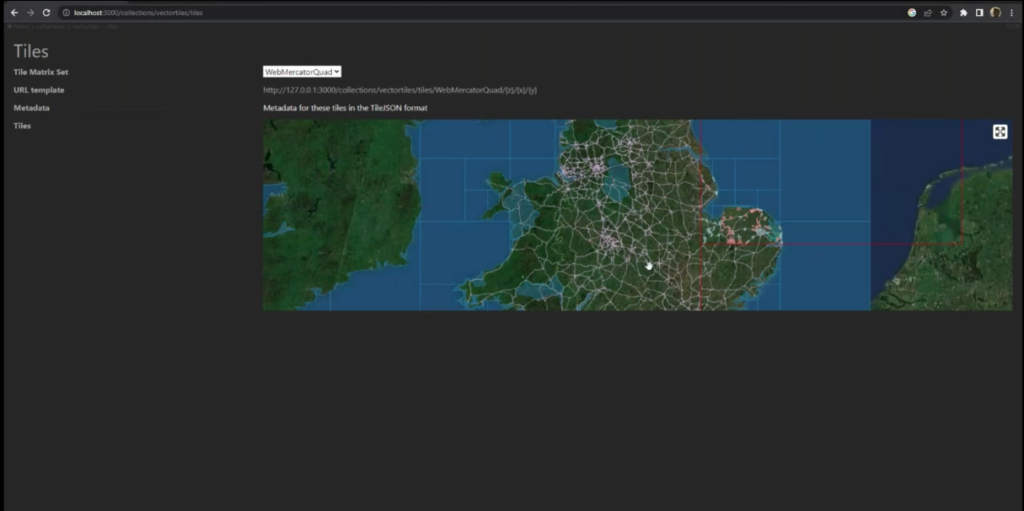

The majority of datasets on PDOK are made available using OGC’s popular Web Map Service (WMS) and Web Feature Service (WFS) Standards. The BRT is available through the Web Map Tile Service (WMTS) Standard in three coordinate reference systems (EPSG:28992, EPSG:25831, and EPSG:3857).
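To make the legacy interfaces concrete, the sketch below assembles a WMS 1.3.0 GetMap request (key-value-pair encoding) in EPSG:28992 (Amersfoort / RD New). The endpoint URL and the layer name `basemap` are hypothetical placeholders, not PDOK’s actual service addresses.

```python
from urllib.parse import urlencode

def wms_getmap_url(endpoint, layer, bbox, width, height,
                   crs="EPSG:28992", fmt="image/png"):
    """Build a WMS 1.3.0 GetMap request URL (key-value-pair encoding)."""
    params = {
        "SERVICE": "WMS",
        "VERSION": "1.3.0",
        "REQUEST": "GetMap",
        "LAYERS": layer,
        "STYLES": "",            # empty value = the server's default style
        "CRS": crs,              # WMS 1.3.0 uses CRS; WMS 1.1.1 used SRS
        # EPSG:28992 axes are easting then northing (metres), so BBOX is
        # minx,miny,maxx,maxy. Beware: for EPSG:4326, WMS 1.3.0 expects
        # latitude first.
        "BBOX": ",".join(str(v) for v in bbox),
        "WIDTH": str(width),
        "HEIGHT": str(height),
        "FORMAT": fmt,
    }
    return f"{endpoint}?{urlencode(params)}"

# Hypothetical endpoint and layer; a 2 km x 2 km window rendered at 512 x 512 px
url = wms_getmap_url("https://example.org/wms", "basemap",
                     (120000, 486000, 122000, 488000), 512, 512)
print(url)
```

The server renders a finished image for each request, which is one reason high request volumes are costly to serve with WMS compared with pre-cut tiles.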

Kadaster is in the process of transitioning to the newer OGC API Standards, including OGC API – Tiles and OGC API – Features. The most frequently used key registers, such as the Building and Addresses Register (BAG), the Large Scale Topography Register (BGT), and the Key Topography Register (BRT), are being migrated to these newer OGC APIs. The focus is on OGC API – Tiles, specifically vector tiles, while query services use the OGC API – Features Standard. Earth Observation data does not yet use the newer APIs: aerial imagery is available through WMS and WMTS services and is stored as OGC Cloud Optimized GeoTIFFs (COG). 3D building data is encoded as OGC 3D Tiles and served using the OGC API – 3D GeoVolumes standard.
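The post does not give PDOK’s endpoint paths or collection names, so the following is only a sketch under stated assumptions. It shows how a client might locate a vector tile in the WebMercatorQuad tile matrix set (the EPSG:3857 grid used by most web maps) with the standard Web Mercator tiling formula, and then form an OGC API – Tiles path of the form `/collections/{collectionId}/tiles/{tileMatrixSetId}/{tileMatrix}/{tileRow}/{tileCol}`. The collection id `brt` is hypothetical, and tiles in EPSG:28992 would use a different tile matrix set that this sketch does not cover.

```python
import math

def webmercator_tile(lon, lat, zoom):
    """Return (tileCol, tileRow) of the WebMercatorQuad tile containing lon/lat.

    Valid for latitudes within the Web Mercator limit (about +/-85.0511 degrees).
    """
    n = 2 ** zoom                                   # tiles per axis at this level
    col = math.floor((lon + 180.0) / 360.0 * n)
    lat_rad = math.radians(lat)
    row = math.floor((1.0 - math.asinh(math.tan(lat_rad)) / math.pi) / 2.0 * n)
    # clamp points on the east/south edge (e.g. lon = 180) into the last tile
    return max(0, min(col, n - 1)), max(0, min(row, n - 1))

def tile_path(collection_id, zoom, row, col, tms="WebMercatorQuad"):
    """OGC API - Tiles path; for WebMercatorQuad the tileMatrix id is the zoom level."""
    return f"/collections/{collection_id}/tiles/{tms}/{zoom}/{row}/{col}"

# Hypothetical collection; a point in the Netherlands at zoom level 10
col, row = webmercator_tile(4.9, 52.37, 10)
print(tile_path("brt", 10, row, col))
```

Because each tile is addressed by a fixed (level, row, column) triple, tiles can be generated in advance and cached, which suits very high request volumes.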

The PDOK architecture and OGC Standards.

By using the newer OGC API Standards, Kadaster has found it significantly easier to handle the large volume of data in its national-scale 3D building dataset (usable at scales between 1:500 and 1:10,000), which provides an unparalleled, high-resolution perspective of the country’s built environment.

OGC APIs also make it easier to track compute and memory usage on servers, allowing Kadaster to better realize platform scalability and optimize performance.

PDOK uses the OGC API – 3D GeoVolumes to serve the 3D building dataset.

A Smooth Transition to OGC APIs

PDOK processes billions of user requests every month. Transitioning from the OGC Web Services Standards to OGC APIs has enabled Kadaster to efficiently handle both the volume of requests it receives today and the volume it can expect in the future.

It took Kadaster a little over a month to build its implementation of OGC API – Tiles. After a short learning curve, the team built implementations of OGC API – Features and OGC API – 3D GeoVolumes even faster. By delivering its geospatial tiles and feature data through APIs conformant to the OGC API Standards, Kadaster continues to make government data Findable and Accessible.

PDOK implements what Kadaster calls the 3G principle: Generiek (Generic), Geautomatiseerd (Automated), en Gestandaardiseerd (Standardized). This has led to simplified data processing, the automation of many manual processes, standardized services, and high standards in service design and operation, while remaining infrastructure independent. It has also streamlined the adoption of new OGC Standards and made PDOK better able to respond to changing user requirements.

Kadaster and OGC

Being an OGC Member allows Kadaster to leverage the collective knowledge of a global network of geospatial experts, while providing Kadaster with opportunities to contribute back to a community driven to use the power of geography and technology to solve problems faced by people and the planet.

Kadaster has recently contributed to the OGC Community by participating in an OGC Code Sprint. These hybrid online/in-person events give participants the opportunity to work on implementations of new or emerging OGC Standards.

Through OGC, Kadaster has also shared its experiences and challenges at OGC Member Meetings, contributing to the ongoing evolution of OGC Standards and Collaborative Solutions and Innovation (COSI) Program Initiatives and, more broadly, advancing the “state of the art” of geospatial technologies.

The below video provides an overview of PDOK.

The post Public Services on the Map: A Decade of Success appeared first on Open Geospatial Consortium.

-


18:13 OGC announces Christy Monaco as new Chief Operating Officer

on Open Geospatial Consortium (OGC)

The Open Geospatial Consortium (OGC) is excited to announce that Ms. Christy Monaco will soon join OGC’s senior leadership as the Consortium’s Chief Operating Officer (COO). Christy commences her new role on September 1, 2024.

Christy brings to OGC a deep understanding of the geospatial industry, including historical and current trends, gained from her experience leading operations, technological transformation, organizational change, and resource management in the government and non-profit sectors of the geospatial and intelligence communities.

“I am thrilled to be joining Peter and the amazing team at OGC,” commented Christy Monaco. “For thirty years, OGC has brought together the geospatial community to ensure interoperability of data and technology during a period of tremendous growth and change. In our increasingly interconnected world, new opportunities abound for OGC, its partners, and stakeholders to address rapidly evolving global challenges and drive innovation. I’m truly excited to take on this new role as we shape the future of geospatial technology together.”

As OGC’s COO, Christy will use her experience with federal agencies, partnership-building, event management, and member success to help grow and shape the Consortium as it looks to its next chapter and works with new and established partners and stakeholders to bring accelerated, practical, and implementable solutions to the world of geospatial.

“We are very excited to have Christy join our executive team,” commented Peter Rabley, OGC CEO. “Christy will work with me and the rest of the team to enhance and grow the support and value that OGC provides to our members through our Policy & Advocacy, Innovation, and Standards.”

Prior to her appointment at OGC, Christy was the Vice President of Programs at the U.S. Geospatial Intelligence Foundation (USGIF), where she led content development for the Foundation’s events and programs, including its annual GEOINT Symposium. Christy also oversaw the Foundation’s education & professional development initiatives and its marketing & communication activities.

About Christy Monaco

Ms. Christy Monaco is the new Chief Operating Officer (COO) at the Open Geospatial Consortium (OGC), starting September 2024. Before joining OGC, Christy was the Vice President of Programs at the U.S. Geospatial Intelligence Foundation (USGIF), a role she held since December 2020. She previously served for over 30 years in the U.S. Intelligence Community, during which she held a number of positions, including with the Office of Naval Intelligence (ONI), the Defense Intelligence Agency (DIA), and the National Geospatial-Intelligence Agency (NGA). A government executive for over half of her federal career, in 2022 she was recognized by President Biden as a Meritorious Executive in the Defense Intelligence Senior Executive Service for her contributions at NGA. Christy currently resides in Alexandria, Virginia.

The post OGC announces Christy Monaco as new Chief Operating Officer appeared first on Open Geospatial Consortium.

-


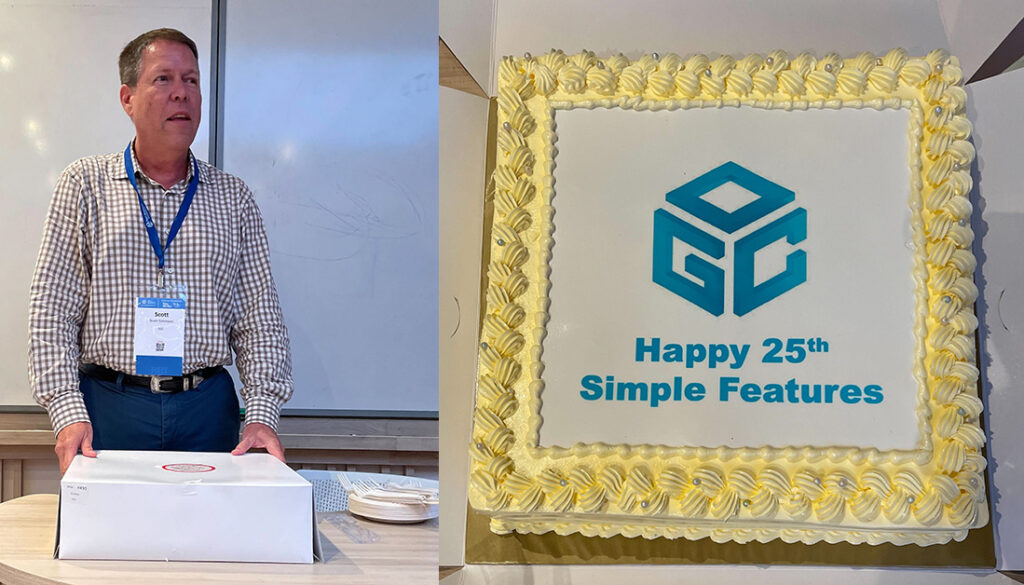

14:00 A recap of the 129th OGC Member Meeting, Montreal, Canada

on Open Geospatial Consortium (OGC)

The 129th OGC Member Meeting was held in Montreal, Canada, from June 17-21, 2024. The meeting was hosted by OGC Strategic Member Natural Resources Canada, with additional support from Esri Canada, CAE, Safe Software, and Bentley Systems. During the week, more than 200 leaders and experts from industry, academia, and government came together in person, with a further 100 online, to learn about the latest innovations from OGC Members, advance Standards, network, and shape the future of geospatial technology. The meeting also marked the beginning of OGC’s 30th anniversary celebrations, which will culminate at an event in Washington, D.C., this December.

Under the theme “Standards enabling collaboration for global challenges” the 129th Member Meeting focused on discussing, aligning, supporting, and promoting geospatial solutions that address challenges as far reaching as climate change and related disasters, and application domains as diverse as marine, Artificial Intelligence/Machine Learning (AI/ML), and beyond.

Alongside the many OGC Standards and Domain Working Group meetings, the week also included a Methane Summit, a Sensor Summit, a two-day meeting of the Canada Forum, a Quantum Computing ad hoc session, a Metadata Workshop, and the kickoff of Testbed-20.

Friday’s OGC Executive Planning Committee Meeting was hosted by CAE at its nearby factory and included a memorable tour of the factory floor. Social occasions included a Monday evening reception and a Wednesday night dinner at the Northern Italian restaurant Le Richmond. During the dinner, Andreas Matheus from Secure Dimensions GmbH was presented with the 2024 Gardels Award for his persistent efforts to ensure best practices in security and API design in OGC. Congratulations, Andreas!

Andreas Matheus (L) is presented the 2024 Gardels Award by OGC Chief Standards Officer, Scott Simmons (R).


In addition to the challenges and domains covered by the meeting theme, two further areas of discussion stood out during the week: Digital Twins & Sensors, and Ethics in Geospatial.

Digital Twins & Sensors. OGC members are increasingly demonstrating the use of digital twins of the built environment (e.g., city and building models) as the context and framework in which sensors are managed. Information sensed in the real world can be displayed on digital representations of that reality to enable a better understanding of environmental conditions, human activity, and system responses to those factors. The Urban Digital Twins DWG, the 3D Information Management DWG, and the Autonomy, Sensors, Things, Robots, and Observations DWG are each actively discussing and collaborating on this integration.

Ethics in Geospatial. The OGC Executive Planning Committee has established a new subgroup on Ethics in Geospatial to set strategy for how OGC might address or respond to the ethical use of geospatial data, or the use of those data for ethical purposes. The group will hold its initial meeting this July. If you would like to contribute to the conversation, you can sign up to the OGC Ethics in Geospatial email list here.

Building on the success of the demonstration at the 128th Member Meeting in Delft, The Netherlands, members of the OGC GeoPose and Points of Interest Standards Working Groups, in collaboration with the Open AR Cloud team, XR Masters, and Augmented City, expanded the GeoPose demonstration to include a native browser developed by OGC member Ethar, Inc. With the Ethar implementation, the team successfully demonstrated that a GeoPose can be encoded into a QR code. You can read more about the demonstration on the Open AR Cloud blog post, which includes a video showing how GeoPose can be used for anchoring content indoors and how it can be recognized by different Augmented Reality Clients.
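The post does not describe how the Ethar implementation encodes a pose into a QR code. Purely as a hedged illustration, the sketch below builds a JSON payload following the GeoPose 1.0 Basic-Quaternion structure as we understand it (a `position` with `lat`, `lon`, and `h`, plus a unit `quaternion` with `x`, `y`, `z`, `w`) and serializes it compactly. Turning that string into an actual QR image would require a separate QR library, which is not shown, and the function names here are hypothetical.

```python
import json
import math

def geopose_basic_quaternion(lat, lon, h, qx, qy, qz, qw):
    """Build a Basic-Quaternion style GeoPose dict, normalizing the quaternion."""
    norm = math.sqrt(qx * qx + qy * qy + qz * qz + qw * qw)
    if norm == 0:
        raise ValueError("orientation quaternion must be non-zero")
    return {
        "position": {"lat": lat, "lon": lon, "h": h},
        "quaternion": {"x": qx / norm, "y": qy / norm,
                       "z": qz / norm, "w": qw / norm},
    }

def qr_payload(pose):
    """Serialize without whitespace so the resulting QR code stays as small as possible."""
    return json.dumps(pose, separators=(",", ":"))

# Illustrative values: an unnormalized identity rotation (w = 2) gets scaled to w = 1
pose = geopose_basic_quaternion(45.5, -73.57, 30.0, 0.0, 0.0, 0.0, 2.0)
payload = qr_payload(pose)
print(payload)
```

Normalizing up front matters because a quaternion represents a rotation only when its length is 1.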

Opening Plenary

The Opening Plenary was hosted by OGC’s Dr. Rachel Opitz and featured a keynote presentation from Eric Loubier, Director General of the Canada Centre for Mapping and Earth Observation at Natural Resources Canada. Mr. Loubier highlighted the use of geospatial data and technologies to address the needs of Canadian citizens, with examples including flood and wildfire risk.

Introductory messages were then delivered by Louis-Martin Losier from Bentley Systems, Gordon Plunkett from Esri Canada, Hermann Brassard from CAE, and Dean Hintz from Safe Software. Finally, Prashant Shukle, Chair of the OGC Board of Directors, presented “Canada and the OGC – 30 years of impact,” which reflected on the important role that Canadian organizations have played in OGC’s 30-year history.

Special tribute was then paid to an instrumental figure in OGC’s history who sadly passed away in May this year, Dr. John Herring. John was OGC’s first Gardels Award recipient back in 1999, and also received an OGC Lifetime Achievement Award in 2021.

Chair of the OGC Board of Directors, Prashant Shukle, presents “Canada and the OGC – 30 years of impact” during the opening session.

Future Directions


This Meeting’s Future Directions session focused on Artificial Intelligence in Geoinformatics. Four presentations were made to provide updates since this topic was last addressed.

- Leveraging AI for Geospatial Reality Data: Louis-Martin Losier, Jeroen Vermeulen, Arnaud Durante (Bentley Systems)

- Spatial Web: AI agents operating in hyperspace: George Percivall (GeoRoundtable / IEEE GRSS)

- A User-Centric Process to Build AI-Powered Geospatial Applications: Pablo Fuentes (makepath)

- Bringing Geospatial Awareness to Large Language Models Using Spatial Knowledge Graphs: Nathan McEachen (TerraFrame)

The four presenters then engaged in a fascinating panel discussion moderated by Dr. Gobe Hobona of OGC. OGC Members can access the presentations and a recording on this page in the OGC Portal.

Meeting Special Sessions

The Methane Summit focused on the observation, measurement, monitoring, and spatial analysis of methane emissions. Consistent reporting of these emissions in a spatial context is key to effective decision making regarding reduction of emissions and their environmental consequences. Hence, the session also resulted in the proposal of a new OGC Standards Working Group (SWG) for EmissionML as a means to encode emission data. OGC Members can access the presentations and a recording on this page in the OGC Portal.

Directly following the Methane Summit was a linked Sensor Summit to talk more specifically about sensor model and data standardization. The focus of this Summit was on new and emerging Standards activities in OGC, including SensorThings API version 2.0, OGC API – Connected Systems and its dependent updates to the SensorWeb Standards, and the UK SAPIENT sensor architecture. OGC Members can access the presentations and a recording on this page in the OGC Portal.

The OGC Canada Forum held sessions on both Monday and Tuesday. Day 1 included an opening and the Forum Overview, followed by a panel on “The Transformative Role of Industry in National Geospatial Strategy and Spatial Data Infrastructure.” Day 2 started with an Oxford debate on the impact of standards on innovation and then included a panel on “National Geospatial Strategy in a Time of Rapid Change and Growing Challenges.” The day wrapped up with a discussion on partnerships and a closing. OGC Members can access the presentations and a recording on this page in the OGC Portal.

(L-R) Claudine Couture, Linda van den Brink, Bradford Dean, Eldrich Frazier, and Eric Loubier discussing “National Geospatial Strategy in a Time of Rapid Change and Growing Challenges” during the Canada Forum.


The Quantum Computing ad hoc session followed up on discussions at the previous Member Meeting to assess whether a new OGC Working Group should be established to examine the geospatial role of quantum computing technologies. OGC Members can access the presentations and a recording on this page in the OGC Portal.

On Friday of the meeting week, a full-day Metadata Workshop was organized by the Metadata and Catalogs Domain Working Group (DWG). The first half of the workshop set the stage with presentations on current and emerging metadata Standards and their practical use. The second half of the workshop focused on the candidate OGC GeoDCAT Standard, how GeoDCAT fits within OGC’s building block approach to Standards, and preparation for a metadata-centric OGC Code Sprint later in 2024. OGC Members can access the presentations and a recording on this page in the OGC Portal.

The next OGC Testbed has now begun! The Testbed-20 kickoff session helped participants better understand sponsor requirements and introduced both parties to the process by which OGC will manage the Testbed. As the largest Research & Development (R&D) Initiatives conducted under OGC’s COSI Program, OGC Testbeds exist at the cutting edge of technology, actively exploring and evaluating future geospatial technologies to solve today’s problems.

Testbeds provide an opportunity to engage with and lead the latest research on geospatial system design, concept development, and rapid prototyping. They also provide a business opportunity for stakeholders to mutually define, refine, and evolve service interfaces and protocols in the context of hands-on experience and feedback. The solutions developed in Testbeds eventually move into the OGC Standards Program, where they are reviewed, revised, and potentially approved as new international Open Standards that can reach millions of individuals.

Testbed-20 will drive innovation at the foundations of geospatial data ecosystems. While working across four tasks, participants will collaborate to create mechanisms that improve Integrity, Provenance, and Trust (IPT) in geospatial data systems and workflows; transform Standards for GEOINT Imagery Media for Intelligence, Surveillance, and Reconnaissance (GIMI); enhance the usability of, and investigate new applications for, GeoDataCubes; and explore options for High-Performance Geospatial Computing Optimized Formats.

The OGC Executive Planning Committee during their visit to the CAE Factory.

Closing Plenary


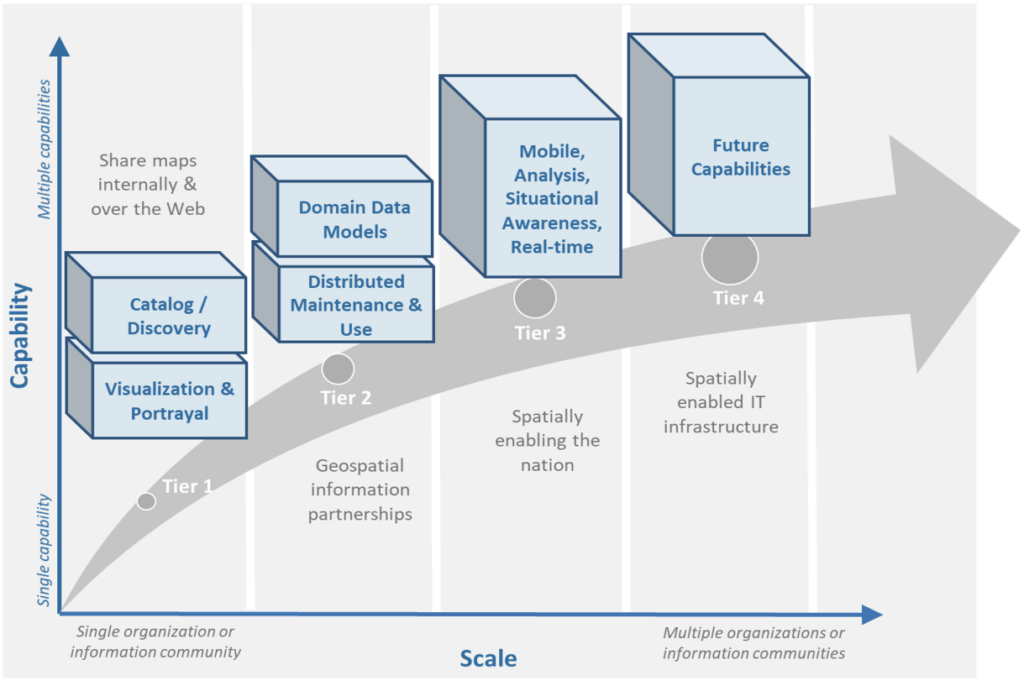

Wrapping up the week, I opened Thursday’s Important Things session with a rapid, 7-minute summary of the entire meeting week. This was followed by introductory remarks from Ryan Ahola of Natural Resources Canada identifying issues with Spatial Data Infrastructures (SDIs) and domains requiring geospatial standardization. Member discussion focused primarily on ensuring that SDI planning and management take into account the larger data ecosystems in which the SDI must exist. This topic then led into a more detailed discussion of how best to communicate to users how they should transition from the legacy OGC Web Services to OGC API Standards, where appropriate. OGC Members can access the presentations and a recording, including the 7-minute overview, on this page in the OGC Portal. Additionally, OGC Members can access session notes on the “Important-Things-2024-06” Etherpad.

The Closing Plenary then featured a keynote from Jong Tae Ahn of the Republic of Korea’s Ministry of Land, Infrastructure and Transport, who outlined the long-term roadmap for spatial information standardization in Korea. The remainder of the session was dedicated to advancing Standardization activities in OGC, with slides and content from a large number of Working Group sessions. OGC Members can access the presentations and a recording on this page in the OGC Portal.

Thank you to our community

As always, it was a pleasure to see our community come together to talk about their recent achievements, learn from each other, and drive innovation and Standards development forward. I sincerely thank our members for investing their time and energy, and for their dedication to making OGC the world’s leading and most comprehensive community of location experts.

The next OGC Member Meeting is scheduled for November 4-8 in Goyang in the Seoul Capital Area of the Republic of Korea. Join members of the OGC Community to learn about the latest happenings at OGC, advance geospatial standards, network with the leaders of geospatial, and see what’s coming next. Registration will open soon on ogcmeet.org. To hear about it when it opens, feel free to subscribe to the OGC Newsletter – a digest of all things OGC, sent every two weeks.

The post A recap of the 129th OGC Member Meeting, Montreal, Canada appeared first on Open Geospatial Consortium.

-


15:00 Andreas Matheus receives OGC’s 2024 Gardels Award

on Open Geospatial Consortium (OGC)

Last night at the 129th Open Geospatial Consortium (OGC) Member Meeting, held in Montreal, Canada, Andreas Matheus was presented with OGC’s prestigious Kenneth D. Gardels Award. The Gardels Award is presented each year to an individual who has made exemplary contributions to OGC’s consensus standards process. The Gardels Award was conceived to memorialize the spirit of a man who dreamt passionately of making the world a better place through open communication and the use of information technology to improve the quality of human life.

Andreas Matheus, Managing Director at Secure Dimensions GmbH, was selected by previous Gardels Award winners as the 2024 recipient because of his persistent efforts to ensure best practices in security and API design in OGC.

A member of the nominating committee commented that Andreas has “been the go-to security expert for almost as long as OGC has existed.” Another committee member noted that Andreas’ “dedication on security and citizen science has been constant” and that he is “fully focused on promoting OGC solutions and other Standards for security.”

“The OGC Board of Directors thanks Andreas for his work as a chair and active member of many OGC Working Groups and for providing his insight and expertise to several OGC Standards and Collaborative Solutions and Innovation (COSI) initiatives,” commented Prashant J. Shukle, Chair of the OGC Board of Directors. “Your tireless voice of expertise on security matters continues to be critical to OGC activities and exemplifies the values associated with the Gardels Award.”

Andreas chairs the OGC Security, Citizen Science, and Blockchain & Distributed Ledgers Domain Working Groups as well as the GeoXACML and OWS Common – Security Standards Working Groups. Andreas is also the principal editor for several OGC Testbed Engineering Reports and both the GeoXACML and OWS Web Services Security Standards.

In all this work, Andreas exemplifies the highest values of OGC, and has demonstrated the principles, humility, and dedication in designing, supporting, and promoting spatial technologies to address the needs of humanity that characterized Kenn Gardels’ career and life.

About the OGC Gardels Award

The Kenneth D. Gardels Award is a gold medallion presented each year by the Board of Directors of the Open Geospatial Consortium, Inc. (OGC) to an individual who has made exemplary contributions to OGC’s consensus standards process. Award nominations are made by members – the prior Gardels Award winners – and approved by the Board of Directors. The Gardels Award was conceived to memorialize the spirit of a man who dreamt passionately of making the world a better place through open communication and the use of information technology to improve the quality of human life.

Kenneth Gardels, a founding member and a director of OGC, coined the phrase “Open GIS.” Kenn died of cancer in 1999 at the age of 44. He was active in popularizing the open source Geographic Information System (GIS) ‘GRASS’, and was a key figure in the Internet community of people who used and developed that software. Kenn was well known in the field of GIS and was involved over the years in many programs related to GIS and the environment. He was a respected GIS consultant to the State of California and to local and federal agencies, and frequently attended GIS conferences around the world.

Kenn is remembered for his principles, courage, and humility, and for his accomplishments in promoting spatial technologies as tools for preserving the environment and serving human needs.

More information on the OGC Gardels Award, including previous winners, can be found at: ogc.org/about-ogc/ogc-awards/gardels-awards/

The post Andreas Matheus receives OGC’s 2024 Gardels Award appeared first on Open Geospatial Consortium.

-

15:00 Standards Enabling Collaboration For Global Challenges

on Open Geospatial Consortium (OGC)

The Open Geospatial Consortium (OGC) is a membership organization dedicated to solving problems faced by people and planet through our shared belief in the power of geography. OGC is one of the world’s largest data and technology consortia and, at 30 years old, one of its longest standing. OGC works with new and established partners and stakeholders to develop and apply accelerated, practical, and implementable solutions to today’s biggest issues, from climate resilience, emergency management, and risk management & insurance, to supply chain logistics, transportation, and health care and beyond.

OGC holds regular member meetings across the globe where geospatial professionals convene to develop standards and advance innovation initiatives led by OGC Members. Most sessions are open to the public and offer valuable opportunities to network with leaders from industry, academia, and government, define future technology trends, and contribute to the open geospatial community.

OGC’s 129th Member Meeting will be held in Montreal, Canada, from June 17–22, 2024. The event kicks off OGC’s 30th anniversary celebrations and carries the theme ‘Standards Enabling Collaboration for Global Challenges.’ Support for the meeting comes from OGC Strategic Member Natural Resources Canada, with additional support from Esri Canada, CAE, Safe Software, and dinner sponsor Bentley Systems.

Eric Loubier, Director General of the Canada Centre for Mapping and Earth Observation at Natural Resources Canada (NRCan) will open the week with a keynote, followed by a 30-year Canadian retrospective by OGC Board Chair Prashant J. Shukle.

“The 129th Member Meeting provides a great opportunity to hear from our incredible Canadian partners and community,” said OGC CEO Peter Rabley. “Some of OGC’s earliest—if not our first—and longest-running supporters have been Canadians and Canadian firms. Our keynote from Eric Loubier and the exciting 30-year Canadian retrospective by Prashant Shukle will serve well to kick off the week’s exciting sessions and discussions.”

“Throughout the 30 years of OGC’s history, Canadians have played a foundational role,” said OGC Board Chair Prashant J. Shukle. “My friend and mentor Dr. Bob Moses, who founded PCI Geomatics, was one of OGC’s first funders and a long-time supporter of OGC. As an emergency room doctor, Bob saw the power of new technologies and data. Critically, he understood that technologies had to work together seamlessly and effectively to really address complex problems.

“Like Bob, many other Canadians instantly saw the powerful role and impact that OGC could have, and I am constantly amazed at their leadership and vision. It is my privilege to honor those Canadians who have gone almost unnoticed here in Canada, but who have fundamentally changed how the world uses technology across so many industries.”

Other highlights of the week will include a Methane Summit, a meeting of the OGC Canada Forum, the popular Future Directions session (this meeting’s topic is AI), as well as an abundance of working group sessions on diverse topics such as marine, climate & disaster resilience, and beyond.

The Methane Summit is organized by Steve Liang, Professor and Rogers IoT Research Chair at the University of Calgary and Founder and CTO of OGC Member SensorUp. Steve is spearheading this summit to tackle the critical global challenge of monitoring and tracking methane emissions. The event will feature speakers from McGill University and Environment and Climate Change Canada (ECCC), who will discuss the challenges and opportunities of data management in methane emissions management. Attendees will also be introduced to the Methane Emissions Modeling Language (MethaneML)—a new tool designed to enhance the accuracy and efficiency of methane emissions tracking & reduction. This summit promises to be a significant step forward in our collective efforts to address climate change through innovative data solutions.

The meeting of the OGC Canada Forum is scheduled for June 17 & 18. The Canada Forum is open to all Canadian organizations, regardless of OGC membership status. The sessions have the aim of facilitating collaboration to address Canada’s geospatial needs through capacity building, innovation, standards, and economic growth. Cameron Wilson, Project Manager at Natural Resources Canada (NRCan), will delve into the history, progress, and future priorities of the forum, highlighting key issues crucial for the Canadian community.

Another highlight of the Forum will be a debate addressing the topic: In an era of ever-increasing data availability, there is a pressing need for digital interoperability to solve today’s biggest problems through rapid innovation. Standards only slow this down and are therefore no longer necessary. Debaters include Ed Parsons, Geospatial Technologist at Google, the aforementioned Steve Liang, Will Cadell, CEO of Sparkgeo, and Bilyana Anicic, President of Aurora Consulting. This session promises to offer diverse perspectives on the role that standards can, should, or won’t play in today’s rapidly evolving geospatial landscape.

This meeting’s Future Directions session, held Tuesday morning, is all about AI, with presentations and a panel from Bentley Systems, GeoRoundtable/IEEE GRSS, makepath, and TerraFrame.

Participation in the 129th OGC Member Meeting is welcomed both in-person and remotely. This event is an exciting opportunity to engage in sessions that celebrate three decades of geospatial collaboration and innovation. Attendees will have the chance to learn from, and network with, leading experts from around the world.

Register now for the 129th OGC Member Meeting to be part of OGC’s continued efforts to advance location data and technology and collaboratively address critical global challenges.

The post Standards Enabling Collaboration For Global Challenges appeared first on Open Geospatial Consortium.

-

15:00 Registrations Open for OGC’s July 2024 Open Standards Code Sprint

on Open Geospatial Consortium (OGC)

The Open Geospatial Consortium (OGC) invites developers and other contributors to the July 2024 Open Standards Code Sprint, to be held from July 10-12 in London and online. Participation is free and open to the public, with travel support funding available to select participants. Sponsorship opportunities are also available.

The Code Sprint is sponsored by OGC Principal Member Google at the Silver Level, with additional Strategic Member support from Natural Resources Canada (NRCan).

This will be a collaborative and inclusive event to support the development of open Standards and applications that implement those Standards. All OGC Standards are in scope for this Code Sprint, including OGC API Standards. The Sprint will feature three special tracks on Data Quality & Artificial Intelligence, Validators, and the Map Markup Language (MapML).

OGC Code Sprints experiment with emerging ideas in the context of geospatial Standards and help improve interoperability of existing Standards by experimenting with new extensions or profiles. They are also used for building proofs-of-concept to support standards development activities and the enhancement of software products.

Non-coding activities such as testing, working on documentation, or reporting issues are welcome during the Code Sprint. OGC Sprints also provide an opportunity to onboard developers that are new to OGC Standards, through the sprints’ mentor streams.

Organizations are invited to sponsor the Code Sprint. A range of packages are available offering different opportunities for organizations to support the geospatial development community while promoting their products or services. Visit the Event Sponsorship page for more information.

A one-hour pre-event webinar will take place at 14:00 BST (UTC+1) on June 13. The webinar will outline the scope of work for the Code Sprint and provide an overview of the specifications that will be its focus. The pre-event webinar will take place on OGC’s Discord server.

Travel Support Funding is available for selected participants. Participants interested in receiving travel support funding should indicate their interest on the registration form. Any participant applying for funding will need to submit their registration form by June 30. Applicants will be notified within 2 weeks of their application whether travel support will be available to them or not.

The code sprint will begin with an onboarding session at 09:00 (UTC+1) on July 10, and will end at 17:00 (UTC+1) on July 12. Registration for in-person participation closes at 17:00 (UTC+1) on July 3. Registration for remote participation will remain open throughout the Code Sprint.

Registration is available on the July 2024 Code Sprint website. To learn more about future and previous OGC code sprints, visit the OGC Developer Events Wiki, the OGC Code Sprints Website, or join OGC’s Discord server.

The post Registrations Open for OGC’s July 2024 Open Standards Code Sprint appeared first on Open Geospatial Consortium.

-

10:45 OGC announces Peter Rabley as new CEO

on Open Geospatial Consortium (OGC)

The Open Geospatial Consortium (OGC) is excited to announce the appointment of Peter Rabley as OGC’s Chief Executive Officer (CEO). The announcement was made last night at the Geospatial World Forum 2024 in Rotterdam, The Netherlands.

Peter brings to OGC a wealth of experience from the private, governmental, and not-for-profit sectors, including in venture financing, and in developing and implementing scale-up strategies for international not-for-profits.

“I am excited and honored to be appointed as OGC’s CEO,” said Peter Rabley. “OGC is well positioned to build on its incredible 30-year legacy by responding to the ever-increasing rate of change seen in technology and society alike. Opportunities and challenges have never been more apparent and I see tremendous potential for growth in new markets around the globe. This is the time of geospatial.”

“I am particularly excited to have Peter leading OGC,” commented Prashant Shukle, Chair of the OGC Board of Directors. “Peter has a proven track record in the public, private, and not-for-profit areas of the geospatial industry, and closely aligns with what our key stakeholders and partners were telling us they wanted in a CEO. The OGC Board took the time to get this selection right, and we are very excited about how Peter fits with our plans for a reinvigorated and repositioned OGC.”

Peter’s appointment to CEO is timely, with it coming during OGC’s 30th anniversary year—a time when OGC is taking stock of its successes while modernizing to respond to a global economy that increasingly uses geospatial technologies across so many domains and applications.

The OGC is one of the world’s largest data and technology consortia and one of its longest standing. Under Peter’s leadership, OGC will work with new and existing partners and stakeholders to bring accelerated, practical, and implementable solutions to our community.

About Peter Rabley

Peter Rabley is a technology executive, investor and geographer. He has spent the last thirty years creating and operating geospatial businesses that map the earth to improve lives and protect the resources of our planet. Prior to OGC, Peter Rabley co-founded PLACE, a non-profit data trust that makes mapping more accessible and affordable so that decision makers have the data they need to improve the places around them. At PLACE, Peter was responsible for strategy and managing the organization’s investment portfolio. Before PLACE, Peter was a venture partner at leading impact investing firm Omidyar Network, where he led the Property Rights initiative.

Peter has built various businesses including ILS, an enterprise software firm that provided property taxation, registration and mapping solutions to governments globally. After its acquisition by Thomson Reuters, he became Vice President for Global Business Development and Strategy at Thomson Reuters.

Peter remains on the board of PLACE. He also serves on the boards of the Radiant Earth Foundation, Meridia, and Microbuild. He is a Fellow of the Royal Geographical Society and the Royal Society of Arts. Peter graduated from the University of Miami with a B.A. in Geography and Economics and an M.A. in Geography.

Peter Rabley (L) on stage at Geospatial World Forum 2024 with OGC Board Members (L-R) Zaffar Sadiq Mohamed-Ghouse, Rob van de Velde, Chair Prashant J. Shukle, Frank Suykens, and Deborah Davis.

The post OGC announces Peter Rabley as new CEO appeared first on Open Geospatial Consortium.

-

23:32 A recap of the 128th OGC Member Meeting, Delft, The Netherlands

on Open Geospatial Consortium (OGC)

Two records were broken in Delft this March: not only was OGC’s 128th Member Meeting our biggest ever – with over 300 representatives from industry, government, and academia attending in-person (and over 100 virtual) – but the meeting also saw a record number of motions for votes on new Standards, highlighting the very productive activities undertaken by the OGC community in recent months.

Held in Delft, Netherlands from 25-28 March, 2024, OGC’s 128th Member Meeting brought together global standards leaders and geospatial experts looking to learn about the latest happenings at OGC, advance geospatial standards, and see what’s coming next. Hosted by TU Delft and sponsored by Geonovum with support from GeoCat and digiGO, the overarching theme for the meeting was GeoBIM for the Built Environment.

As well as the many Standards and Domain Working Group meetings, the 128th Member Meeting also included a Land Administration Special Session, a Geospatial Reporting Indicators ad hoc, a Data Requirements ad hoc, the OGC Europe Forum, an Observational Data Special Session, two Built Environment sessions subtitled The Future of LandInfra and What Urban Digital Twins Mean to OGC, a public GeoBIM Summit, a Quantum Computing ad hoc, and the usual Monday evening reception & networking session and Wednesday night dinner.

The dinner was held at the historic Museum Prinsenhof Delft, former residence of William of Orange, and – grimly – where he was assassinated. On a lighter note, during the dinner Ecere Corporation, represented by Jérôme Jacovella-St-Louis, received an OGC Community Impact Award for their tireless work in driving OGC Standards forward. Congratulations Ecere and Jérôme!

Attendees of the Wednesday night dinner at OGC’s 128th Member Meeting.

Also during the meeting, members of the OGC GeoPose and POI SWGs, in collaboration with the Open AR Cloud team and XR Masters and with support from Geonovum, demonstrated interoperable AR browsers. The two AR browsers (MyGeoVerse, a native app by XR Masters, and spARcl, a WebXR-based browser) were able to share and see the same POIs while being geolocalized using the Augmented City Visual Positioning Service (VPS). The VPS receives requests from, and returns ‘GeoPoses’ to, any OGC-compliant app that has implemented the GeoPose Standard. The project details are described on this page and captured in this two-minute video on YouTube.

In tandem with the theme of GeoBIM, two other technology areas stood out during the meetings: Data Spaces, and the transition from OGC Web Services to OGC APIs.

Data Spaces

There has lately been a dramatic increase in the number of discussions on Data Spaces, both in and outside of OGC, as the concept becomes more commonplace in European projects and policy. As per the European Union Data Spaces Support Centre, a Data Space is defined as “an infrastructure that enables data transactions between different data ecosystem parties based on the governance framework of that data space.” In other words, the “space” in a Data Space defines a context for which the data are useful: a container, governance, and access. Given the ubiquitous use of geospatial data, directly and indirectly, an understanding of the Data Space ecosystem may be key to participating in emerging data infrastructures.

OGC Web Services to OGC API transition

The full set of capabilities offered by the OGC Web Services Standards (e.g., Web Map Service (WMS), Web Feature Service (WFS), etc.) is now reflected in OGC API Standards that have either been published, are approaching final approval vote, or are being actively developed. Over the coming months, OGC will establish a process and resources to aid in the transition to the more modern Standards, while ensuring that the user community recognizes that the legacy web services are still functional and valuable.

Friso Penninga, CEO of Geonovum, opens the week with an introduction to Geonovum and Delft.

Opening Session

The week opened with a welcome from Friso Penninga, CEO of Geonovum, who outlined not only the work of Geonovum, but also the culture of Delft. Following this, Prof. Jantien Stoter of 3Dgeoinfo, TU Delft highlighted activities at TU Delft in the meeting theme area and previewed Wednesday’s GeoBIM Summit. Pieter van Teeffelen of digiGO then presented a Dutch platform for digital collaboration in the built environment. Finally, Jeroen Ticheler of GeoCat explained challenges in social welfare and the work of the GeoNetwork to address these challenges.

Next, it was time to settle a long-running dispute in OGC: who has the better chocolate, Switzerland or Belgium? After a blind taste-test, the audience voted Belgium as the winner. Is the debate now settled? Only time – and perhaps our taste buds – shall tell.

The chocolate contest was followed by a demonstration of the Netherlands Publieke Dienstverlening Op de Kaart (PDOK) geodata platform, which integrates a number of published and draft OGC API Standards.

Special tribute was then paid to two instrumental figures in OGC’s history who passed away in November 2023: Jeff de la Beaujardiere (OGC Gardels and Lifetime Achievement Awards recipient) and Jeff Burnett (former OGC Chief Financial Officer).

Prof. Jantien Stoter of 3Dgeoinfo, TU Delft, offered a preview of Wednesday’s GeoBIM Summit during the Opening Session.

Today’s Innovation, Tomorrow’s Technology, and Future Directions

As always, the popular Today’s Innovation, Tomorrow’s Technology, and Future Directions session runs unopposed on the schedule so that all meeting participants can attend. At this Member Meeting, the session was presented by Marie-Françoise Voidrot and Piotr Zaborowski of OGC’s Collaborative Solutions and Innovation Program (COSI). The session focused on several projects in which OGC is participating that are developing capabilities of use to all members. Many of these projects are sponsored by the European Union to advance interoperable science and data management and are supporting OGC resources such as the OGC RAINBOW registry, GeoBIM integration, and learning and developer resources, such as the Location Innovation Academy.

OGC Members can access the presentations and a recording from the session on this page in the OGC Portal. An overview of some of the European projects highlighted during the session, entitled European Innovation, Global Impact, was published on the OGC Blog last year.

Piotr Zaborowski, Senior Director of OGC’s Collaborative Solutions and Innovation Program, presents some of the European Union funded projects in which OGC is involved.

Meeting Special Sessions

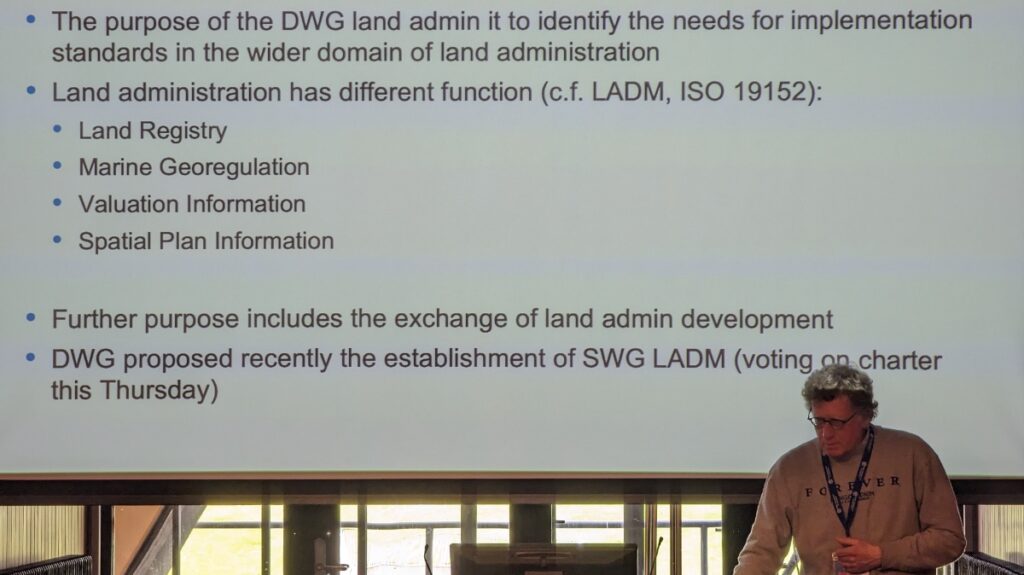

The Land Administration Special Session was dedicated to updating OGC membership on the progress of Land Administration activities amongst members and in ISO/TC 211, where the Land Administration Domain Model (LADM) is managed. A new Land Administration Domain Model Standards Working Group (SWG) is being considered to create Part 6 of LADM: the encoding of Parts 1-5 in one or more formats. OGC Members can access the presentations and a recording here (Session 1) and here (Session 2) in the OGC Portal.

Geospatial Reporting Indicators have been discussed in the OGC Climate Resilience Domain Working Group (DWG) in the context of Land Degradation. However, the means to exchange indicator information reporting the degree of land degradation (or influencing factors) is not standardized in the community. OGC members are therefore proposing a new SWG to develop such standardized reporting indicators, possibly as extended functionality of work in Analysis Ready Data (ARD). OGC Members can access the presentations and a recording on this page in the OGC Portal.

An ad hoc session on Geospatial Data Requirements was held to assess the chartering of a new SWG. The purpose of this proposed SWG is to develop a Standard for describing what geospatial data a project or task needs to collect, store, analyze, and present to achieve the project objectives. OGC Members can access the presentations and a recording on this page in the OGC Portal.

The OGC Europe Forum met on Tuesday with presentations focused on Data Spaces: understanding the European Commission principles of data spaces and how OGC members can engage in the topic. The Forum featured speakers from the International Data Spaces Association (IDSA), ISO/TC211, and the European Commission’s Joint Research Centre (JRC) as well as an open panel discussion. OGC Members can access the presentations and a recording on this page in the OGC Portal.

Peter van Oosterom, from TU Delft and the co-chair of the OGC Land Administration DWG, presents during the Land Administration Special Session.

The Observational Data Special Session was organized to identify the various OGC Working Groups that are building Standards or assessing the collection & use of observational data. The session clarified the commonalities between these WGs so they can be considered in future development of observational data Standards. OGC Members can access the presentations and a recording on this page in the OGC Portal.

Two sessions were held to discuss and prioritize the next steps for OGC activities concerning standardization in the Built Environment. The first of these sessions focused on the future development of the LandInfra suite of Standards: whether priority should be given to new encodings, new Parts, or other tasks. OGC Members can access the presentations and a recording on this page in the OGC Portal.

The second Built Environment session considered the topic of what Urban Digital Twins mean to OGC. Presentations on human engagement as sensors and the place for digital twins in data spaces were followed by a preview of the OGC Urban Digital Twins Discussion Paper and a panel of OGC specialists to discuss where Urban Digital Twins are most important in OGC. OGC Members can access the presentations and a recording on this page in the OGC Portal.

The public GeoBIM Summit emphasized the theme of this meeting: there is a great amount of continuity between the geospatial and Building Information Modeling (BIM) communities and their respective technologies and practices. Numerous rapid presentations highlighted activities using OGC and BIM Standards and use-cases for interoperability. OGC Members can access the presentations and a recording on this page in the OGC Portal. Presentations and recordings will soon be made available to the public on the GeoBIM Summit event page.

The Quantum Computing ad hoc session included open discussion from OGC Members working with or researching the application of quantum computing technology on geospatial issues. Future ad hoc meetings are planned to move towards the development of an OGC Quantum Computing Working Group.

Closing Plenary

The Closing Plenary normally includes two sessions: an open discussion of Important Things suggested by members, followed by motions, votes, and presentations from members to advance the work of the Consortium. However, with the Member Meeting being compressed down to four days (as Friday was a regional holiday), the Important Things discussion was not held. As such, I opened the Closing Plenary with a rapid, 7-minute summary of the entire meeting week, which included slides and content from a large number of the week’s Working Group sessions, available to OGC Members on this page in the OGC Portal. The Closing Plenary included a record number of motions for votes on new Standards, highlighting the very productive activities of OGC members in recent months.

Thank you

Our 128th Member Meeting was our biggest yet. As always, it’s a pleasure to see hundreds of OGC Members get together to discuss, collaborate, and drive technology and standards development forward. Once again, a sincere thank you to our members for investing their time and energy, as well as their dedication to making OGC the world’s leading and most comprehensive community of location experts.

Be sure to join us at Centre Mont-Royal in Montreal, Canada, June 17-21, 2024, for our 129th Member Meeting. Registration is open now on ogcmeet.org. Sponsorship opportunities remain available – contact OGC for more info.

To receive a digest of the latest OGC news in your inbox every two weeks, be sure to subscribe to the OGC Newsletter.

The post A recap of the 128th OGC Member Meeting, Delft, The Netherlands appeared first on Open Geospatial Consortium.

-

11:43 Ecere Corporation receives OGC Community Impact Award

on Open Geospatial Consortium (OGC)

The Open Geospatial Consortium (OGC) has announced Ecere Corporation, represented by Jérôme Jacovella-St-Louis, as the latest recipient of the OGC Community Impact Award. The award was presented last night at the Executive and VIP Dinner of the 128th OGC Member Meeting in Delft, The Netherlands.

The Community Impact Award is given by OGC to highlight and recognize those members of the OGC Community who, through their exceptional leadership, volunteerism, collaboration, and investment, have had a positive impact on the wider geospatial community.

Gobe Hobona, OGC Director of Product Management, Standards, commented: “Jérôme and colleagues from Ecere have been active participants of most OGC Code Sprints over the past five years, as well as contributors to several activities of Standards Working Groups. Their willingness to assist other participants during Code Sprints has helped to get many participants up to speed and to boost the work of the Code Sprints’ community of experts.

“Jérôme has also been a steadfast advocate of OGC Standards for symbology and portrayal, as demonstrated by his engagement of the cartographic community on the next generation of OGC web mapping and portrayal Standards. This engagement has facilitated the collaboration between the OGC and its partner communities in the cartographic domain.”

“Jérôme has been a key enabler for the implementation of OGC Standards,” added Joana Simoes, OGC Developer Relations. “Besides providing extremely complete implementations in GNOSIS, which can be used by others as a reference, he has always been available to help other projects – both online through GitHub and in-person during Code Sprints. Many OSGeo projects have improved their standards support thanks to GitHub issues filed or answered by Jérôme.”

Notably, Jérôme is also the Co-Chair of five OGC Standards Working Groups: OGC API – Common SWG, Coverages SWG, Styles & Symbology Encoding SWG, Discrete Global Grid Systems SWG, and OGC API – Tiles SWG. In addition to this, Jérôme is also Co-Editor of the OGC API – Maps, OGC API – Processes – Part 3: Workflows, OGC CDB 2.0 – Part 2: GeoPackage Data Store, and OGC API – 3D GeoVolumes Standards.

Ecere Corporation has made, and continues to make, an impact within the OGC Community through their active leadership, collaboration, and engagement across numerous OGC Code Sprints, Collaborative Solutions and Innovation Program (COSI) Initiatives, Working Groups, and Member Meetings.

The OGC Community Impact award highlights the importance of collaboration, volunteering time and energy, advancing technologies and Standards, raising awareness, and helping solve critical issues across the geospatial community. Jérôme and Ecere Corporation exemplify all of these qualities in their tireless work during Code Sprints and across so many Standards Working Groups.

The post Ecere Corporation receives OGC Community Impact Award appeared first on Open Geospatial Consortium.

-

14:00 Registration opens for OGC Innovation Days Europe 2024

on Open Geospatial Consortium (OGC)

The Open Geospatial Consortium (OGC) is excited to announce that registration is open for OGC Innovation Days Europe. Held in conjunction with FOSS4G Europe 2024, on July 1-2, in Tartu, Estonia, the event will focus on themes of Climate and Disaster Resilience, One Health, and the corresponding Data Spaces. Registration is available here.

OGC Innovation Days Europe seeks to address stakeholders’ and policymakers’ need for digital solutions that can meet their requirements efficiently and in a user-friendly way. To this end, the event will bring internationally leading software development and data management experts together with non-technical experts – such as urban planners, policy makers, economists, and other beneficiaries of geospatial information – to exchange information and learn from each other. The stakeholders’ needs will be discussed and understood in depth while newly developed prototypes will be presented.

OGC is co-organizing Innovation Days Europe with the General Authority for Survey and Geospatial Information (GEOSA, Saudi Arabia) and FOSS4G-Europe.

The European IT landscape is changing rapidly. Several initiatives are underway, such as developing Data Spaces and Digital Twins, supporting the Green Deal, or otherwise trying to integrate the largely heterogeneous IT landscape. What do these mean for the leading challenges of our century? How can these predominantly technologically oriented efforts help with climate change and disaster resilience? Do they support Land Degradation Neutrality?

OGC Innovation Days Europe provides a platform to discuss how current technical efforts can be combined effectively with the necessary governance and policy aspects. What decision processes do we need? What agreements are required for efficient decision processes, or for homogeneous reporting models on topics such as Climate Change or Land Degradation Neutrality?

Thematic focus: Climate and Disaster Resilience, One Health, and Data Spaces

Achieving climate and disaster resilience and sustainability requires reliable location information on the environment, land, and climate. Generating this information in a form tailored to users’ needs is challenging. It requires highly optimized spatial data infrastructures and climate resilience information systems, operated within a targeted policy framework and driven by an efficient policy model. Interoperable data pipelines and well-organized data spaces with their data assessment applications, combined with analysis, visualization, and reporting tools, are required to gain the knowledge needed to roll out climate strategies and actions.

OGC Innovation Days Europe will foster a dialogue between technical experts, decision-makers, and policy experts, with a focus on climate and disaster resilience, One Health, the role of data spaces, and the need for modernized knowledge exchange and analysis environments.

OGC Innovation Days Europe supports the shift in IT solutions from being purely technology-oriented to a more holistic approach that better accounts for the various needs of the stakeholders. The event will identify these needs and thus make an essential contribution to improving the direction of future technological developments, supporting operational setups that are driven by efficient governance models and that operate within a solution-oriented policy framework.

OGC Innovation Days Europe 2024 is sponsored by OGC, the General Authority for Survey and Geospatial Information (GEOSA, Saudi Arabia), and the European Commission through the Horizon projects CLINT (GA-101003876), AD4GD (GA-101061001), USAGE (GA-101059950), Iliad (GA-101037643), EuroGEOSec (GA-101134335), CLIMOS (GA-101057690), and other projects of the Climate-Health Cluster.

OGC Innovation Days Europe will run in conjunction with FOSS4G Europe 2024 from July 1-2, in Tartu, Estonia. Learn more and register on the FOSS4G Europe 2024 website.

The post Registration opens for OGC Innovation Days Europe 2024 appeared first on Open Geospatial Consortium.

-

11:13 Geo-BIM for the Built Environment

on Open Geospatial Consortium (OGC)

OGC holds three Member Meetings each year (one each in Europe, the Americas, and Asia Pacific), where OGC’s Standards Working Groups (SWGs), Domain Working Groups (DWGs), Members, and other geospatial experts meet to progress Standards, provide feedback on initiatives being run by the OGC Collaborative Solutions and Innovation (COSI) Program, hear about the latest happenings at OGC, network with the leaders of the geospatial community, and see what’s coming next. The meetings are open to the public, though some sessions are closed, and they are a great way to get to know, and get involved with, the OGC Community.

The next OGC Member Meeting, OGC’s 128th, will be held at TU Delft on March 25-28, 2024. Fittingly for TU Delft and the Netherlands, the theme of the meeting will be ‘Geo-BIM For the Built Environment.’ Meeting sponsorship is generously provided by TU Delft and Geonovum, with support from GeoCAT and digiGO. In the lead-up to the event, Geonovum produced a short video highlighting what to expect in Delft.

“In many respects, the Netherlands is the world leader in the use of advanced integration of building data and detailed urban mapping, as well as the use of Open Standards and Open APIs to interface with those data to provide citizen services,” commented Scott Simmons, OGC’s Chief Standards Officer.

“More specifically, TU Delft is a world-leading research institute in Geo-BIM integration, while Geonovum, another meeting sponsor, has done so much to promote the effective use of Open Standards in government & e-government, including documenting proof that Open Standards make things work better, faster, and cheaper.”

Under the theme, the OGC Member Meeting will include several sessions related to the built environment and the integration of Geospatial and Building Information Models (BIM). Such sessions will include: a Geo-BIM Summit that non-members are encouraged to attend; a Built Environment Joint Session; and a Land Administration Special Session.

On top of these, there will be a meeting of the OGC Europe Forum, sessions on Geospatial Reporting Indicators and Observational Data, the ‘Today’s Innovations, Tomorrow’s Technologies’ Future Directions Session, networking opportunities, and the usual host of OGC Working Group meetings on topics ranging from APIs to agriculture, and from the sea floor to space. Most sessions are open to the public, with special encouragement for non-OGC-members to attend the Geo-BIM Summit and any of the Domain Working Group meetings. As always, the opening session will consist of presentations from local organizations that showcase the world-leading geospatial technologies found in the Netherlands.

Dr. Rune Floberghagen, Head of the Science, Applications and Climate Department in the Directorate for Earth Observation Programmes, ESA, was one of the local experts that presented at the opening session of the 125th Member Meeting in Frascati, Italy.

Geo-BIM Summit

The Geo-BIM Summit will run on Wednesday afternoon and will focus on the integration of geographic data and Building Information Models (BIM).

“In the Building Information Modeling (BIM) world, geographical data about the environment of the construction is becoming increasingly important,” said Prof. Dr. Jantien Stoter, Chair of the OGC 3D Information Management DWG, and part of the organizing committee for the Geo-BIM session. “Similarly, in the Geospatial domain, the need for detailed information about buildings is also growing. There are many initiatives to develop solutions that better facilitate this integration, including projects that realize this integration for a specific use case; automated conversion of data between the two domains; methods to georeference BIM files in a standardized and straightforward way; and profiles for standards that establish the integration for specific use-cases. The Geo-BIM session will present such initiatives in order to address the question of ‘what more is needed to improve the integration?’ This will further shape the OGC & buildingSMART Road Map on integration of both domains, and contribute to the best practices currently under development.”

Built Environment Joint Session

Two sessions on the Built Environment will run on Wednesday morning. The first, entitled “The Future of Land Infra,” will discuss what more needs to be done to standardize built infrastructure data, whether by updating, improving, or adding to the OGC Standards Portfolio. It will include input from several OGC activities: the Integrated Digital Built Environment (IDBE) subcommittee, the LandAdmin DWG, the Model for Underground Data Definition and Integration (MUDDI) SWG, and the Geotech Interoperability Experiment, as well as the wider OGC Collaborative Solutions and Innovation (COSI) Program.

The second session, entitled “What Urban Digital Twins mean to OGC,” will focus on the wider work that OGC is doing in this space and how the Digital Twins DWG can help harmonize it. The session will also include a cross-working-group discussion on the in-progress OGC Urban Digital Twins Position Paper, as well as a presentation by Binyu Lei, researcher at the Urban Analytics Lab at the National University of Singapore, entitled “Humans as Sensors in Urban Digital Twins.”

Attendees at the 123rd Member Meeting in Madrid, Spain.

Land Admin Special Session

Following the opening session and keynotes on Monday is a Land Admin special session run by the OGC Land Administration (Land Admin) DWG.

“Worldwide, effective, and efficient land administration is an ongoing concern, inhibiting economic growth and property tenure,” commented Eva-Maria Unger, co-chair of the OGC Land Admin DWG. “Only a limited number of countries globally have a mature land information system.”

The Land Admin Special Session will also be the first meeting of the soon-to-be-formed OGC Land Administration Domain Model (LADM) SWG.

“This is a fairly significant session as we’re going to use it to kick off an effort to create an encoding Standard for Land Administration data that’s globally relevant,” said Scott Simmons. “It’s great to be holding it in Delft because Kadaster, the Netherlands’ Cadaster, Land Registry and Mapping Agency, is one of the world leaders in providing expertise on use of Land Administration and Land Administration modernization across not just Europe but for developing nations around the world. So it’s an opportunity to bring together their expertise along with representatives from all levels of sophistication of land management around the world.”

Peter van Oosterom added: “The new SWG will work on the Land Administration Domain Model (LADM) Implementation Standard in OGC, which will be included as part 6 of the existing ISO 19152 series of Standards. This sounds modest, but is actually a big step forward. The LADM Standards today are only Conceptual Models, which means that countries implementing them have to find their own solutions for developing their country profile, technical encodings, code list values, processes/workflows, etc.

“An open LADM Implementation Standard will not only reduce implementation costs by providing free, proven technical encodings, but would also enable solution vendors to provide commercial off-the-shelf software that works across multiple countries/jurisdictions, rather than having to adapt it to each unique approach.”

Networking, social, and other sessions

In addition to these sessions, the 128th OGC Member Meeting will also see meetings of over 50 different working groups or committees covering almost the full breadth of geospatial applications and domains, as well as several social and networking events.

Registration remains open, as do sponsorship opportunities. Non-members are encouraged to attend. To see the full agenda, visit ogcmeet.org.

As well as the host of technical content and informative presentations, OGC Member Meetings also include networking events, such as the Wednesday night dinner.

The post Geo-BIM for the Built Environment appeared first on Open Geospatial Consortium.

-

12:58 Enterprise Products: A Collaborative Journey with OGC

on Open Geospatial Consortium (OGC)

In the ever-evolving realm of energy infrastructure, the success of an organization is often determined by its ability to adapt, innovate, and collaborate effectively. Enterprise Products Partners, L.P. has exemplified these qualities through its ongoing relationship with the Open Geospatial Consortium (OGC).

Under the leadership of Gary Hoover, Enterprise Products’ team of geospatial technology experts has helped develop an open, international geospatial Standard for the pipeline industry, PipelineML, and deployed disruptive open-source geospatial technology.

This work has helped Enterprise Products optimize the management of its extensive 50,000-mile pipeline network and supports the larger goal of safe and sustainable energy infrastructure within the oil and gas sector.

Pioneering the PipelineML Standard

Enterprise Products became a voting member of OGC in 2013. Shortly after, Data Architect John Tisdale rolled up his sleeves and got to work, learning OGC’s consensus-based Standards process and serving as a charter member and co-chair of the PipelineML Standards Working Group (SWG). In 2019, the PipelineML Conceptual and Encoding Model Standard was approved by the OGC Membership, making it an official OGC Standard.

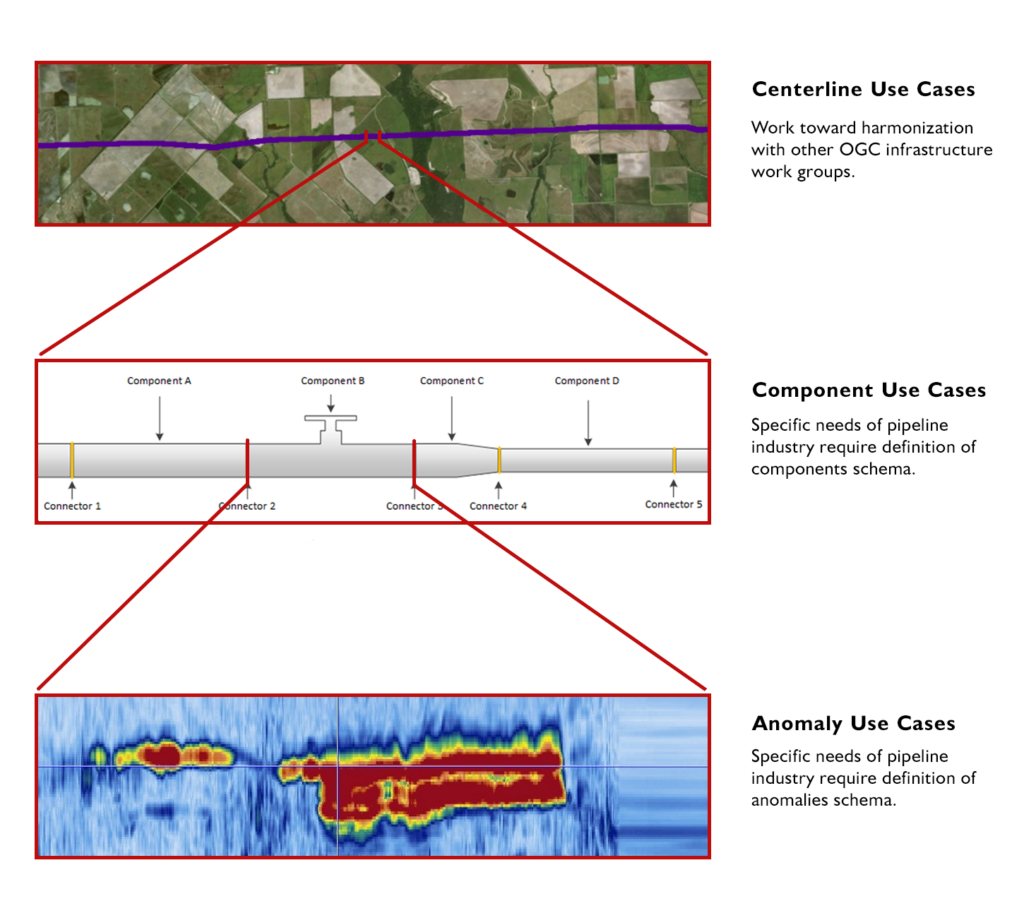

The PipelineML Standard, collaboratively developed by Enterprise Products and contributors from across the US, Canada, Belgium, Norway, the Netherlands, the UK, Germany, Australia, Brazil, and Korea, defines concepts that support the interoperable interchange of data related to oil and gas pipeline systems. PipelineML addresses two critical business use cases specific to the pipeline industry: new construction surveys and pipeline rehabilitation.