You can read the post on the La Minute blog for more information about RSS!

Channels

6111 items (1845 unread) in 50 channels

In the press (1664 unread)

- Cybergeo (1603 unread)
- Mappemonde (60 unread)
- Dans les algorithmes (1 unread)

From the software vendors (24 unread)

- Toute l’actualité des Geoservices de l'IGN (15 unread)
- arcOpole - Actualités du Programme
- arcOrama (9 unread)
- Neogeo

French-speaking geomatics web (110 unread)

- Géoblogs (GeoRezo.net) (5 unread)
- UrbaLine (le blog d'Aline sur l'urba, la géomatique, et l'habitat)
- Séries temporelles (CESBIO) (2 unread)
- Datafoncier, données pour les territoires (Cerema)
- Cartes et figures du monde
- SIGEA: actualités des SIG pour l'enseignement agricole
- Data and GIS tips
- ReLucBlog
- L'Atelier de Cartographie
- My Geomatic
- archeomatic (le blog d'un archéologue à l’INRAP)
- Cartographies numériques
- Carnet (neo)cartographique
- GEOMATIQUE
- Évènements – Afigéo (12 unread)
- Afigéo (12 unread)
- Geotribu (50 unread)
- Conseil national de l'information géolocalisée (9 unread)
- Icem7
- Makina Corpus (1 unread)
- Oslandia (1 unread)
- CartONG (2 unread)
- GEOMATICK (6 unread)
- Geomatys (3 unread)
- Les Cafés Géo (1 unread)
- L'Agenda du Libre (3 unread)
- Conseil national de l'information géolocalisée - Actualités (3 unread)

English-speaking geomatics (35 unread)

- All Points Blog
- Directions Media - Podcasts
- Navx
- James Fee GIS Blog
- Maps Mania (19 unread)
- Open Geospatial Consortium (OGC)
- Planet OSGeo (16 unread)

English-speaking geomatics

-

12:42 GeoTools Team: GeoTools 28.4 Released

on Planet OSGeo

The GeoTools team is pleased to share the availability of GeoTools 28.4: geotools-28.4-bin.zip, geotools-28.4-doc.zip, geotools-28.4-userguide.zip and geotools-28.4-project.zip. This release is also available from the OSGeo Maven Repository and is made in conjunction with GeoServer 2.22.4.

Release notes - GeoTools - 28.4

Bug:

- GEOT-7266 WMTSCapabilities throws NPE for missing title

- GEOT-7345 WFS …

-

10:47 gvSIG Team: The European Union moves forward with the regulation of Artificial Intelligence

on Planet OSGeo

Artificial Intelligence (AI) is an innovative technology with the potential to transform various industries and improve people's lives. In its effort to regulate this technology and ensure its responsible use, the European Union has proposed the first EU regulatory framework for AI, as part of its digital strategy.

This regulation not only has general implications but also affects specific fields such as geomatics. In this context, at gvSIG we are analysing different possibilities for integrating AI technologies into the gvSIG Suite.

The combination of geomatics and AI promises notable advances in data-driven decision-making, land management and spatial analysis.

The regulation of AI in the EU sets a worldwide precedent and shows the European Union's commitment to leading the development and responsible use of this technology. This regulation also applies to projects such as gvSIG. The future is already here.

More information: [https:]]

-

10:33 gvSIG Team: The European Union advances in the regulation of Artificial Intelligence

on Planet OSGeo

Artificial Intelligence (AI) is an innovative technology with the potential to transform various industries and improve people’s lives. In its quest to regulate this technology and ensure its responsible use, the European Union has proposed the first regulatory framework for AI in the EU as part of its digital strategy.

This regulation not only has general implications but also affects specific fields such as geomatics. In this context, at gvSIG, we are exploring different possibilities to integrate AI technologies into the gvSIG Suite. The combination of geomatics and AI promises remarkable advancements in data-driven decision-making, land management, and spatial analysis.

The regulation of AI in the EU sets a global precedent and demonstrates the European Union’s commitment to leading the development and responsible use of this technology. This regulation also applies to projects like gvSIG. The future is already here.

More information: [https:]]

-

4:00 GeoServer Team: GeoServer 2.22.4 Release

on Planet OSGeo

The GeoServer 2.22.4 release is now available with downloads (bin, war, windows), along with docs and extensions.

This is a maintenance release of GeoServer providing existing installations with minor updates and bug fixes.

GeoServer 2.22.4 is made in conjunction with GeoTools 28.4.

Thanks to Peter Smythe (AfriGIS) and Jody Garnett (GeoCat) for making this release. This is Peter’s first time making a GeoServer release and we would like to thank him for volunteering.

Release notes

New Feature:

- GEOS-10949 Control remote resources accessed by GeoServer

Improvement:

- GEOS-10973 DWITHIN delegation to mongoDB

Bug:

- GEOS-8162 CSV Data store does not support relative store paths

- GEOS-10906 Authentication not sent if connection pooling activated

- GEOS-10936 YSLD and OGC API modules are incompatible

- GEOS-10969 Empty CQL_FILTER-parameter should be ignored

- GEOS-10975 JMS clustering reports error about ReferencedEnvelope type not being whitelisted in XStream

- GEOS-10980 CSS extension lacks ASM JARs as of 2.23.0, stops rendering layer when style references a file

- GEOS-10981 Slow CSW GetRecords requests with JDBC Configuration

- GEOS-10993 Disabled resources can cause incorrect CSW GetRecords response

- GEOS-10994 OOM due to too many dimensions when range requested

- GEOS-10998 LayerGroupContainmentCache is being rebuilt on each ApplicationEvent

- GEOS-11015 geopackage wfs output builds up tmp files over time

- GEOS-11024 metadata: add datetime field type to feature catalog

Task:

- GEOS-10987 Bump xalan:xalan and xalan:serializer from 2.7.2 to 2.7.3

- GEOS-11008 Update sqlite-jdbc from 3.34.0 to 3.41.2.2

- GEOS-11010 Upgrade guava from 30.1 to 32.0.0

- GEOS-11011 Upgrade postgresql from 42.4.3 to 42.6.0

- GEOS-11012 Upgrade commons-collections4 from 4.2 to 4.4

- GEOS-11018 Upgrade commons-lang3 from 3.8.1 to 3.12.0

- GEOS-11020 Add test scope to mockito-core dependency

Sub-task:

- GEOS-10989 Update spring.version from 5.2.23.RELEASE to 5.2.24.RELEASE

For the complete list see 2.22.4 release notes.

About GeoServer 2.22 Series

Additional information on the GeoServer 2.22 series:

- GeoServer 2.22 User Manual

- Update Instructions

- Metadata extension

- CSW ISO Metadata extension

- State of GeoServer (FOSS4G Presentation)

- GeoServer Beginner Workshop (FOSS4G Workshop)

- Welcome page (User Guide)

Release notes: (2.22.4 | 2.22.3 | 2.22.2 | 2.22.1 | 2.22.0 | 2.22-RC | 2.22-M0)

-

1:12 GRASS GIS: Developing and updating GRASS GIS addons

on Planet OSGeo

The r.mblend experience

In 2017, I had the opportunity to implement a DEM blending algorithm that had been theorised earlier by my colleague João Leitão. It was somewhat natural to develop it as a GRASS add-on, since I have long relied on GRASS for map algebra and other geospatial computations. That is how the r.mblend add-on was born. Five years then passed without my committing anything further to r.mblend. In the meantime, many important things changed: GRASS version 8 was released and code management moved from SVN to git/GitHub.

-

16:25 Tom Kralidis: new pygeoapi podcast with MapScaping

on Planet OSGeo

For those interested in pygeoapi, the project was recently featured on MapScaping (available on Apple Podcasts, Google Podcasts, Stitcher and Spotify). The MapScaping folks were great to work with and I’d like to thank them for making this happen and asking all the right questions. Enjoy!

pygeoapi – A Python Geospatial Server

-

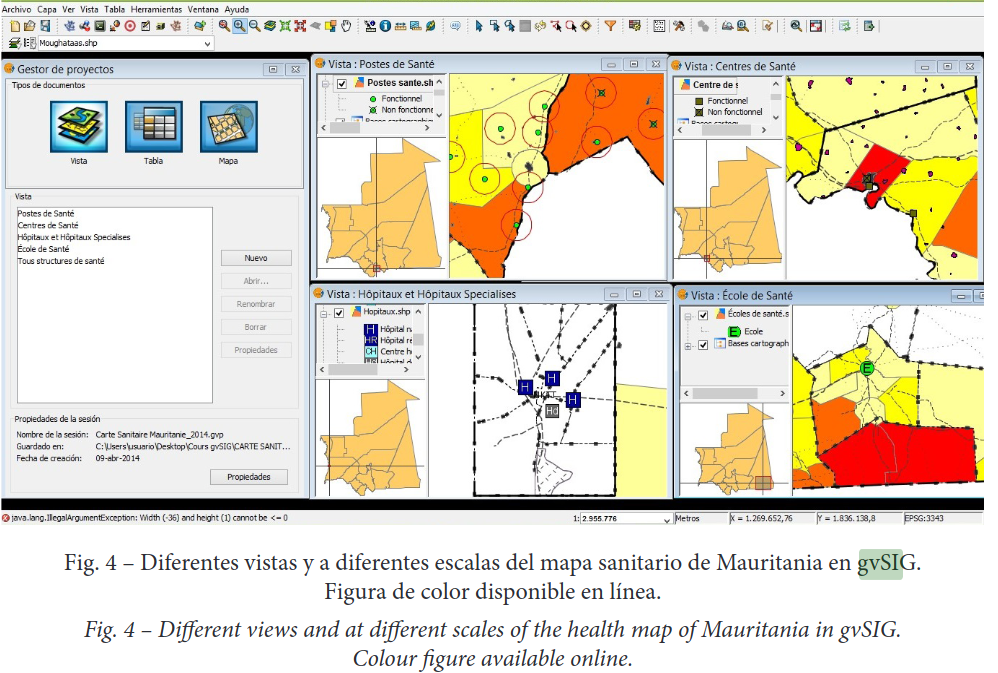

12:00 gvSIG Team: The Health Map, a tool for health planning and organisation: the case of Mauritania

on Planet OSGeo

So-called health maps are an instrument for promoting and democratising public information on health, and for upholding the right to it. They have also been used to contextualise and pinpoint inequalities in the public health sector and other related sectors. We bring you an article that explains how the health map of Mauritania was produced and its value in planning and reorganising the provision of health services at every level of the country's health pyramid, in order to respond to the growing demand for information from various organisations, but mainly from the Mauritanian health sector.

Geographic information systems were an important support for this work… and the software selected to produce the map was gvSIG Desktop.

The article, available in Spanish, can be found here: [https:]]

-

11:03 gvSIG Team: Comparing old and current cadastral information with gvSIG Desktop

on Planet OSGeo

An article recently published in the Boletín de la Asociación Española de Geografía shows how gvSIG was used to detect changes in land use and crop types from 1930 to the present day.

Title: Cartografía antigua catastral para la detección de cambios de cultivo: los mapas topográficos parcelarios de Alboraya (1930–2013) (Old cadastral cartography for detecting crop changes: the parcel topographic maps of Alboraya, 1930–2013)

Open-access article: [https:]]

-

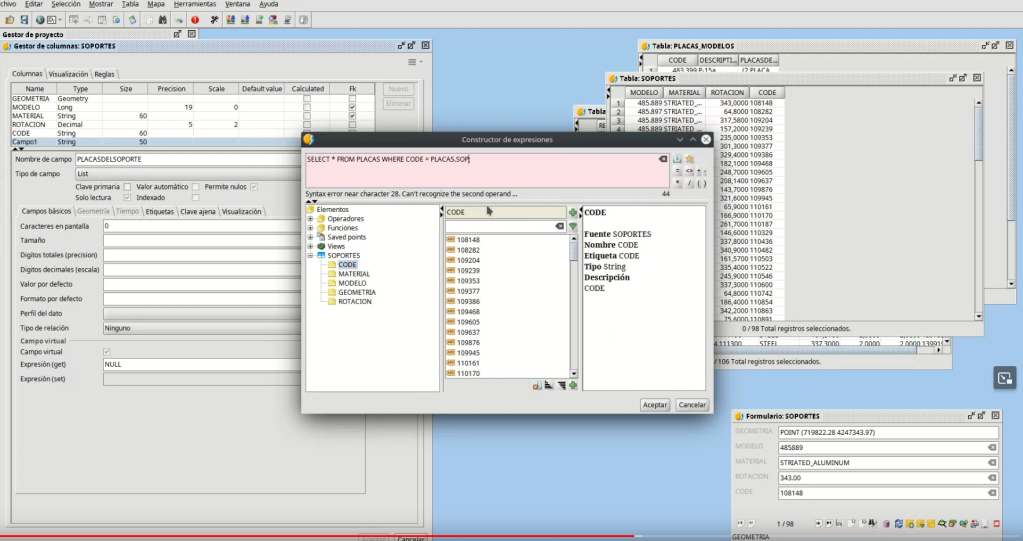

10:49 gvSIG Team: Course-workshop on working with data models in gvSIG Desktop

on Planet OSGeo

The programme of the last International gvSIG Conference included a workshop on working with data models in gvSIG Desktop.

The recording of the workshop is not available, but a preparatory video that we recorded beforehand is, and we have decided to share it in case it is useful.

It is a one-hour mini-course that can help you go beyond the usual ways of handling data in a GIS. Highly recommended if you work with even moderately complex data models.

Workshops offering such advanced training are neither common nor easy to access… so my recommendation is not to miss it:

-

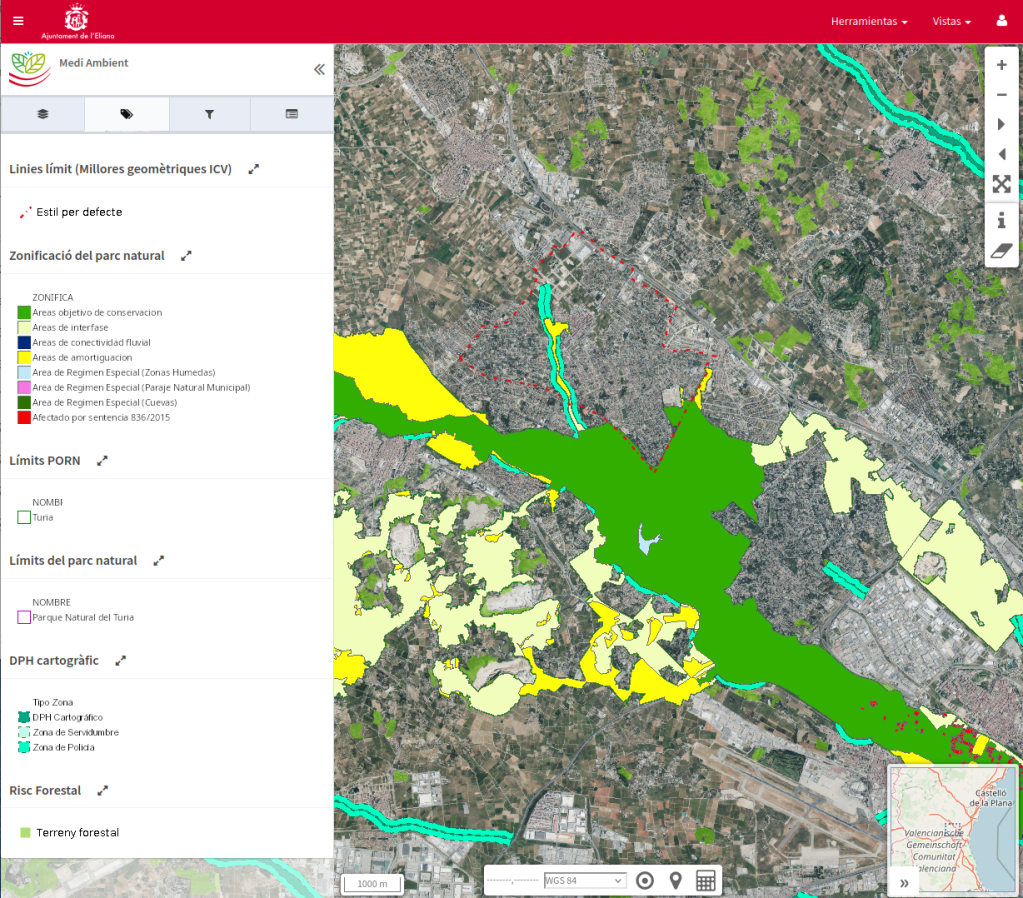

10:40 gvSIG Team: Public geoportals of L’Eliana

on Planet OSGeo

L’Eliana is one of the town councils that have just joined the ever-growing group of local administrations with their own SDI based on gvSIG Online. It is yet another example of how it is not only large cities that can have advanced solutions and technologies for managing their geographic information.

To start with, beyond the growing number of private geoportals aimed at internal management, four public map viewers are already available, each promoted by the corresponding department:

- Environment. Allows consultation of the “Natural Resources Management Plan (PORN)” of the Túria Natural Park and of risk maps for both flood risk and forest risk.

- Urban planning. Among other layers, gives access to information such as urban land classification and zoning, or the municipality's catalogue of protected assets and spaces.

- Municipal services. Provides access to highly relevant information such as sanitation services, water distribution and street lighting.

- Sports. Map of healthy routes.

The main website is:

And you can access the first public geoportals published at:

-

4:00 Camptocamp: SwitzerlandMobility - a new website with a unique map and content features

on Planet OSGeo

For the new version of their website, SwitzerlandMobility wanted to create a unique concept that mashes up classical information with geographic information on maps.

-

17:00 OGC CEO Dr. Nadine Alameh receives 24th Annual Women in Technology Leadership Award

on Open Geospatial Consortium (OGC)

The Open Geospatial Consortium (OGC) is pleased to announce that OGC CEO Dr. Nadine Alameh has received the 24th Annual Women in Technology Leadership Award in the Non-Profit and Academia category, which honors women who demonstrate exemplary leadership traits and have achieved success in a non-profit organization or educational institution.

The Women in Technology’s Annual Leadership Awards seek to “recognize and honor female leaders whose achievements, mentorship and contributions to the community align with the WIT mission of advancing women in technology from the classroom to the boardroom” by honoring and celebrating female professionals who have found success in entrepreneurial, STEM, government, and corporate industries while inspiring colleagues, partners, and their community.

“These awards are a cornerstone of the WIT mission to advance women in technology from the classroom to the boardroom,” said Amber Hart, President of WIT and Co-Owner/Founder of The Pulse of GovCon, in a press release for the awards. “Recognizing the success of these women provides a vision for current and future leaders of what is possible with determination and focus and highlights the role of mentorship and sponsorship in building a successful and meaningful career.”

In the nomination letter for the award, Chair of the OGC Board Jeff Harris, Vice Chair Prashant Shukle, and Board member Zaffar Sadiq Mohamed-Ghouse said that the OGC board nominated Nadine for her “outstanding global leadership of the Open Geospatial Consortium and its 500 members through a period of profound change.”

“When Nadine assumed the role of Chief Executive Officer, she articulated a new vision and role for the OGC – one where it had to be relevant at the speed of change. To that end, she has worked tirelessly in pursuit of this vision as she mobilized some of the world’s largest technology companies, developed and developing national governments, and small- and medium-sized businesses and startups. That she delivered in a not-for-profit entity and with a steely resolve to ensure the global public interest – not only speaks of the scale of her achievements but also its impact on the global public good.”

The 24th Annual Leadership Awards were presented at a Gala on Thursday, May 18, 2023, at the Hyatt Regency in Reston, VA, USA.

The post OGC CEO Dr. Nadine Alameh receives 24th Annual Women in Technology Leadership Award appeared first on Open Geospatial Consortium.

-

10:21 gvSIG Team: A look at the Spatial Data Infrastructure (SDI) of Cullera

on Planet OSGeo

The Cullera SDI started in the urban planning department, but it has quickly spread to all municipal areas and departments. It currently has dozens of internal geoportals and a number of public map viewers where citizens and visitors can consult relevant information about the municipality.

With this post we are starting a series of articles presenting various Spatial Data Infrastructures implemented with gvSIG Online, which is becoming the reference solution for SDIs built with free software.

Let's review just a few of the public geoportals:

- Tourism: the main geoportal, which gathers tourism-related information. It brings together an extensive set of information such as hotel accommodation, Wi-Fi points and zones, gastronomy, museums, routes and tourist trails, beaches…

- Mobility and transport: allows consultation of mobility-related information such as car parks, bike lanes, bus lines, electric charging points…

- Sports: this geoportal shows the routes of various sporting events such as the Volta a peu a Cullera, the triathlon, the San Silvestre…

- Agriculture and hunting activities: contains information on land use, land banks…

- Municipal services: map viewer with information on municipal facilities such as health centres, libraries, sports facilities, schools…

- Beaches: now that the summer season is approaching, here you can find useful information such as accessible beaches, infrastructure, blue flags…

- Urban planning: where the SDI began, with all the information typical of this kind of geoportal on urban planning (zoning and land classification) and the cadastre. Notably, urban planning regulations are linked to the geographic features.

- And more public geoportals: infrastructure, environment and climate change, waste management, citizenship, archaeology, health, festivals and commerce. And they keep growing…

The address of the main page of the Cullera SDI is:

Besides the map services, the main content of the SDI is its geoportals. All the map viewers currently available can be viewed at the following link:

It is also worth mentioning that gvSIG Online can generate “Shared views”, which are simply URLs for sharing a specific configuration of a geoportal, with certain layers visible or hidden by default and even a specific map extent (the area of the municipality shown on opening).

On the Cullera Town Council's tourism website, accessible at [https:]], there are shared views in various sections of the site: beaches, nature routes, monuments… All these shared views link to different configurations of the Tourism geoportal that is part of the SDI.

Finally, among the improvements planned for the coming months are integration with the case-file management system (SEGEX by Sedipualba) and the adoption of gvSIG Mapps as an app for field data collection.

-

19:48 GeoSolutions: GeoSolutions presence at FOSS4G 2023 in Prizren (Kosovo)

on Planet OSGeo

-

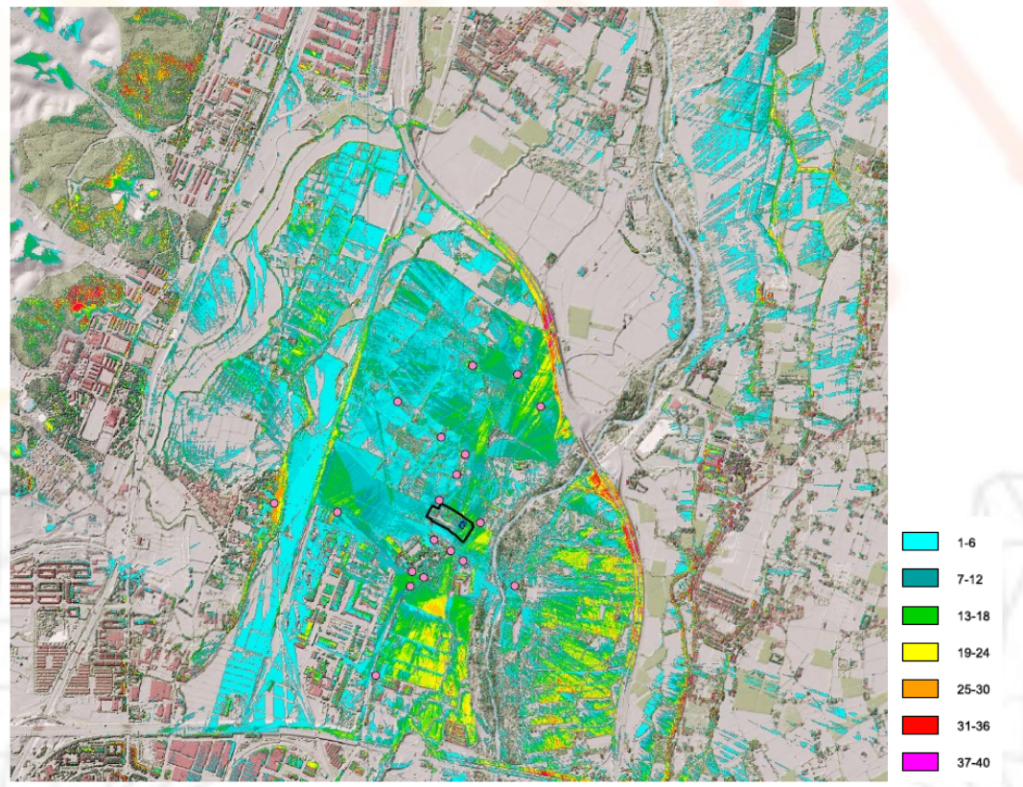

10:11 gvSIG Team: gvSIG Desktop applied to landscape impact risk

on Planet OSGeo

Today we bring you a presentation by Geoscan, a consultancy with many years of experience in areas such as geological engineering and the environment. In this case, they show how gvSIG Desktop is used to analyse the landscape impact of the facilities of a company that produces and markets seeds.

-

9:42 OPENGIS.ch: Support for WMTS in QGIS Swiss Locator

on Planet OSGeo

The QGIS swiss locator plugin makes life easier for many users in Switzerland by making the extensive geodata of swisstopo and opendata.swiss accessible. This includes a wide range of GIS layers, but also feature information and a place-name search.

Thanks to a project funded by the QGIS user group Switzerland, OPENGIS.ch was able to extend its plugin with an additional feature, this time the integration of WMTS as a data source, which is a pretty cool thing. But what exactly is the difference between WMS and WMTS?

WMS vs. WMTS

First, the similarities: both protocols, WMS and WMTS, are designed to transfer map images from a server to a client. What is transferred is raster data, that is, pixels. Moreover, these are rendered images, not raw data. This makes them suitable for presentation: in the browser, in a desktop GIS or for a PDF export.

The difference lies in the T. The T stands for “Tiled”. With a WMS (without tiling), any image extent can be requested. With a WMTS, the data are delivered in a precisely predefined grid, as tiles.
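To make the grid idea concrete, here is a minimal Python sketch of how a client could work out which WMTS tile contains a given map coordinate. It follows the TileMatrix arithmetic of the OGC WMTS 1.0 standard (a 0.28 mm standardised pixel size, tile spans derived from the scale denominator); the `TileMatrix` class, the `tile_for_point` function and the tile matrix values are hypothetical and not taken from swisstopo's service.

```python
import math
from dataclasses import dataclass

# OGC WMTS 1.0 uses a standardised rendering pixel size of 0.28 mm.
STANDARD_PIXEL_SIZE_M = 0.28e-3


@dataclass
class TileMatrix:
    """Subset of a WMTS TileMatrix definition (real values come from GetCapabilities)."""
    scale_denominator: float
    top_left_x: float  # TopLeftCorner, first axis (assumed easting)
    top_left_y: float  # TopLeftCorner, second axis (assumed northing)
    tile_width: int = 256
    tile_height: int = 256
    meters_per_unit: float = 1.0  # 1.0 for projected CRSs in metres


def tile_for_point(tm: TileMatrix, x: float, y: float) -> tuple[int, int]:
    """Return (tile_col, tile_row) of the tile containing map coordinate (x, y)."""
    pixel_span = tm.scale_denominator * STANDARD_PIXEL_SIZE_M / tm.meters_per_unit
    tile_span_x = tm.tile_width * pixel_span
    tile_span_y = tm.tile_height * pixel_span
    # Columns grow to the right and rows grow downward from the top-left corner.
    tile_col = math.floor((x - tm.top_left_x) / tile_span_x)
    tile_row = math.floor((tm.top_left_y - y) / tile_span_y)
    return tile_col, tile_row


# Hypothetical tile matrix whose scale denominator makes one pixel span 100 m.
tm = TileMatrix(scale_denominator=100 / STANDARD_PIXEL_SIZE_M,
                top_left_x=0.0, top_left_y=1_000_000.0)
print(tile_for_point(tm, 30_000.0, 990_000.0))  # (1, 0)
```

Because every client computes the same column and row for the same place, a tile fetched once can be reused instead of rendering a new image for each slightly different extent.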

The main advantage of WMTS lies in this standardisation on a grid. It allows the tiles to be cached. This can happen on the server, which can precompute all tiles and answer a request by sending back a file directly, without having to render an image again. But it also enables client-side caching: the browser (or, in the case of Swiss Locator, QGIS) can simply reuse each tile without contacting the server again. This can cut response times dramatically and makes working with applications smooth.
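The client-side caching benefit can be sketched in a few lines of Python, assuming a hypothetical endpoint (`https://example.org/wmts`) and hypothetical layer, style and tile matrix set names. A tile is addressed by `TILEMATRIX`, `TILEROW` and `TILECOL` (standard WMTS KVP GetTile parameters) rather than by an arbitrary bounding box, so the address itself can serve as a cache key; `fetch_tile` only counts downloads instead of performing real HTTP requests.

```python
from functools import lru_cache
from urllib.parse import urlencode

WMTS_ENDPOINT = "https://example.org/wmts"  # hypothetical service URL
fetch_count = 0  # how many "downloads" actually happened


def get_tile_url(layer: str, matrix_set: str, matrix: str, row: int, col: int) -> str:
    """Build a KVP GetTile request: the tile is addressed by matrix/row/col, not a bbox."""
    params = {
        "SERVICE": "WMTS",
        "REQUEST": "GetTile",
        "VERSION": "1.0.0",
        "LAYER": layer,
        "STYLE": "default",  # hypothetical style name
        "FORMAT": "image/png",
        "TILEMATRIXSET": matrix_set,
        "TILEMATRIX": matrix,
        "TILEROW": row,
        "TILECOL": col,
    }
    return f"{WMTS_ENDPOINT}?{urlencode(params)}"


@lru_cache(maxsize=1024)
def fetch_tile(layer: str, matrix_set: str, matrix: str, row: int, col: int) -> bytes:
    """Stand-in for an HTTP download; repeated tile addresses are served from the cache."""
    global fetch_count
    fetch_count += 1
    url = get_tile_url(layer, matrix_set, matrix, row, col)
    return url.encode()  # placeholder for the image bytes a real client would receive


# Panning back and forth over the same area requests tile (0, 0) twice.
fetch_tile("ortho", "grid", "10", 0, 0)
fetch_tile("ortho", "grid", "10", 0, 1)
fetch_tile("ortho", "grid", "10", 0, 0)
print(fetch_count)  # 2: the second (0, 0) request never reached the "server"
```

A WMS request with a freely chosen bounding box rarely repeats exactly, which is why this kind of key-based reuse works so much better with tiles.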

So why still use WMS?

It has its advantages too, of course. A WMS can deliver images optimised for exactly one request. For example, it can place all labels optimally so that they are not cut off at the map edge; with tiles and their many edges, this is harder. A WMS can also combine several requested layers with effects: blending modes are a powerful way to create visually appealing maps. Furthermore, a WMS can work at any resolution (DPI), which means that fonts and symbols are displayed at a comfortable size on every display while the map image itself stays sharp. The same of course applies to a PDF export.

Another property of a WMS is that normally no caching takes place. With a database behind it, the current state of the data is always delivered. This can also be desirable: for example, yesterday's AV (official cadastral survey) dataset should not be served by accident.

All of this, however, requires the server to implement it accordingly. For the maps published by swisstopo via map.geo.admin.ch, the font size is baked into the map image even with WMS and cannot be adjusted by the server.

In the case of QGIS Swiss Locator, the goal is often to load background maps, e.g. orthophotos or national maps for orientation, and often other data of a fairly static nature as well. In this scenario, the advantages of WMTS come fully into play. And so, on behalf of all Swiss QGIS users, we would like to thank the QGIS user group Switzerland for making this implementation possible!

QGIS Swiss Locator is the Swiss army knife of QGIS. Is there a tool missing that you would like to see integrated? Leave us a comment!

-

13:00 Lutra consulting: Webinar: Processing LiDAR data in QGIS 3.32

on Planet OSGeo

Join this webinar to learn more about the new features in QGIS for processing LiDAR data:

Date: Monday, June 26, 2023 at 14:00 GMT

Duration: 30 minutes + 15 minutes Q&A session

Speaker: Martin Dobias, CTO at Lutra Consulting with more than 15 years of QGIS development experience

Description

Point clouds are an increasingly popular data type thanks to the decreasing cost of their acquisition through lidar surveys and photogrammetry. On top of that, more and more national mapping agencies release high resolution point cloud data (spanning large areas and consisting of billions of points), unlocking many new use cases.

This webinar will summarize the latest QGIS release, 3.32, and its new tools for point cloud analysis available right from the QGIS Processing toolbox: clip, filter, merge, export to raster, extract boundaries and more, all backed by the PDAL library that already ships with QGIS, without having to rely on third-party proprietary software.

This work was made possible by the generous donations to our crowdfunding.

-

10:01 gvSIG Team: Version control and multi-user map editing with free software

on Planet OSGeo

One of the most advanced developments to reach gvSIG Desktop is its version control system, VCSGIS, which has made it a key tool for solving advanced editing problems to which free software had no answer.

In this presentation you can learn what VCSGIS is and everything it allows you to do.

-

19:48 GeoSolutions: GeoSolutions at WAGIS 2023 in Tacoma, WA 14-15 June

on Planet OSGeo

-

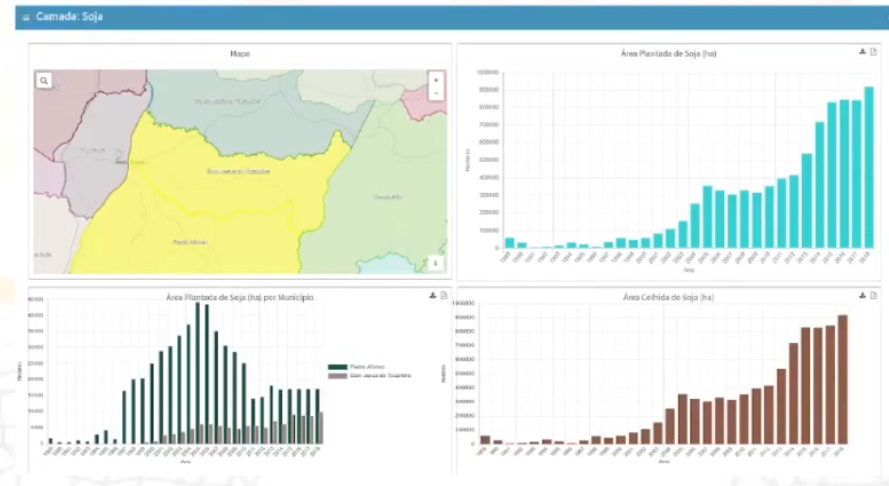

9:56 gvSIG Team: Geographic information platform of the State of Tocantins, Brazil

on Planet OSGeo

We bring you the presentation of another interesting gvSIG Online implementation project, in this case in the State of Tocantins (Brazil), which has made it possible to publish numerous layers of geographic information, structured into several geoportals.

From this project, we would also highlight the development of tools in gvSIG Online for generating dashboards.

-

18:00 Lutra consulting: Virtual Point Clouds (VPC)

on Planet OSGeo

As part of our crowdfunding campaign, we have introduced a new method to handle a large number of point cloud files. In this article, we delve into the technical details of the new format, the rationale behind our choice and how you can create, view and process virtual point cloud files.

Rationale

Lidar surveys of larger areas are often multi-terabyte datasets with many billions of points. Representing such a large dataset as a single point cloud file is not practical because of the difficulties of storage, transfer, display and analysis. Point cloud data are therefore typically stored and distributed as square tiles (e.g. 1 km × 1 km), each with a more manageable file size (e.g. ~200 MB when compressed).
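As a rough illustration of working with such a tile grid, the Python sketch below lists the 1 km tiles that an area of interest touches. It assumes a hypothetical grid aligned on multiples of 1000 m from the CRS origin and identifies tiles by (column, row); real datasets use their own naming schemes.

```python
import math

TILE_SIZE_M = 1000.0  # 1 km x 1 km tiles (assumed grid aligned on the CRS origin)


def tiles_for_extent(xmin, ymin, xmax, ymax, tile_size=TILE_SIZE_M):
    """Return (col, row) indices of all grid tiles intersecting an extent."""
    col_min = math.floor(xmin / tile_size)
    row_min = math.floor(ymin / tile_size)
    # ceil(...) - 1 keeps an extent that ends exactly on a tile edge
    # from pulling in the next tile.
    col_max = math.ceil(xmax / tile_size) - 1
    row_max = math.ceil(ymax / tile_size) - 1
    return [(c, r) for c in range(col_min, col_max + 1)
            for r in range(row_min, row_max + 1)]


# A 1.5 km x 0.5 km area of interest straddling two tiles.
print(tiles_for_extent(651_200, 6_860_100, 652_700, 6_860_600))
# [(651, 6860), (652, 6860)]
```

Even this small area needs two files, which is exactly the situation the following paragraphs describe.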

Tiling solves the problem of data size, but it introduces issues when processing or viewing an area of interest that does not fit entirely into a single tile. Users need to develop workflows that take multiple tiles into account, and special care must be taken with data near tile edges to avoid unwanted artefacts in outputs. Similarly, when viewing point cloud data, it becomes cumbersome to load many individual files and apply the same symbology to each.
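One common way to handle the edge problem is to read points from a small buffer around the tile being processed, which means opening the neighbouring tiles too. The Python sketch below shows that selection; the 20 m buffer, the 3 × 3 dataset and the function names are hypothetical, and this is not a description of how QGIS or PDAL wrench implement it internally.

```python
import math


def buffered_extent(col, row, tile_size=1000.0, buffer=20.0):
    """Extent of tile (col, row) grown by `buffer` metres on every side."""
    xmin, ymin = col * tile_size, row * tile_size
    return (xmin - buffer, ymin - buffer,
            xmin + tile_size + buffer, ymin + tile_size + buffer)


def tiles_to_read(col, row, available, tile_size=1000.0, buffer=20.0):
    """Tiles whose points are needed to process (col, row) without edge artefacts.

    `available` is the set of (col, row) tiles that actually exist in the dataset.
    """
    xmin, ymin, xmax, ymax = buffered_extent(col, row, tile_size, buffer)
    needed = []
    for c in range(math.floor(xmin / tile_size), math.ceil(xmax / tile_size)):
        for r in range(math.floor(ymin / tile_size), math.ceil(ymax / tile_size)):
            if (c, r) in available:
                needed.append((c, r))
    return needed


available = {(c, r) for c in range(3) for r in range(3)}  # hypothetical 3 x 3 dataset
print(tiles_to_read(0, 0, available))  # [(0, 0), (0, 1), (1, 0), (1, 1)]
```

A corner tile needs four files and an inner tile needs nine, which is the bookkeeping users otherwise have to build into their own workflows.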

Here is an example of several point cloud tiles loaded in QGIS. Each tile is styled based on min/max Z values of the tile, creating visible artefacts on tile edges. The styling has to be adjusted for each layer separately:
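The seams come from each tile mapping its own min/max Z onto the colour ramp, so the same elevation gets different colours in different tiles. A minimal Python sketch of the alternative, computing one shared range from per-tile statistics, follows; the file names and Z values are made up.

```python
# Hypothetical per-tile statistics: (min Z, max Z) in metres.
tile_stats = {
    "tile_a.laz": (112.4, 180.9),
    "tile_b.laz": (95.0, 141.2),
    "tile_c.laz": (130.7, 210.3),
}


def global_z_range(stats):
    """Combine per-tile (min, max) values into one range shared by every tile."""
    z_min = min(lo for lo, _ in stats.values())
    z_max = max(hi for _, hi in stats.values())
    return z_min, z_max


def normalise(z, z_range):
    """Map z to [0, 1] for a colour ramp, identically for all tiles."""
    lo, hi = z_range
    return (z - lo) / (hi - lo)


z_range = global_z_range(tile_stats)
print(z_range)  # (95.0, 210.3)

# With per-tile ranges, the same elevation would get two different colours:
print(normalise(140.0, tile_stats["tile_a.laz"]) != normalise(140.0, tile_stats["tile_b.laz"]))  # True
```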

Virtual Point Clouds

In the GIS world, many users are familiar with the concept of virtual rasters. A virtual raster is a file that simply references other raster files with actual data. In this way, GIS software then treats the whole dataset comprising many files as a single raster layer, making the display and analysis of all the rasters listed in the virtual file much easier.

Borrowing the concept of virtual rasters from GDAL, we have introduced a new file format that references other point cloud files - and we started to call it virtual point cloud (VPC). Software supporting virtual point clouds handles the whole tiled dataset as a single data source.

At the core, a virtual point cloud file is a simple JSON file with .vpc extension, containing references to actual data files (e.g. LAS/LAZ or COPC files) and additional metadata extracted from the files. Even though it is possible to write VPC files by hand, it is strongly recommended to create them using an automated tool as described later in this post.

On a more technical level, a virtual point cloud file is based on the increasingly popular STAC specification (the whole file is a STAC API ItemCollection). For more details, please refer to the VPC specification that also contains best practices and optional extensions (such as overviews).
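To give an idea of the shape of such a file, the Python sketch below builds a simplified STAC ItemCollection referencing two point cloud files. It fills in only a handful of fields (core STAC plus `pc:count` from the STAC point cloud extension); the file names, extents, point counts and placeholder datetime are hypothetical, and the output is not guaranteed to validate against the VPC specification, so real files should come from the tools described below.

```python
import json


def bbox_polygon(lon_min, lat_min, lon_max, lat_max):
    """GeoJSON polygon (WGS84 lon/lat, as STAC geometries use) for a rectangle."""
    ring = [[lon_min, lat_min], [lon_max, lat_min], [lon_max, lat_max],
            [lon_min, lat_max], [lon_min, lat_min]]
    return {"type": "Polygon", "coordinates": [ring]}


def make_vpc(files):
    """Build a simplified STAC ItemCollection that references point cloud files.

    `files` maps a relative file path to (point_count, (lon_min, lat_min, lon_max, lat_max)).
    """
    features = []
    for href, (count, bbox) in files.items():
        features.append({
            "type": "Feature",
            "stac_version": "1.0.0",
            "id": href.rsplit("/", 1)[-1],
            "geometry": bbox_polygon(*bbox),
            "bbox": list(bbox),
            "properties": {
                "datetime": "2023-01-01T00:00:00Z",  # placeholder acquisition date
                "pc:count": count,  # number of points (STAC point cloud extension)
            },
            "links": [],
            "assets": {"data": {"href": href, "roles": ["data"]}},
        })
    return {"type": "FeatureCollection", "features": features}


# Hypothetical tiles, extents and point counts.
vpc = make_vpc({
    "./tile_651_6860.copc.laz": (12_000_000, (2.30, 48.80, 2.32, 48.81)),
    "./tile_652_6860.copc.laz": (11_500_000, (2.32, 48.80, 2.34, 48.81)),
})
vpc_text = json.dumps(vpc, indent=2)  # what would be written to e.g. mydata.vpc
```

The point of the format is visible even in this reduced form: the VPC holds only references and metadata, while the points stay in the original files.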

Virtual Point Clouds in QGIS

We have added support for virtual point clouds in QGIS 3.32 (released in June 2023) thanks to the many organisations and individuals who contributed to last year’s joint crowdfunding with North Road and Hobu. The support in QGIS consists of three parts:

- Create virtual point clouds from a list of individual files

- Load virtual point clouds as a single map layer

- Run processing algorithms using virtual point clouds

For those who prefer command line tools, PDAL wrench includes a build_vpc command to create virtual point clouds, and all the other PDAL wrench commands support virtual point clouds as input.
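For scripted workflows, the command can be assembled and launched from Python, as in the sketch below. The `--output=` option followed by positional input files reflects the usage shown in the PDAL wrench README as far as we know; check the PDAL wrench documentation for the exact options of your version. The input file names are hypothetical, and the command only runs if `pdal_wrench` is on the PATH and the files exist.

```python
import os
import shutil
import subprocess


def build_vpc_command(output, inputs):
    """Argument list for `pdal_wrench build_vpc` (option syntax assumed, see above)."""
    return ["pdal_wrench", "build_vpc", f"--output={output}", *inputs]


inputs = ["tile_1.laz", "tile_2.laz"]  # hypothetical input files
cmd = build_vpc_command("mydata.vpc", inputs)

# Run only when the tool is installed and the inputs are present.
if shutil.which("pdal_wrench") and all(os.path.exists(p) for p in inputs):
    subprocess.run(cmd, check=True)
```

Passing the arguments as a list rather than a shell string avoids quoting problems with paths that contain spaces.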

Using Virtual Point Clouds

In this tutorial, we are going to generate a VPC using the new Processing algorithm, load it in QGIS and then generate a DTM from the ground class. You will need QGIS 3.32 or later for this. For the purpose of this example, we are using LiDAR data provided by the IGN France data hub.

In QGIS, open the Processing toolbox panel and search for the Build virtual point cloud (VPC) algorithm (located in the Point cloud data management group):

VPC algorithm in the Processing toolbox

In the algorithm’s window, you can add point cloud layers already loaded in QGIS or alternatively point it to a folder containing your LAZ/LAS files. It is recommended to also check the optional parameters:

- Calculate boundary polygons: QGIS will be able to show the exact boundaries of the data (rather than just a rectangular extent)

- Calculate statistics: will help QGIS understand the ranges of values of various attributes

- Build overview point cloud: will also generate a single “thinned” point cloud of all your input data (using only every 1000th point from the original data). The overview point cloud will be created next to the VPC file; for example, for mydata.vpc, the overview point cloud would be named mydata-overview.copc.laz

VPC algorithm inputs, outputs and options
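The overview naming rule and the thinning described above can be sketched in Python as follows. Keeping every 1000th point with a simple slice is our assumption for illustration; the actual algorithm may select points differently.

```python
def overview_name(vpc_path: str) -> str:
    """Return the overview file name for a VPC, e.g. mydata.vpc -> mydata-overview.copc.laz."""
    suffix = ".vpc"
    if not vpc_path.endswith(suffix):
        raise ValueError(f"expected a path ending in {suffix}: {vpc_path}")
    return vpc_path[: -len(suffix)] + "-overview.copc.laz"


def thin_every_nth(points, step=1000):
    """Keep one point out of every `step` (the 1st, the 1001st, ...)."""
    return points[::step]


points = list(range(10_500))  # stand-in for 10 500 points read from all input files
overview = thin_every_nth(points)
print(len(overview))  # 11
print(overview_name("data/mydata.vpc"))  # data/mydata-overview.copc.laz
```

A 1:1000 reduction keeps the overview small enough to draw the whole dataset at once, while the full-resolution tiles are only read when zooming in.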

After you set the output file and start the process, you should end up with a single VPC file referencing all your data. If you leave the optional parameters unchecked, the VPC file will be built very quickly, as the algorithm will only read the metadata of the input files. With any of the optional parameters set, the algorithm will read all points, which can take some time.

Now you can load the VPC file in QGIS like any other layer: using the QGIS browser, the Data Source Manager dialog, or by drag and drop from a file browser. After loading a VPC in QGIS, the 2D canvas will show the boundaries of individual files, and as you zoom in, the actual point cloud data will be shown. Here is a VPC loaded together with the overview point cloud:

Virtual point cloud (thinned version) generated by the VPC algorithm

Zooming in on a 2D map in QGIS with elevation shading: initially only the overview point cloud is shown, later replaced by the actual dense point cloud:

VPC output on 2D: displaying details when zooming in

In addition to 2D maps, you can view the VPC in a 3D map window too:

If the input files of a VPC are not COPC files, QGIS will currently only show their boundaries in 2D and 3D views, but processing algorithms will work fine. It is, however, possible to use the Create COPC algorithm to batch convert LAS/LAZ files to COPC files, and then load a VPC that references the COPC files.

It is also worth noting that VPCs also work with input data that is not tiled - for example, in some cases the data are distributed as flightlines (with lots of overlaps between files). While this is handled fine by QGIS, for the best performance it is generally recommended to first tile such datasets (using the Tile algorithm) before doing further display and analysis.

Processing Data with Virtual Point Clouds

Now that we have the VPC generated, we can run other processing algorithms. For this example, we are going to convert the ground class of the point cloud to a digital terrain model (DTM) raster. In the QGIS Processing toolbox, search for the Export to raster algorithm (in the Point cloud conversion group):

VPC layer can be used as an input to the point cloud processing algorithm

This will use the Z values from the VPC layer and generate a terrain raster at a user-defined resolution. The algorithm processes the tiles in parallel, taking care of edge artefacts (at tile edges, it also reads data from the neighbouring tiles). The output of this algorithm will look like this:

Converting a VPC layer to a DTM
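For intuition about what a point-cloud-to-DTM export does, here is a simplified sketch that bins ground points into grid cells and stores the mean Z per cell, leaving empty cells as nodata (which is where holes like those described below come from). Mean binning, the `NODATA` value and the function name are assumptions; the Export to raster algorithm may interpolate differently. Because points are filtered by the grid bounds rather than by source tile, feeding in buffered points from neighbouring tiles is what avoids edge artefacts.

```python
import math

NODATA = -9999.0  # assumed nodata marker


def rasterize_mean_z(points, xmin, ymax, resolution, ncols, nrows):
    """Bin (x, y, z) points into a grid; each cell gets the mean Z, or NODATA if empty."""
    sums = [[0.0] * ncols for _ in range(nrows)]
    counts = [[0] * ncols for _ in range(nrows)]
    for x, y, z in points:
        col = math.floor((x - xmin) / resolution)
        row = math.floor((ymax - y) / resolution)  # row 0 is the top (north) edge
        if 0 <= col < ncols and 0 <= row < nrows:
            sums[row][col] += z
            counts[row][col] += 1
    return [[sums[r][c] / counts[r][c] if counts[r][c] else NODATA
             for c in range(ncols)] for r in range(nrows)]


ground = [(0.5, 1.5, 10.0), (0.6, 1.4, 12.0), (1.5, 0.5, 20.0)]
print(rasterize_mean_z(ground, xmin=0.0, ymax=2.0, resolution=1.0, ncols=2, nrows=2))
```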

The output raster contains holes where there were no points classified as ground. If needed for your use case, you can fill the holes using the GDAL Fill nodata algorithm in the Processing toolbox and create a smooth terrain model for your input virtual point cloud layer:

Filling the holes in the DTM
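The hole filling can be approximated with inverse distance weighting. GDAL's Fill nodata uses a more elaborate directional search plus optional smoothing passes, so treat this as a simplified sketch; `max_distance` (in cells), `power` and the `NODATA` marker are assumed parameters.

```python
import math

NODATA = -9999.0  # assumed nodata marker


def fill_nodata_idw(grid, max_distance=3.0, power=2.0):
    """Return a copy of `grid` in which NODATA cells are replaced by an inverse-distance-
    weighted average of valid cells within `max_distance` cells; others stay NODATA."""
    nrows, ncols = len(grid), len(grid[0])
    valid = [(r, c, grid[r][c]) for r in range(nrows) for c in range(ncols)
             if grid[r][c] != NODATA]
    out = [row[:] for row in grid]
    for r in range(nrows):
        for c in range(ncols):
            if grid[r][c] != NODATA:
                continue
            num = den = 0.0
            for vr, vc, v in valid:
                d = math.hypot(vr - r, vc - c)  # never 0: a valid cell is not a hole
                if d <= max_distance:
                    w = 1.0 / d ** power
                    num += w * v
                    den += w
            if den > 0:
                out[r][c] = num / den
    return out


print(fill_nodata_idw([[10.0, NODATA, 30.0]]))  # [[10.0, 20.0, 30.0]]
```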

Virtual point clouds can also be used with any other algorithm in the point cloud processing toolbox. For more information about the newly introduced algorithms, please see our previous blog post.

All of the point cloud algorithms also allow setting a filtering extent, so even with a very large VPC, it is possible to run algorithms directly on a small region of interest without having to create temporary point cloud files. Our recommendation is to have input data ready in COPC format, as this format provides more efficient access to data when spatial filtering is used.
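The benefit of a filtering extent on a large VPC comes from only reading the tiles that overlap it. Here is a minimal sketch of that selection step, assuming each tile's bounds are known from the VPC metadata; the tile names, coordinates and helper names are hypothetical.

```python
from typing import NamedTuple


class BBox(NamedTuple):
    xmin: float
    ymin: float
    xmax: float
    ymax: float

    def intersects(self, other: "BBox") -> bool:
        """True if the two boxes overlap or touch."""
        return (self.xmin <= other.xmax and other.xmin <= self.xmax and
                self.ymin <= other.ymax and other.ymin <= self.ymax)


def tiles_for_extent(tile_bounds: dict, extent: BBox) -> list:
    """Return the names of tiles whose bounds intersect the cropping extent."""
    return sorted(name for name, b in tile_bounds.items() if b.intersects(extent))


tiles = {"a": BBox(0, 0, 100, 100), "b": BBox(100, 0, 200, 100), "c": BBox(300, 300, 400, 400)}
print(tiles_for_extent(tiles, BBox(50, 50, 120, 80)))  # ['a', 'b']
```

Within each selected COPC tile, the octree lets a reader narrow the request down further, which is one reason COPC input is recommended here.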

Streaming Data from Remote Sources with VPCs

One of the very useful features of VPCs is that they work not only with local files but can also reference data hosted on remote HTTP servers. Paired with COPC, point cloud data can be streamed to QGIS for viewing and/or processing: QGIS only downloads the small portions of a virtual point cloud that it needs, rather than having to download all the data before it can be viewed or analysed.
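Streaming works because COPC files can be read piecewise with HTTP range requests. As a sketch, assuming our reading of the COPC specification (a 375-byte LAS 1.4 header followed immediately by the COPC info VLR, 589 bytes in total) and a server that honours `Range` headers, a client could fetch just the header like this; the URL and helper names are placeholders.

```python
import urllib.request

# Assumed from the COPC spec: LAS header (375) + VLR header (54) + COPC info (160) = 589 bytes.
COPC_HEADER_BYTES = 589


def header_request(url: str) -> urllib.request.Request:
    """Build a request for only the first bytes of a remote COPC file."""
    return urllib.request.Request(url, headers={"Range": f"bytes=0-{COPC_HEADER_BYTES - 1}"})


def fetch_header(url: str) -> bytes:
    """Download only the header bytes (requires network access and Range support)."""
    with urllib.request.urlopen(header_request(url)) as resp:
        return resp.read()


def looks_like_las(header: bytes) -> bool:
    """Every LAS/LAZ (and therefore COPC) file starts with the 'LASF' signature."""
    return header[:4] == b"LASF"


req = header_request("https://example.com/tile.copc.laz")  # placeholder URL
print(req.get_header("Range"))  # bytes=0-588
```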

Using IGN’s lidar data provided as COPC files, we have built a small virtual point cloud, ign-chambery.vpc, referencing 16 km² of data (nearly 700 million points). This VPC file can be loaded in QGIS and used for 2D/3D visualisation, elevation profiles and processing, with QGIS handling data requests to the server as necessary. Processing algorithms take only a couple of seconds if the selected area of interest is small (make sure to set the “Cropping extent” parameter of the algorithms).
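As a rough sanity check on these numbers, the average density works out as follows (using the rounded figures from the text, so the true value is slightly lower):

$$
\rho \approx \frac{7 \times 10^{8}\ \text{points}}{16\ \text{km}^2 \times 10^{6}\ \text{m}^2/\text{km}^2}
= \frac{7 \times 10^{8}}{1.6 \times 10^{7}}\ \text{points/m}^2
\approx 43.75\ \text{points/m}^2
$$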

All this greatly simplifies data access to point clouds:

- Data producers can use very simple infrastructure: a server hosting static COPC files together with a single VPC file referencing those COPC files.
- Users can use QGIS to view and process point cloud data as a single map layer, with no need to download large amounts of data, as QGIS (and PDAL) take care of streaming data as needed.

We are very excited about the opportunities that virtual point clouds are bringing to users, especially when combined with COPC format and access from remote servers!

Thanks again to all contributors to our crowdfunding campaign - without their generous support, this work would not have been possible.

Contact us if you would like to add more features in QGIS to handle, analyse or visualise lidar data.

-

17:00

17:00 Dr. Jeff de La Beaujardiere receives OGC Lifetime Achievement Award

on Open Geospatial Consortium (OGC)

The Open Geospatial Consortium (OGC) is excited to announce that Dr. Jeff de La Beaujardiere has been selected as the latest recipient of the OGC Lifetime Achievement Award. The announcement was made last night during the Executive Dinner in the U.S. Space & Rocket Center at the 126th OGC Member Meeting in GeoHuntsville, AL.

Jeff has been selected for the award due to his long-standing leadership, commitment, and support for the advancement and uptake of standards used for the dissemination of Earth Science information.

“I’m so happy that Jeff has been selected to receive the OGC Lifetime Achievement Award,” said OGC CEO, Dr. Nadine Alameh. “Jeff is more than a champion for standards, more than an OGC Gardels award winner, and more than the WMS editor and promoter: Jeff is a role model for many of us in geospatial circles, and has directly and indirectly influenced generations of interoperability enthusiasts to collaborate, to innovate, and to solve critical problems related to our Earth. From OGC and myself, I offer our congratulations and thank Jeff for his technical work – and for being such an inspiration to so many!”

For more than 25 years, Jeff’s support of open standards and OGC’s FAIR mission has improved access to Earth science information for countless users and decision-makers around the globe. Since 1995, Jeff has focused on improving public access to scientific data by pushing for it to be discoverable, accessible, documented, interoperable, citable, curated for long-term preservation, and reusable by the broader scientific community, external users, and decision-makers.

In the OGC community, Jeff is best known as the Editor of the OGC Web Map Service (WMS) Specification: a joint OGC/ISO Standard that now supports access to millions of datasets worldwide. OGC WMS was the first in the OGC Web Services suite of Standards and is the most downloaded Standard from OGC. But most importantly, the OGC WMS Standard truly revolutionized how geospatial data is shared and accessed over the web.

Jeff was also a major contributor to other OGC Standards, including the OGC Web Services Architecture, the OGC Web Map Context, OGC Web Terrain Service, and OGC Web Services Common.

Jeff’s journey with Standards – and his engagement with OGC – started back in 1998 when NASA was leading the effort to implement the Digital Earth program. At that time, Jeff championed interoperability standards as fundamental to realizing the Digital Earth vision. As part of his journey, he has provided leadership to the Geospatial Applications and Interoperability (GAI) Working Group of the U.S. Federal Geographic Data Committee and to the OGC Technical Committee.

In 2002 and 2003, Jeff served as Portal Manager for Geospatial One-Stop, a federal electronic government initiative. He led a team of experts in defining the requirements, architecture, and competitive solicitation for a Portal based on open standards and led an OGC interoperability initiative in developing and demonstrating a working implementation. This was a fast-paced, high-stakes effort involving many companies and agencies building on what today is the OGC Collaborative Solution & Innovation Program.

Jeff has received several awards for his leadership and impact in the many communities that he has participated in throughout his career, including the 2013 OGC Kenneth D. Gardels Award, the 2023 ESIP President’s Award, and the 2003 Falkenberg Award at AGU which honors “a scientist under 45 years of age who has contributed to the quality of life, economic opportunities, and stewardship of the planet through the use of Earth science information and to the public awareness of the importance of understanding our planet.”

With this lifetime achievement award, OGC recognizes and celebrates Jeff’s lifetime of service, and his steadfast support of FAIR geospatial information for the benefit of open science and society.

The post Dr. Jeff de La Beaujardiere receives OGC Lifetime Achievement Award appeared first on Open Geospatial Consortium.

-

11:35

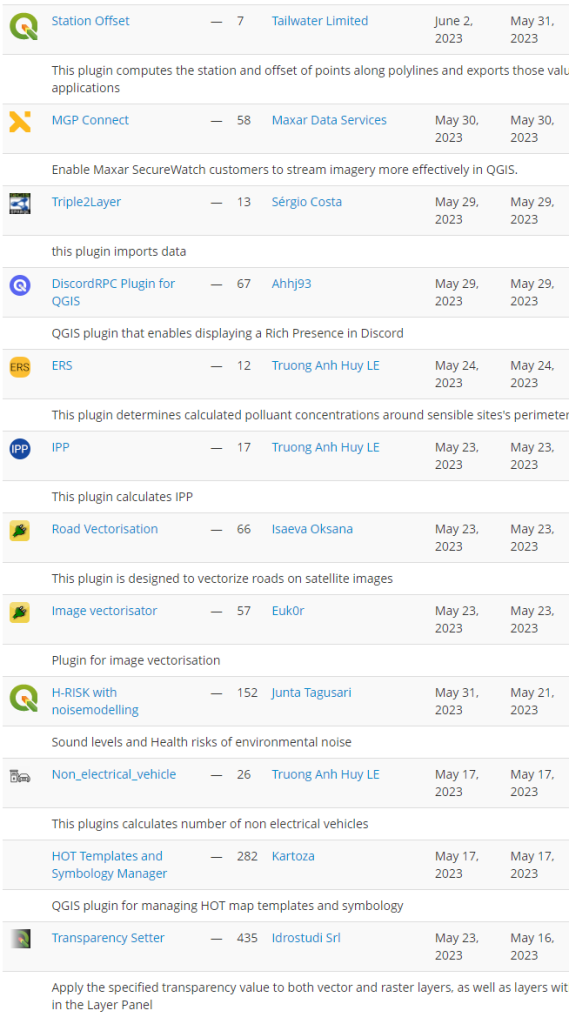

11:35 QGIS Blog: Plugin Update May 2023

on Planet OSGeo

In May, 22 new plugins were published in the QGIS plugin repository.

Here’s the quick overview in reverse chronological order. If any of the names or short descriptions piques your interest, you can find the direct link to the plugin page in the table below the screenshot.

- Station Offset: This plugin computes the station and offset of points along polylines and exports those values to CSV for other applications.
- MGP Connect: Enable Maxar SecureWatch customers to stream imagery more effectively in QGIS.
- Triple2Layer: This plugin imports data.
- DiscordRPC Plugin for QGIS: QGIS plugin that enables displaying a Rich Presence in Discord.
- ERS: This plugin determines calculated pollutant concentrations around sensitive sites’ perimeters.
- IPP: This plugin calculates IPP.
- Road Vectorisation: This plugin is designed to vectorize roads on satellite images.
- Image vectorisator: Plugin for image vectorisation.
- H-RISK with noisemodelling: Sound levels and health risks of environmental noise.
- Non_electrical_vehicle: This plugin calculates the number of non-electrical vehicles.
- HOT Templates and Symbology Manager: QGIS plugin for managing HOT map templates and symbology.
- Transparency Setter: Apply the specified transparency value to both vector and raster layers, as well as layers within the selected groups in the Layer Panel.
- DBGI: Creates geopackages that match the requirements of the DBGI project.
- StyleLoadSave: Load or save the active vector layer style.
- PixelCalculator: Interactively calculate the mean value of selected pixels of a raster layer.
- GISTDA sphere basemap: A plugin for adding base map layers from the GISTDA sphere platform ( [https:]] ).
- Adjust Style: Adjust the style of a map with a few clicks instead of altering every single symbol (and symbol layer) for many layers, categories or a number of label rules. A quick way to adjust the symbology of all layers (or selected layers) consistently, to check out how different colors / stroke widths / fonts work for a project, and to save and load styles of all layers – or even to apply styles to another project. With one click, it lets you: adjust the color of all symbols (including color ramps and any number of symbol layers) and labels using the HSV color model (rotate hue, change saturation and value); change line thickness (i.e. stroke width of all symbols / symbol borders); change the font size of all labels; replace a font family used in labels with another font family; save / load the styles of all layers at once into/from a given folder.
- APLS: This plugin performs Average Path Length Similarity.
- qaequilibrae: Transportation modeling toolbox for QGIS.
- QGPT Agent: QGPT Agent is an LLM assistant that uses an OpenAI GPT model to automate QGIS processes.
- FuzzyJoinTables: Join tables using the minimum Damerau-Levenshtein distance.
- Chandrayaan-2 IIRS: Generates reflectance from radiance data of the Imaging Infrared Spectrometer sensor of Chandrayaan-2.

-

9:59

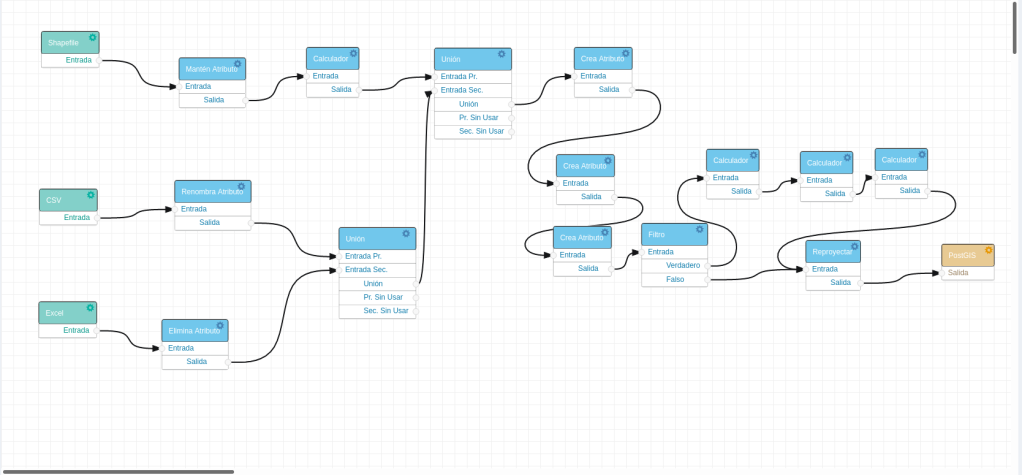

9:59 gvSIG Team: GeoETL in the gvSIG Online platform: Automating data transformations

on Planet OSGeo

A considerable advantage of gvSIG Online over other products on the market is its ETL. Thanks to its ETL, gvSIG Online can integrate with other data sources quickly and without any development work.

ETL is a plugin used to automate data transformation tasks, whether repetitive or not, so that the data do not have to be manipulated through code. This way, any user can manipulate data (geometrically or otherwise) or harmonize data coming from different sources and formats.

This is made possible by a canvas that represents the data transformation process graphically, in an easy and intuitive way.

If you want to discover the full power of GeoETL, don’t miss the video:

-

10:02

10:02 gvSIG Team: Getting to know gvSIG Mapps

on Planet OSGeo

Everyone knows gvSIG Desktop, the origin of the catalogue of solutions we call the gvSIG Suite. More and more organizations around the world are deploying gvSIG Online as their spatial data management and geoportal platform. And, although less well known, a growing number of organizations use gvSIG Mapps… either as an app for field data collection or as apps developed with its framework.

Want to know more? Here is what gvSIG Mapps really is:

-

20:36

Book Review: Hands-On Azure Digital Twins: A practical guide to building distributed IoT solutions

on James Fee GIS Blog

Finished reading: Hands-On Azure Digital Twins: A practical guide to building distributed IoT solutions by Alexander Meijers

I’ve been working with Digital Twins for almost 10 years, and as simple as the concept is, ontologies get in the way of implementations. The big question is how to implement one: how does one actually create a digital twin and deploy it to users?

Let’s be honest though: the Azure Digital Twin service/product is complex and requires a ton of work. It isn’t a matter of uploading a CAD drawing and connecting some data sources. In this case Meijers does a great job of walking through how to get started. But it isn’t for beginners; you’ll need previous experience with Azure Cloud services, Microsoft Visual Studio and the ability to debug code. But if you’ve got even a general understanding of these, the walkthroughs are detailed enough to learn the idiosyncrasies of the Azure Digital Twin process.

The book does take you through understanding what an Azure Digital Twin model is, how to upload models, developing relationships between models and how to query them. Once you understand this, Meijers dives into connecting the model to services, updating the Azure Digital Twin models and then connecting to Microsoft Azure Maps to view the model on maps. Finally, he showcases how these Digital Twins can become smart buildings, which is the hoped-for outcome of doing all the work.

The book has a lot of code examples, all of which can be downloaded from a GitHub repository. Knowledge of JSON and JavaScript, Python and .NET or Java is probably required. BUT, even if you don’t know how to code, this book is a good introduction to Azure Digital Twins. While there are pages of code examples, Meijers does a good job of explaining how and why you would use Azure Digital Twins. If you’re interested in how you can use a hosted Digital Twin service managed by a cloud provider, this is a great resource.

I felt like I knew Azure Digital Twins before reading this book, but it taught me a lot about how and why Microsoft did what they did with the service. Many aspects that caused me to scratch my head became clearer to me and I felt like this book gave me additional background that I didn’t have before. This book requires an understanding of programming but after finishing it I felt like Meijers’ ability to describe the process outside of code makes the book well worth it to anyone who wants to understand the concept and architecture of Azure Digital Twins.

Thoroughly enjoyed the book.

-

0:59

A GIS Degree

on James Fee GIS Blog

My son decided to change majors from biodesign to GIS. I had a short moment when I almost told him not to bring all this on himself, but then thought differently. I could use my years of experience to help him get the perfect degree in GIS, get a great job and still do what he wants.

He’s one semester into the program so he really hasn’t taken too many classes. There has been the typical Esri, SPSS and Google Maps discussion, but nothing getting into the weeds. Plus he’s taking Geography courses as well so he’s got that going for him. Since he’s at Arizona State University, he’s going through the same program as I did, but it’s a bit different. When I was at ASU, Planning was in the Architectural College. Now it’s tied with Geography in a new School of Geographical Sciences & Urban Planning.

I have to be honest: this is smart. I started my GIS career working for a planning department at a large city. The other thing I noticed is that a ton of my professors are still teaching. I mean, how awesome is that? I suddenly don’t feel so old anymore.

I’ve stayed out of his classes for the past semester in hopes that he can form his own thoughts on GIS and its applicability. I probably will continue to help him focus on where to spend his electives (more Computer Science and less History of the German Empire 1894-1910). He’s such a smart kid, I know he’s going to do a great job and he was one who spent time in that Esri UC Kids Fair back when I used to go to the User Conference. Now he could be getting paid to use Esri software or whatever tool best accomplishes his goals.

I plan to show him the Safe FME Minecraft Reader/Writer.


-

21:15

GIS and Monitors

on James Fee GIS Blog

If there is one constant in my GIS career, it is my interest in the monitor I’m using. From the days of being happy for a “flat screen” Trinitron monitor to today’s curved flat screens, so much has changed. My first GIS Analyst position probably had the worst monitor in the history of monitors. I can’t recall the name, but it had a refresh rate probably comparable to what was seen in the 1960s. It didn’t have great color balance either, so I ended up printing out a color swatch pattern from ArcInfo and taping it on my wall so I could know what color was what.

I stared for years at this monitor. No wonder I need reading glasses now!

Eventually I moved up in the world to where I no longer got hand-me-down hardware, and I started to get my first new equipment. The company I worked for at the time switched between Dell and HP for hardware, but generally it was dual 21″ Trinitron CRTs. For those too young to remember, they were the size of a small car and put off enough heat and radiation to probably shorten my life by 10 years. Yet I could finally count on them being color corrected by hardware/software and not feel like I was color blind.

It wasn’t sexy but it had a cool look to it. You could drop it flat to write on it like a table.

Over 11 years ago, I was given a Wacom DTU-2231 to test. You can read more about it at that link, but it was quite the monitor. I guess the biggest change between then and now is how little that technology took off. If you had asked me right after that post in 2010 what we’d be using in 2020, I would have said such technology would be everywhere. Yet we don’t see stylus-based monitors much at all.

These days my primary monitor is an LG UltraFine 24″ 4K. I pair it with another 24″ 4K monitor that I’ve had for years. Off to the other side is a generic Dell 24″ monitor my company provided. I find this setup works well for me; gone are the days when I had ArcCatalog and ArcMap open on two different monitors. Alas, two of the monitors are devoted to Outlook and WebEx Teams, just a sign of my current workload.

I’ve always felt that GIS people care more about monitors than most. A developer might be more interested in a Spotify plugin for their IDE, but a GIS Analyst cares most about the biggest, brightest and crispest monitor they can get their hands on. I don’t always use FME Workbench these days, but when I do, it is full screen on the most beautiful monitor I can have. Seems perfect to me.


-

19:00

Are Conferences Important Anymore?

on James Fee GIS Blog

Hey, SOTM is going on and I didn’t even know. The last SOTM I went to was in 2013, which was a blast. But I have to be honest: not only did this slip my mind, none of my feeds highlighted it to me. Not only that, apparently Esri is having a conference soon. (Wait for me to go ask Google when it is.) OK, they are having it next week. I used to be the person who went to as much as I could, either as an attendee or as an invited keynote speaker. The last Esri UC I went to was in 2015, 6 years ago. As I said, SOTM was in 2013. FOSS4G, 2011. I had to look it up: the last conference I went to that had any GIS in it was the 2018 Barcelona Smart City Expo.

So with the world opening back up, or maybe not, given whatever Greek-letter variant we are dealing with right now, I’ve started to think about what I might want to attend and the subject matter. At the end of the day, I feel like I got more value out of the conversations outside the convention center than inside. So I’ll probably go where I see a good subset of smart people hanging out. That’s why those old GeoWeb conferences that Ron Lake put on were so amazing: meeting a ton of smart people and enjoying the conversations, rather than reading PowerPoint slides in a dimly lit room.

Hopefully we can get back to that, just need to keep my eye out.


-

19:57

Unreal and Unity are the new Browsers

on James Fee GIS Blog

Someone asked me why I hadn’t commented on Cesium and Unreal getting together. Honestly, no reason. This is big news. HERE, where I work, is teaming up with Unity to bring the Unity SDK and the HERE SDK to automotive applications. I’ve talked about how we used the Mapbox Unity SDK at Cityzenith (though I have no clue if they still do). Google and Esri have them too. In fact, both the Unreal and Unity marketplaces are littered with data sources you can plug in.

HERE Maps with Unity

This is getting at the core of what these two platforms could be. Back in the day we had two browsers, Firefox and Internet Explorer 6. Inside each we had many choices of mapping platforms to use. From Google and Bing to Mapquest and Esri. In the end that competition to make the best API/SDK for a mapping environment drove a ton of innovation. What Google Maps looks like and does in 2021 vs 2005 is amazing.

This brings up the key to what I see happening here. We’ll see the mapping companies (or companies that have mapping APIs) deliver key updates to these SDKs (which today are pretty limited in scope) because they have to stay relevant. Not that web mapping is going away at any point, but true 3D worlds and true Digital Twins require power that browsers cannot provide, even in 2021. So this rush to become the Google Maps of 3D engines is real and will be fun to watch.

Interestingly, Google is an also-ran in the 3D engine space, so there is a lot of opportunity for the players who have invested, and continue to invest, in these markets without Google throwing unlimited R&D dollars against them. Of course, it only takes one press release to change all that, so don’t bet against Google.


-

15:00

Arrays in GeoJSON

on James Fee GIS Blog

So my last post was very positive. I figured out how to relate the teams that share a stadium to the stadium itself. This was important because I wanted to eliminate the redundant points that were on top of each other. For those who don’t recall, I have an example in this gist:

Now I mentioned that there were issues displaying this in GIS applications and was promptly told I was doing this incorrectly:

An array of <any data type> is not the same as a JSON object consisting of an array of JSON objects. If it would have been the first, I'd have pointed you (again) to QGIS and this widget trick [https:]] .

— Stefan Keller (@sfkeller) April 4, 2021

If you click on that tweet you’ll see basically that you can’t do it the way I want and I have to go back to the way I was doing it before:

Unfortunately, the best way is to denormalise. Redundant location in many team points.

— Alex Leith (@alexgleith) April 4, 2021

I had a conversation with Bill Dollins about it and he sums it up succinctly:

I get it, but “Do it this way because that’s what the software can handle” is an unsatisfying answer.

So I’m stuck. I honestly don’t care whether QGIS can read the data, because it can; it just isn’t optimal. What I do care about is an organized dataset in GeoJSON. So here is the question I can’t get a definitive answer to: “is the array I have above valid GeoJSON?” From what I’ve seen, yes. But nobody wants to go on record as saying so absolutely. I could say to hell with it and move forward, but I don’t want to go down a dead-end road.
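For reference, RFC 7946 only requires a Feature's `properties` member to be a JSON object or `null` and places no restriction on the values inside it, so as far as we can tell a nested array of team objects is valid GeoJSON; whether a given GIS application renders it usefully is a separate question. The sketch below keeps the nested form and shows the denormalised one-point-per-team alternative suggested above. The structure and the `denormalize` helper are hypothetical, and the coordinates are placeholders, not the real ballpark location.

```python
import json

# Hypothetical ballpark feature with a nested "teams" array inside properties.
ballpark = {
    "type": "Feature",
    "geometry": {"type": "Point", "coordinates": [0.0, 0.0]},  # placeholder coordinates
    "properties": {
        "name": "Salt River Fields at Talking Stick",
        "teams": [
            {"name": "Arizona Diamondbacks"},
            {"name": "Colorado Rockies"},
        ],
    },
}


def denormalize(feature):
    """Flatten a feature with a nested 'teams' array into one point feature per team,
    repeating the geometry (the 'redundant location' layout many GIS tools prefer)."""
    props = {k: v for k, v in feature["properties"].items() if k != "teams"}
    return [
        {"type": "Feature",
         "geometry": feature["geometry"],
         "properties": {**props, "team": team["name"]}}
        for team in feature["properties"].get("teams", [])
    ]


collection = {"type": "FeatureCollection", "features": denormalize(ballpark)}
print(json.dumps(collection, indent=2))
```

The nested form keeps one point per ballpark; the flattened form trades that for duplicated geometries that simpler readers can display without any special handling.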


-

23:42

GeoJSON Ballparks as JSON

On James Fee GIS Blog

In a way, it is good that Sean Gillies doesn’t follow me anymore, because I could hear his voice in my head as I was trying to do something really stupid with the project. But Sheldon helps frame what I should be doing against what I was doing:

tables? what the? add , teams:[{name:"the name", otherprop: …}, {name:…}] to each item in the ballparks array and get that relational db BS out of your brain

Sheldon (@tooshel), April 2, 2021

Exactly! What the hell? Why was I trying to do something so stupid when the whole point of this project is baseball ballparks in GeoJSON? Here is the problem in a nutshell and how I solved it. First off, let’s simplify the problem down to just one ballpark. Salt River Fields at Talking Stick is the Spring Training facility for both the Arizona Diamondbacks and the Colorado Rockies. Not only that, but Fall League and Rookie League teams play there too, and there are probably even more that I haven’t researched yet. Anyway, this is what GeoJSON Ballparks looks like today when you just want to see that one stadium.

Let’s just say I backed myself into this corner by starting with only MLB ballparks, none of which were shared between teams at the time.


It’s a mess, right? Overlapping points and so many opportunities to screw up names. My old-school thought was to just create a one-to-many relationship between the GeoJSON points and some external table. Madness! Seriously, what was I thinking? Sheldon is right: I should be using a JSON array for the teams. Look how much nicer it all looks when I do this!

Look how nice that all is! It’s so easy to read, and it keeps the focus on the ballparks.

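Sheldon’s suggestion amounts to grouping the old one-point-per-team features by ballpark and folding the team fields into a `teams` array. A minimal Python sketch, assuming features are grouped by ballpark name and that `Ballpark`, `Team`, and `League` are the (hypothetical) property names; it is an illustration, not the code behind GeoJSON Ballparks.

```python
import json

# Hypothetical "before" data: one point per team, stacked on the same spot.
FLAT = {
    "type": "FeatureCollection",
    "features": [
        {
            "type": "Feature",
            "geometry": {"type": "Point", "coordinates": [-111.885, 33.545]},
            "properties": {
                "Ballpark": "Salt River Fields at Talking Stick",
                "Team": "Arizona Diamondbacks",
                "League": "Cactus League",
            },
        },
        {
            "type": "Feature",
            "geometry": {"type": "Point", "coordinates": [-111.885, 33.545]},
            "properties": {
                "Ballpark": "Salt River Fields at Talking Stick",
                "Team": "Colorado Rockies",
                "League": "Cactus League",
            },
        },
    ],
}


def nest_teams(collection, park_key="Ballpark", team_keys=("Team", "League")):
    """Collapse per-team features into one feature per ballpark.

    The geometry and non-team properties come from the first feature seen
    for each ballpark; team fields are gathered into a "teams" array in
    input order (dicts keep insertion order in Python 3.7+).
    """
    parks = {}
    for feature in collection["features"]:
        props = feature["properties"]
        name = props[park_key]
        if name not in parks:
            park_props = {k: v for k, v in props.items() if k not in team_keys}
            park_props["teams"] = []
            parks[name] = {
                "type": "Feature",
                "geometry": feature["geometry"],
                "properties": park_props,
            }
        team = {k: props[k] for k in team_keys if k in props}
        parks[name]["properties"]["teams"].append(team)
    return {"type": "FeatureCollection", "features": list(parks.values())}


if __name__ == "__main__":
    print(json.dumps(nest_teams(FLAT), indent=2))
```

Grouping by name rather than by coordinates is a design choice here: two teams at the same park may have been digitized at slightly different points, but they should share one ballpark name.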

As I said in the earlier blog post:

The problem now is so many teams, especially in spring training, minor leagues and fall ball, share stadiums, that in GeoJSON-Ballparks, you end up with multiple dots on top of each other. No one-to-many relationship that should happen.

The project had pivoted in a way I hadn’t anticipated back in 2014, and it sure was a mess to maintain. So now I can focus on updating the project for the Minor League Baseball realignment that happened this year and get an updated dataset on GitHub very soon.

One consequence of this nested array is that many GIS tools don’t understand how to display the data. Take a look at geojson.io:

geojson.io compresses the array into one big JSON-formatted string. QGIS and GitHub do this too. It’s an issue I’m willing to live with. Bill Dollins shared the GeoJSON spec with me to show that the way I’m doing it is correct:

3.2. Feature Object

A Feature object represents a spatially bounded thing. Every Feature object is a GeoJSON object no matter where it occurs in a GeoJSON text.

o A Feature object has a "type" member with the value "Feature".
o A Feature object has a member with the name "geometry". The value of the geometry member SHALL be either a Geometry object as defined above or, in the case that the Feature is unlocated, a JSON null value.
o A Feature object has a member with the name "properties". The value of the properties member is an object (any JSON object or a JSON null value).

ANY JSON OBJECT! So formatting the files this way is correct, and it’s the way it should be done. I’m going to push forward on cleaning up GeoJSON Ballparks and let the GIS tools try to catch up.
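The three rules quoted from the spec are easy to check mechanically. This Python sketch tests only those members (`type`, `geometry`, `properties`); it is not a full RFC 7946 validator, the geometry check is deliberately loose, and the function name is made up for illustration. A `properties` object holding a nested `teams` array passes, because the spec only requires `properties` to be a JSON object or null.

```python
import json


def has_valid_feature_members(obj):
    """Check the Feature-object rules quoted from RFC 7946, section 3.2.

    Only "type", "geometry", and "properties" are examined. The geometry
    check is loose: any JSON object with a "type" member, or null.
    """
    if not isinstance(obj, dict) or obj.get("type") != "Feature":
        return False
    if "geometry" not in obj or "properties" not in obj:
        return False
    geometry = obj["geometry"]
    if geometry is not None and not (isinstance(geometry, dict) and "type" in geometry):
        return False
    # "any JSON object or a JSON null value": nested arrays inside are fine.
    return obj["properties"] is None or isinstance(obj["properties"], dict)


if __name__ == "__main__":
    # Hypothetical ballpark feature; coordinates are placeholders.
    text = """{"type": "Feature",
               "geometry": {"type": "Point", "coordinates": [-111.885, 33.545]},
               "properties": {"Ballpark": "Salt River Fields at Talking Stick",
                              "teams": [{"Team": "Arizona Diamondbacks"},
                                        {"Team": "Colorado Rockies"}]}}"""
    print(has_valid_feature_members(json.loads(text)))
```

Whether a given tool renders that nested array nicely is a separate question from whether the file is valid; the check above only speaks to the second.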

-

23:15

GeoJSON Ballparks and MLB Minor League Realignment

On James Fee GIS Blog

**UPDATE**: See the plan.

Boy, where to start? First, for those who haven’t been following, this happened over the winter.

Major League Baseball announced on Friday (February 12, 2021) a new plan for affiliated baseball, with 120 Minor League clubs officially agreeing to join the new Professional Development League (PDL). A full list of Major League teams and their new affiliates, one for each level of full-season ball, along with a complex league (Gulf Coast and Arizona) team, can be found below.

Minor League Baseball


What does that mean? Well, for GeoJSON Ballparks it means basically every minor league team needs some modification. At a minimum, the old minor league names have changed. Take the Pacific Coast League: after existing for over 118 years, it is now part of Triple-A West, which couldn’t be a more boring name. All up and down the minor leagues, the league names now just reflect the level the teams play at. And some teams have moved from AAA to Single-A and all around.

I usually wait until Spring Training is just about over to update the minor league teams, but this year that makes almost zero sense. I’ve sort of backed myself into a spatial problem I never intended when I started. Basically, the project initially was just MLB teams and their ballparks. The key point is that the teams drove the dataset, not the ballparks, even though the project’s title clearly says it is about ballparks. As long as nobody shared a ballpark, this worked out great. The problem now is that so many teams, especially in spring training, the minor leagues, and fall ball, share stadiums that in GeoJSON-Ballparks you end up with multiple dots on top of each other, with none of the one-to-many relationship there should be.

So I’m going to use this minor league realignment to fix what I should have fixed years ago. Moving forward, there will be two files in this dataset: one GeoJSON file with the ballpark locations, and a CSV (or other format) file containing the teams. Then we’ll just do the old-fashioned relate between the two, and the world is better again.
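The old-fashioned relate described here is a one-to-many join on a shared key. A minimal Python sketch using only the standard library, assuming a hypothetical `ballpark_id` column in the CSV and a matching property on each GeoJSON feature (neither is part of the actual dataset). It leaves the features untouched and pairs each one with its team rows, which is roughly what a GIS relate does.

```python
import csv
import io

# Hypothetical inputs: ballpark locations and a teams table sharing "ballpark_id".
BALLPARKS = {
    "type": "FeatureCollection",
    "features": [
        {
            "type": "Feature",
            "geometry": {"type": "Point", "coordinates": [-111.885, 33.545]},
            "properties": {"ballpark_id": "1", "Ballpark": "Salt River Fields at Talking Stick"},
        }
    ],
}
TEAMS_CSV = """ballpark_id,Team
1,Arizona Diamondbacks
1,Colorado Rockies
"""


def relate(ballparks, teams_csv, key="ballpark_id"):
    """Pair each ballpark feature with every CSV row sharing its key (one-to-many)."""
    rows_by_park = {}
    for row in csv.DictReader(io.StringIO(teams_csv)):
        rows_by_park.setdefault(row[key], []).append(row)
    return [
        (feature, rows_by_park.get(feature["properties"][key], []))
        for feature in ballparks["features"]
    ]


if __name__ == "__main__":
    for feature, teams in relate(BALLPARKS, TEAMS_CSV):
        names = ", ".join(row["Team"] for row in teams)
        print(f'{feature["properties"]["Ballpark"]}: {names}')
```

Note that `csv.DictReader` yields every value as a string, so the key stored in the GeoJSON properties has to be a string too, or the lookup silently finds nothing.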

I’m going to fork GeoJSON-Ballparks into a new project and right the wrongs I have done against good spatial data management. I’m finally ready to play centerfield!

-

18:46

I’m Here at HERE

On James Fee GIS Blog

Last Tuesday I started at HERE Technologies with the Professional Services group in the Americas. I’ve probably used data and services from HERE and its legacy companies for most of my career, so this is a really cool opportunity to work with a mobile data company.

I’m really excited about working with some of their latest data products including Premier 3D Cities (I can’t escape Digital Twins).

Digital Twins at HERE

-

19:36

Digital Twins and Unreal Engine

On James Fee GIS Blog

I’ve had a ton of experience with Unity and Digital Twins, but I have been paying attention to Unreal Engine. I think the open nature of Unity is probably better suited to the current Digital Twin market, but competition is so important for innovation. This project, where Unreal Engine was used to create a digital clone of Adelaide, is striking, but the article just leaves me wanting so much more.

A huge city environment results in a hefty 3D model. Having strategies in place to ease the load on your workstation is essential. “Twinmotion does not currently support dynamic loading of the level of detail, so in the case of Adelaide, we used high-resolution 3D model tiles over the CBD and merged them together,” says Marre. “We then merged a ring of low-resolution tiles around the CBD and used the lower level of detail tiles the further away we are from the CBD.”
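The tiling strategy Marre describes, high-resolution tiles over the CBD and progressively coarser tiles farther out, boils down to choosing a level of detail from a tile’s distance to a center point. A Python sketch under stated assumptions: coordinates are in a projected CRS measured in meters, distance is plain Euclidean (`math.dist` needs Python 3.8+), and the distance bands are invented for illustration rather than taken from the Adelaide project.

```python
import math

# Hypothetical distance bands in meters: (max distance from CBD, LOD).
# LOD 0 is the most detailed; these thresholds are illustrative only.
LOD_BANDS = [(2_000, 0), (5_000, 1), (15_000, 2)]
COARSEST_LOD = 3


def lod_for_tile(tile_center, cbd_center, bands=LOD_BANDS, coarsest=COARSEST_LOD):
    """Return the level of detail for a tile based on its distance to the CBD.

    Points are (x, y) tuples in a meter-based projected CRS. Bands must be
    sorted by increasing distance; anything beyond the last band gets the
    coarsest level.
    """
    distance = math.dist(tile_center, cbd_center)
    for max_distance, lod in bands:
        if distance <= max_distance:
            return lod
    return coarsest


if __name__ == "__main__":
    cbd = (0.0, 0.0)
    for tile in [(500.0, 500.0), (3_000.0, 4_000.0), (10_000.0, 0.0), (40_000.0, 0.0)]:
        print(tile, "-> LOD", lod_for_tile(tile, cbd))
```

In a real scene the bands would be tuned to tile sizes and the rendering budget; the only point here is that detail falls off with distance from the area of interest.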

Well, that’s how we did it at Cityzenith. Tiles are the only way to get the detail one needs in these 3D worlds, and they are something geospatial practitioners are very used to dealing with in their slippy maps. The eye candy in that Adelaide project is amazing. Of course, scaling out one city is hard enough, but doing so across a country or the globe is another matter. Still, this is an amazing start.
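For comparison with the 2D side, the slippy-map tiling geospatial practitioners know is the standard XYZ (Web Mercator) scheme, where a longitude/latitude pair maps to a tile column and row at each zoom level. This Python sketch implements that well-known formula for orientation only; it says nothing about how Cityzenith or Twinmotion actually address 3D tiles.

```python
import math


def lonlat_to_tile(lon, lat, zoom):
    """Return the (x, y) XYZ tile containing a WGS84 point at a zoom level.

    Uses the Web Mercator slippy-map formula. Latitude must lie within about
    +/-85.0511 degrees, the limit of the Web Mercator square.
    """
    n = 2 ** zoom
    lat_rad = math.radians(lat)
    x = int((lon + 180.0) / 360.0 * n)
    # asinh(tan(lat)) equals ln(tan(lat) + sec(lat)), the Mercator y value.
    y = int((1.0 - math.asinh(math.tan(lat_rad)) / math.pi) / 2.0 * n)
    # Clamp so lon = 180 (or the latitude limit) stays on the last tile.
    return min(max(x, 0), n - 1), min(max(y, 0), n - 1)


if __name__ == "__main__":
    for zoom in range(4):
        print(zoom, lonlat_to_tile(-111.885, 33.545, zoom))
```

Each zoom level doubles the tile count along both axes, which is why a pyramid of tiles can serve a whole city (or globe) while only loading the detail the current view needs.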

Seeing Epic take Twinmotion and scale it out this way is very exciting because, as you can see from the video above, it really does look photorealistic.

But this gets at the core of where Digital Twins have failed. It is so very easy to do the above: create an amazing-looking model of a city and drape imagery across it. It is a very different beast to create an actual Digital Twin, where the buildings are not only linked to external IoT devices and services but also import BIM models and generalize them as needed. They do some rudimentary shadow analysis, which is somewhat interesting, but this kind of thing is so easy to do, and there are so many tools for it, that all this effort to create a photorealistic city seems wasted.

I think users would trade photorealistic cities for detailed IoT service integration, but I will watch Aerometrex continue to develop this. Digital Twins are still stuck sharing videos on Vimeo and YouTube, trying to create amazingly realistic cities when all people want is visualization and analysis of IoT data. That said, Aerometrex has done an amazing job building this view.

-

22:01

Moving Towards a Digital Twin Ecosystem

On James Fee GIS Blog

Smart Cities really start to become valuable when they integrate with Digital Twins. Smart Cities do really well with transportation networks and adjusting when things happen. Take, for example, construction on an important Interstate highway connecting the city core with the suburbs: it causes backups, and a smart city can adjust traffic lights, rail, and other modes of transportation to help alleviate the problem. This works really well because the transportation systems talk to each other, and decisions can be made to shift commutes toward other modes of transportation or other routes. Unfortunately, Digital Twins don’t do a great job talking to Smart Cities.

Photo by Victor Garcia on Unsplash


A few months ago I talked about Digital Twins and messaging. The idea was that:

Digital twins require connectivity to work. A digital twin without messaging is just a hollow shell, it might as well be a PDF or a JPG. But connecting all the infrastructure of the real world up to a digital twin replicates the real world in a virtual environment. Networks collect data and store it in databases all over the place, sometimes these are SQL-based such as Postgres or Oracle, and other times they are as simple as SQLite or flat-file text files. But data should be treated as messages back and forth between clients.

This was in the context of a Digital Twin talking to services that might not be hardware-based, but the idea holds up for how and why a Digital Twin should be messaging the Smart City at large. Whatever benefits a Digital Twin gains from an ecosystem that collects and analyzes data for decision-making, those benefits multiply when those systems connect to other Digital Twins. But think beyond a group of Digital Twins, to the benefit for the Smart City when all these buildings are talking to each other and to the city to make better decisions about energy use, transportation, and other shared infrastructure across the city or even the region (where multiple Smart Cities talk to each other).
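To make “data as messages” concrete, here is a toy publish/subscribe sketch in Python in which a building’s twin publishes readings and a city-level service subscribes to them. Everything here (the `MessageBus` class, topic names like `energy/demand`, the payload fields) is hypothetical and in-process; a real deployment would sit on an actual messaging system, and this does not describe any particular Digital Twin platform.

```python
import time
from dataclasses import dataclass, field
from typing import Callable, Dict, List


@dataclass
class Message:
    """A hypothetical message envelope: what, from whom, and when."""
    topic: str
    source: str
    payload: dict
    timestamp: float = field(default_factory=time.time)


class MessageBus:
    """Toy in-process publish/subscribe bus (illustrative only)."""

    def __init__(self) -> None:
        self._subscribers: Dict[str, List[Callable[[Message], None]]] = {}

    def subscribe(self, topic: str, handler: Callable[[Message], None]) -> None:
        self._subscribers.setdefault(topic, []).append(handler)

    def publish(self, message: Message) -> int:
        """Deliver a message to every handler on its topic; return how many ran."""
        handlers = self._subscribers.get(message.topic, [])
        for handler in handlers:
            handler(message)
        return len(handlers)


if __name__ == "__main__":
    bus = MessageBus()
    city_log: List[Message] = []
    # A city-level service listens for building energy demand.
    bus.subscribe("energy/demand", city_log.append)
    # A building twin publishes a reading; the city receives it.
    bus.publish(Message("energy/demand", "building-twin-42", {"kw": 310}))
    print(len(city_log), city_log[0].payload)
```

The design point is the decoupling: the building twin never needs to know who is listening, so adding another twin or another city service means adding a subscriber, not rewiring every pair of systems.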

When all these buildings talk to each other, they can help a city plan, grow and evolve into a clean city.


What we don’t have is a common data environment (CDE) that cities can use. We have seen data sharing on a small scale within developments, but not on a city-wide or regional scale. To do this, we need to agree on model standards that allow Digital Twins not only to talk to each other (something open like Bentley’s iTwin.js) but also to share ontologies. Then we need that Smart City CDE where data is shared, stored, and analyzed at a large scale.