You can read the post on the La Minute blog for more information about RSS!

Channels

3759 items (18 unread) in 55 channels

In the press (1 unread)

- Décryptagéo, l'information géographique
- Cybergeo
- Revue Internationale de Géomatique (RIG)
- SIGMAG & SIGTV.FR - Un autre regard sur la géomatique (1 unread)
- Mappemonde

From the vendors (1 unread)

- Imagerie Géospatiale
- Toute l’actualité des Geoservices de l'IGN
- arcOrama, un blog sur les SIG, ceux d ESRI en particulier (1 unread)
- arcOpole - Actualités du Programme
- Géoclip, le générateur d'observatoires cartographiques
- Blog GEOCONCEPT FR

French-speaking geomatics web (16 unread)

- Géoblogs (GeoRezo.net)
- Conseil national de l'information géolocalisée
- Geotribu (1 unread)
- Les cafés géographiques (3 unread)
- UrbaLine (le blog d'Aline sur l'urba, la géomatique, et l'habitat)
- Icem7
- Séries temporelles (CESBIO) (2 unread)
- Datafoncier, données pour les territoires (Cerema)
- Cartes et figures du monde (3 unread)
- SIGEA: actualités des SIG pour l'enseignement agricole
- Data and GIS tips
- Neogeo Technologies (2 unread)
- ReLucBlog
- L'Atelier de Cartographie
- My Geomatic
- archeomatic (le blog d'un archéologue à l’INRAP)
- Cartographies numériques (5 unread)
- Veille cartographie
- Makina Corpus
- Oslandia
- Camptocamp
- Carnet (neo)cartographique
- Le blog de Geomatys
- GEOMATIQUE
- Geomatick
- CartONG (actualités)

Séries temporelles (CESBIO) (2 unread)

-

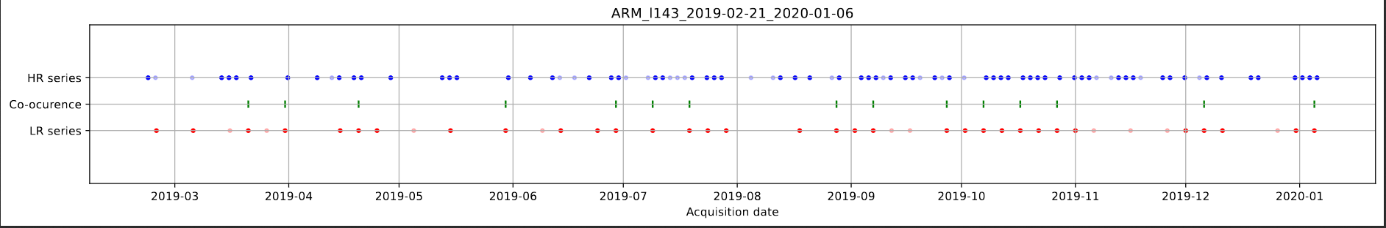


15:01 Let’s ask Copernicus to keep S2A operational after S2C launch

on Séries temporelles (CESBIO)

The launch of Sentinel-2C (S2C) is scheduled for 4 September 2024, next week! After a 3-month commissioning phase, S2C will, as I understand it, replace S2A to fulfil the Sentinel-2 mission together with S2B. S2B will later be replaced by S2D. The current plan is to keep S2A as a redundant satellite, in case something happens to one of the two others. It seems likely that S2A will no longer be used to acquire images once S2C is operational, although a few of us still hope it will.

I guess the decision not to keep S2A operational would be for cost reasons. The operating costs are high: although I have no information on them, I guess they are of the order of one or two tens of millions of euros per year. My reference is the SPOT4 (Take5) experiment, which cost ~1 M€ for a few months with a limited amount of data. In the case of S2, the data volumes to download, process and distribute are huge, and ESA would also need to monitor data quality and maintain tighter orbit and attitude control… Anyway, this is much less than the cost of a satellite (Sentinel-2B's price was a few hundred M€).

I am very proud that Europe was able to build the Sentinel-2 mission, with a 5-day revisit and 20 years of operations planned from the first launch. But we could do even better at a limited cost. I am writing this blog post to try to convince scientific, public and private users that a 5-day revisit is not enough. We should therefore try to set up a campaign asking ESA/EU to reconsider the decision to end S2A imaging operations when S2C becomes operational. Help, feedback and ideas are welcome!

Revisit needs

Correlation of start of season determination as a function of the cloud free observation frequency in Germany. « 2018 was a hot and dry year with exceptionally low precipitation and cloud cover ». From Kowalski et al, 2020 : [https:]]

As an example, here are the cloud-free revisit needs expressed by the vegetation monitoring community:

- Land cover classification : a clear observation per month

- Biomass/Yield estimation : a clear observation per fortnight

- Phenology (start and end of season, flowering…) : a clear observation per week

Waters, especially coastal waters, tend to change even faster, with the additional drawback of being affected by sun glint (the reflection of the sun on the water surface) on one third to one half of the images.

Very recently, several papers have studied the biases due to the insufficient and irregular observations from space optical missions.

5 days revisit is not enough

- Bayle, A., Gascoin, S., Berner, L.T. and Choler, P. (2024), Landsat-based greening trends in alpine ecosystems are inflated by multidecadal increases in summer observations. Ecography e07394. [https:]]

(As Landsat observation frequency increased over the decades, part of the observed greening of mountains turns out to be due to the increase in revisit frequency, which is still not frequent enough.)

- Langhorst, T., Andreadis, K. M., & Allen, G. H. (2024). Global cloud biases in optical satellite remote sensing of rivers. Geophysical Research Letters, 51, e2024GL110085. [https:]]

The global average cloud cover is 70%, with of course large variations across seasons and locations. This means that, on average, two observations out of three of a given pixel are cloudy. Observing a large area simultaneously cloud-free is even more difficult: I don't have statistics on that, but roughly only 10% of Sentinel-2 products over 100×100 km tiles are fully cloud-free.
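A back-of-the-envelope sketch helps show why a 5-day revisit falls short of the needs listed above. It assumes, optimistically, that each acquisition is cloudy with the same probability (0.7, the global average quoted above) independently of the others; real cloud cover persists for days, so actual odds are worse. The function name is illustrative.

```python
def prob_at_least_one_clear(window_days, revisit_days, p_cloudy=0.7):
    """Probability of at least one cloud-free acquisition within a time window.

    Simplifying assumptions: one acquisition every `revisit_days`, and each
    acquisition is cloudy with probability `p_cloudy`, independently of the
    others (real clouds are correlated in time, so this is optimistic).
    """
    n_obs = window_days // revisit_days  # minimum number of acquisitions in the window
    return 1.0 - p_cloudy ** n_obs


# Needs listed by the vegetation monitoring community, with a 5-day revisit
for need, window in [("monthly", 30), ("fortnightly", 14), ("weekly", 7)]:
    print(f"{need:12s} {prob_at_least_one_clear(window, 5):.2f}")
```

Under these assumptions, a monthly need (at least 6 acquisitions) is met about 88% of the time, a fortnightly one (at least 2) about 51%, and a weekly one (at least 1) only 30%.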

Because the great majority of images contain clouds, our users have asked for periodic cloud-free syntheses. At the THEIA land data center, we produce monthly surface reflectance syntheses (named « Level3A » products). Our WASP processor computes a weighted average of all the cloud-free surface reflectances found during 46 days (from 23 days before to 23 days after the synthesis date). When no cloud-free data is available, we raise a flag and provide one of the cloudy reflectance values acquired during the period. These regions appear in white (but don't confuse them with snow in mountains).
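The principle of such a synthesis can be sketched for a single pixel and band. This is not WASP's code: the weighting used here (weights decreasing linearly with the distance to the synthesis date) is an assumption for illustration, and WASP's actual weights may account for other factors. `Observation` and `composite_pixel` are hypothetical names.

```python
from dataclasses import dataclass

HALF_WINDOW_DAYS = 23  # 46-day window: 23 days before to 23 days after the synthesis date


@dataclass
class Observation:
    day: int            # acquisition date, in days relative to the synthesis date
    reflectance: float  # surface reflectance in one spectral band
    cloudy: bool        # True if the cloud mask flags this pixel


def composite_pixel(observations):
    """Return (reflectance, no_clear_data_flag) for one pixel of a periodic synthesis."""
    in_window = [o for o in observations if abs(o.day) <= HALF_WINDOW_DAYS]
    clear = [o for o in in_window if not o.cloudy]
    if not clear:
        # No cloud-free data: raise the flag and fall back on a cloudy value (if any)
        return (in_window[0].reflectance if in_window else None), True
    # Hypothetical weights: the closer to the synthesis date, the larger the weight
    weights = [HALF_WINDOW_DAYS + 1 - abs(o.day) for o in clear]
    weighted_sum = sum(w * o.reflectance for w, o in zip(weights, clear))
    return weighted_sum / sum(weights), False
```

Keeping a fallback value together with a flag, rather than leaving a gap, mirrors the behaviour described above: flagged pixels still carry a (cloudy) value and show up as white areas.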

Please keep in mind that the syntheses use 46 days, not 30. They therefore do not meet any of the needs listed above. The 46-day duration was tuned so that, on average, fewer than 10% of the pixels of a synthesis remain cloudy on every date. Here are some examples.

South-Western EU

Over western Europe, the top left image shows a summer drought period, with few always-cloudy pixels. However, some are visible in the east of Belgium and the Netherlands. The winter images have large regions with no cloud-free data, and even in April 2023 a cloudy region remains in the east of France. You might have noticed that the remaining regions often have a trapezoidal shape. This is because Sentinel-2 swaths overlap: cloud-free pixels are of course more likely to be missed where we have only one observation every 5 days than where overlapping swaths give us two. More data are available at THEIA: [maps.theia-land.fr]

Germany: July 2022 (drought in Europe), December 2022, January 2022 (a dry January), April 2023

South-Western Europe is blessed with nice weather (when it's not too hot), but Germany has a lot of clouds. Our colleagues from DLR also process Level3A syntheses with our tools. Here are some examples. You can also see for yourselves at this address: [https:]

Norway: April 2023, November 2023

Norway's mapping agency (Kartverket) also uses our software, MAJA and WASP, to produce countrywide syntheses, but they had to change the parameters to obtain a correct result. Summer syntheses are produced each year, but they often need manual post-processing to improve the results where some clouds remain. This is despite the fact that the orbits fully overlap in Norway.

Consequences

[https:]

The main consequence of persistent cloudiness on S2 images is that our observations fall well short of the expressed needs, except in the sunniest countries. As a result, studies and retrievals are not as accurate as they could be, and biases and noise appear. In my view, the main issue is that persistent clouds introduce random unreliability, which prevents us from providing an operational service.

S2 quicklook thumbnails in June 2019 on Toulouse tile (31TCJ)


One of the most acute cases I remember happened in June 2019, when our region near Toulouse suffered a long and early heat wave, but clouds were present every 5 days in the morning, when S2A or S2B were acquiring data. During a heat wave, it must have been very hard to explain to local customers that the product did not work because of clouds.

As a result, insufficient revisit hampers the development of services based on optical images.

There are some workarounds: Landsat 8 and 9 (but the resolution is 30 m), Sentinel-1 (but SAR provides completely different information), and Planet (but only 4 bands, very expensive if you need to work at country scale, and not the same quality). Moreover, Planet uses Sentinel-2 a lot to improve its products, so better availability of S2 data would also improve the quality of Planet data and of the soon-to-be-launched Earth Daily constellation.

Dear EU, please keep S2A operational!

That's why it would be a pity not to keep using S2A after the S2C commissioning phase. With 3 satellites, with S2A passing for instance two days after S2B, we could produce cloud-free syntheses every 20 days, based on only 30 days of data. We would be closer to the needs, and would greatly reduce the unreliability of S2 services.
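The "30 days of data" figure can be sanity-checked by counting acquisitions. This sketch assumes each Sentinel-2 unit repeats its ground track every 10 days, with S2B and S2C half a cycle apart and S2A two days after S2B as in the scenario above; the phasing values and the function name are illustrative, and only a point seen from a single track is considered.

```python
REPEAT_CYCLE_DAYS = 10  # each Sentinel-2 satellite flies over the same track every 10 days


def count_acquisitions(window_days, phases, start_day=0):
    """Count overpasses of a point seen from one track during [start_day, start_day + window_days).

    phases: set of overpass days (modulo the repeat cycle), one per satellite.
    """
    return sum(
        1
        for day in range(start_day, start_day + window_days)
        if day % REPEAT_CYCLE_DAYS in phases
    )


two_satellites = {0, 5}       # S2B and S2C, half a cycle apart
three_satellites = {0, 2, 5}  # plus S2A, two days after S2B (illustrative phasing)
print(count_acquisitions(46, two_satellites), count_acquisitions(30, three_satellites))
```

With two satellites, a 46-day window holds 9 or 10 acquisitions depending on where it starts, while with three satellites any 30-day window holds 9: roughly the same number of chances to catch a clear view, in two thirds of the time.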

Of course, this means an increase in data volume and processing costs, but each year it amounts to only a few percent of the cost of one satellite. If that is really too much, let's do it over Europe only, since S2 is funded by Europe. Maybe the EU fears that if we ask for it, users of the other Sentinel missions will ask for the same. But S1 is not affected by clouds (and S1B has been lost), and S3 already has a daily overpass. There are therefore good reasons to operate a third satellite for the S2 mission only.

If you agree or disagree with me, or if you have more arguments to provide, please add a comment to this page !

-


18:55 First LiDAR HD DTMs

on Séries temporelles (CESBIO). Attachment: [download]

IGN is currently announcing the release of the first digital terrain models (DTMs) derived from the LiDAR HD point clouds.

[LiDAR HD thread] You were waiting for them: the first #LiDARHD digital models are now available and open to all as #opendata

Charente-Maritime (block FK), Nord (KA) and Pas-de-Calais (JA) are the first… pic.twitter.com/1UFOZabImE

IGN France (@IGNFrance) July 10, 2024

In my opinion, this example is poorly chosen, since a DTM of the Gravelines citadel of equivalent quality was already available in the RGE ALTI® 1m, open access since 1 January 2021. In this area, the RGE ALTI® metadata indicate that the source is a LiDAR with an acquisition density of 1 point per m². These fortifications could therefore already be seen in my RGE ALTI visualization interface.

Comparison of the RGE ALTI® 1m and LiDAR HD DTMs over the Gravelines fort

Indeed, the RGE ALTI® is a composite of several data sources… and the quality of the final product varies widely! In this case, Gravelines was already covered by lidar, like the rest of the coastline.

Sources of the RGE ALTI® (yes, without the legend): correlation (in general), lidar (coastline, rivers and cities) or radar (mountains). For more information, see Annex B of this document [https:]]

On the coastline, the new LiDAR HD DTM is of greater interest where the land surface has changed recently. For example, the RGE ALTI® and LiDAR HD DTMs can be compared to observe the evolution of the Slack dunes near Wimereux. Unfortunately, I do not know the acquisition dates of these two products.
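Such a comparison boils down to a DEM of difference (DoD): subtract the older DTM from the newer one and sum the changes. Here is a minimal sketch on plain Python grids, assuming both DTMs have already been resampled to the same grid and vertical reference; the 0.2 m detection threshold is an arbitrary illustrative value, not a documented accuracy of either product, and the function name is hypothetical.

```python
def dem_of_difference(older, newer, cell_area_m2, threshold_m=0.2):
    """Return (eroded_m3, deposited_m3) between two co-registered DTMs.

    Elevation changes smaller than `threshold_m` (a hypothetical level of
    detection) are treated as noise and ignored.
    """
    eroded = deposited = 0.0
    for row_old, row_new in zip(older, newer):
        for z_old, z_new in zip(row_old, row_new):
            dz = z_new - z_old  # positive = surface raised (e.g. dune accretion)
            if dz >= threshold_m:
                deposited += dz * cell_area_m2
            elif dz <= -threshold_m:
                eroded += -dz * cell_area_m2
    return eroded, deposited
```

Without the acquisition dates, such volumes can only be read as a net change between two unknown epochs, not as a rate.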

[https:]]

But the real value of the LiDAR HD DTM lies rather in areas where the data used to build the RGE ALTI® were not lidar. For example, the Pas-de-Calais department is home to the largest quarry in France, the Carrières du Boulonnais.

Comparison of the RGE ALTI® 1m and LiDAR HD DTMs over the Carrières du Boulonnais

Looking forward to the release of the other regions of France!

Header photo: close-up of the central part of the Ferques quarry (Pas-de-Calais) by Pierre Thomas. Source: Planet Terre [https:]]

-


2:27 The Vénéon flood: what do satellite images tell us?

on Séries temporelles (CESBIO). Attachment: [download]

On 21 June 2024, the torrential flood of the Vénéon and its tributary, the Étançons torrent, devastated the hamlet of La Bérarde in the Écrins massif. The flood resulted from heavy rain and snowmelt, and may have been aggravated by the drainage of a small supraglacial lake.

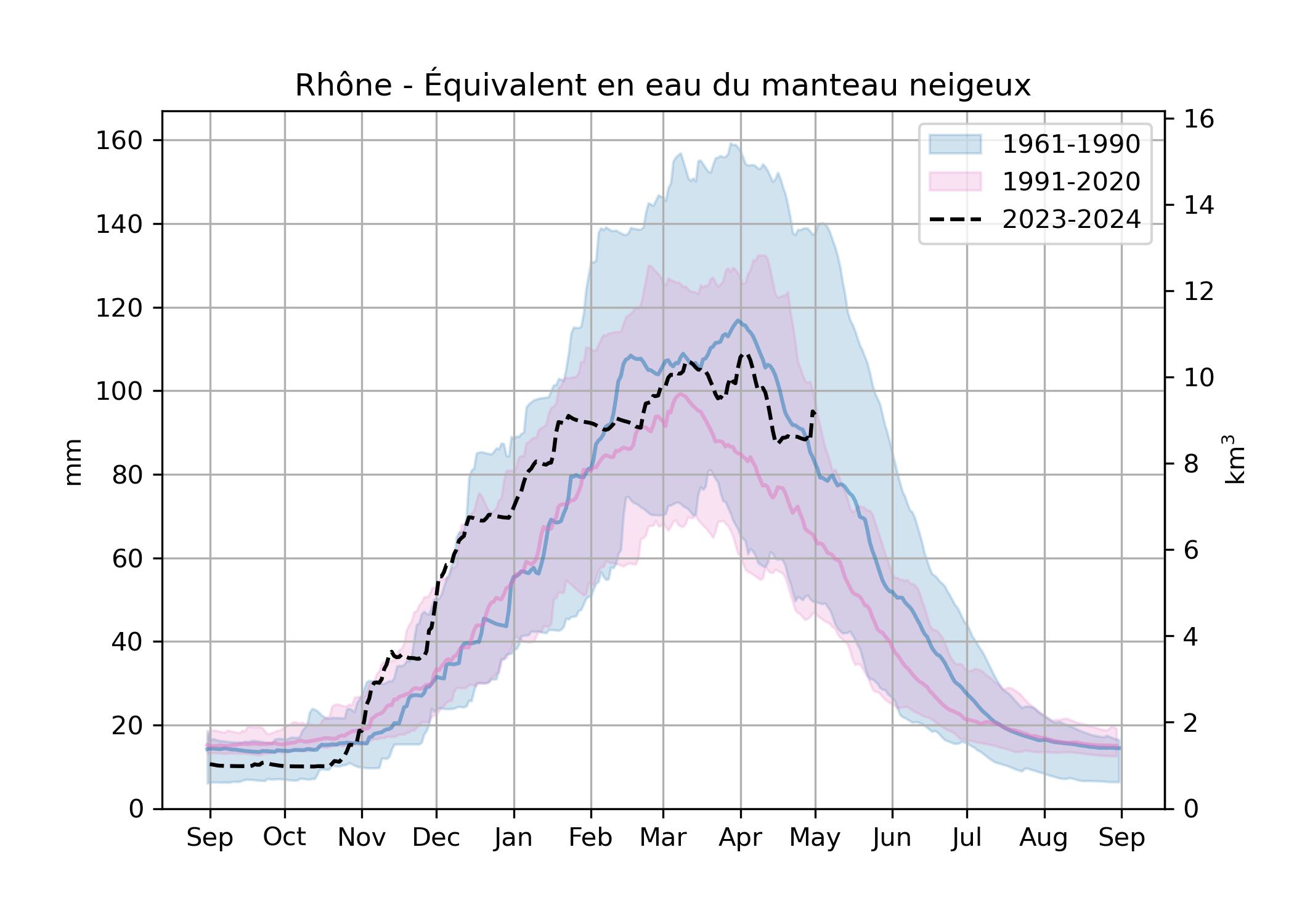

This year has a particularly large snow surplus in the Alps, especially at high elevation. The snow stock in the Rhône basin in spring 2024 is well above the normal of the last thirty years, but close to that of 1961-1990. Yet no such flood has occurred at La Bérarde since at least the mid-20th century. What happened?

The area is well instrumented, with a hydrometric station on the Vénéon 3 km downstream of La Bérarde (1581 m) and a weather station 6 km away by road, near the village of Saint-Christophe-en-Oisans (1564 m).

Hourly precipitation and discharge series between 19 June 00:00 and 21 June 18:00 UTC.

The precipitation total measured between 19 and 21 June is considerable: exactly 100 mm in 34 hours. I computed the catchment of the Vénéon at the Saint-Christophe-en-Oisans hydrometric station from IGN's RGE ALTI® 5m digital terrain model. Its area is 104 km², so the volume of water received during these 34 hours is about 10.4 million cubic metres, i.e. an average flow of about 85 m³/s over the period. The flood discharge was approaching 80 m³/s before the record stopped. These 100 mm of intense rain probably explain most of the flood. Unfortunately, the discharge series is interrupted, and so far it is impossible to close the water balance. Can satellite images tell us more about the contribution of the snowpack?
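The conversion from rain depth to an average flow is simple arithmetic: depth × area gives a volume, and dividing by the event duration gives a flow. The sketch below assumes, like the reasoning above, that the gauge value applies uniformly to the whole catchment and ignores infiltration, storage and snowmelt; the function names are illustrative.

```python
def rain_volume_m3(rain_mm, area_km2):
    """Volume of water (m³) delivered by a rain depth spread uniformly over a catchment."""
    return (rain_mm / 1000.0) * (area_km2 * 1e6)  # depth in m × area in m²


def mean_discharge_m3s(rain_mm, area_km2, duration_h):
    """Average flow (m³/s) needed to evacuate that volume over the event duration."""
    return rain_volume_m3(rain_mm, area_km2) / (duration_h * 3600.0)


print(f"{rain_volume_m3(100, 104):,.0f} m³, {mean_discharge_m3s(100, 104, 34):.1f} m³/s")
```

For 100 mm over 104 km² in 34 hours this gives about 10.4 million m³ and an average of about 85 m³/s.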

Vénéon catchment at the St-Christophe-en-Oisans hydrometric station (red marker) and the associated weather station (blue marker).
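The post does not say which tool was used to delineate the catchment from the RGE ALTI® 5m. A common approach is the D8 flow-direction method: each cell drains to its steepest downslope neighbour, and the catchment is every cell whose flow path reaches the outlet. The sketch below assumes a small DTM without closed depressions (a real DTM must first be filled or breached); the function names are hypothetical.

```python
import math
from collections import deque

# Offsets (row, col) of the 8 neighbours considered by the D8 method
NEIGHBOURS = [(-1, -1), (-1, 0), (-1, 1), (0, -1), (0, 1), (1, -1), (1, 0), (1, 1)]


def d8_receivers(dem):
    """Map each cell to its steepest-descent neighbour, or None if no neighbour is lower."""
    rows, cols = len(dem), len(dem[0])
    receivers = [[None] * cols for _ in range(rows)]
    for r in range(rows):
        for c in range(cols):
            best_slope = 0.0
            for dr, dc in NEIGHBOURS:
                nr, nc = r + dr, c + dc
                if 0 <= nr < rows and 0 <= nc < cols:
                    # Drop divided by distance (diagonal neighbours are sqrt(2) cells away)
                    slope = (dem[r][c] - dem[nr][nc]) / math.hypot(dr, dc)
                    if slope > best_slope:
                        best_slope, receivers[r][c] = slope, (nr, nc)
    return receivers


def catchment(dem, outlet):
    """Set of (row, col) cells whose D8 flow path reaches `outlet`."""
    donors = {}  # reversed flow graph: cell -> cells draining directly into it
    for r, row in enumerate(d8_receivers(dem)):
        for c, target in enumerate(row):
            if target is not None:
                donors.setdefault(target, []).append((r, c))
    basin, queue = {outlet}, deque([outlet])
    while queue:  # breadth-first walk upstream from the outlet
        for donor in donors.get(queue.popleft(), []):
            if donor not in basin:
                basin.add(donor)
                queue.append(donor)
    return basin
```

The catchment area then follows from the cell count, e.g. `len(basin) * 5.0 ** 2 / 1e6` km² for a 5 m grid. The breadth-first walk avoids deep recursion on large rasters.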

This catchment drains high-mountain areas, including the subsidiary summit of the Barre des Ecrins, which rises above 4000 m. The diurnal fluctuations in discharge indicate that snowmelt had been feeding the Vénéon since early June (the melt rate is largely controlled by solar energy, so it follows the daily cycle).

Daily precipitation series and hourly discharge series of the Vénéon at St-Christophe-en-Oisans. The discharge series stops from 21 June, probably because of damage caused by the flood.

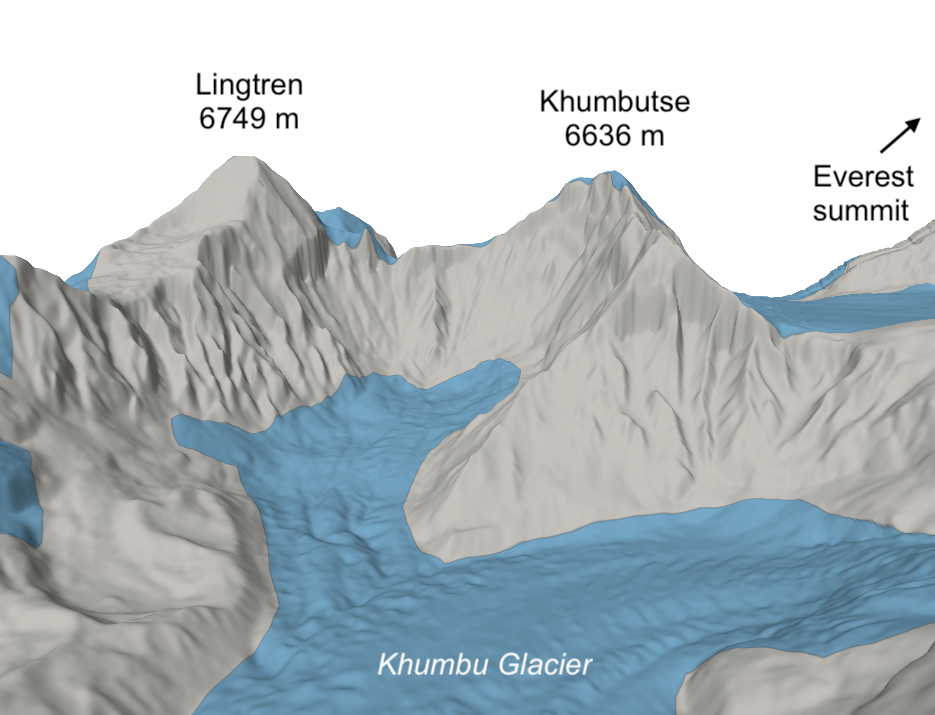

Sentinel-2 images before and after the flood show that the snowpack shrank between the two dates, since its lower limit moved up in elevation. Another interesting detail is the change in colour: the white snow-covered areas at high elevation disappeared, which suggests that melt occurred up to the highest summits of the catchment. Indeed, Saharan dust was deposited at the end of March 2024: where the clean snow that fell afterwards has melted, the darker dusty layer is exposed again.

[https:]]

The evolution of snow cover can be analysed with the Theia or Copernicus snow maps. Here, for example, is the one for 2 July 2024.

One way to summarize snow cover while getting rid of clouds is to compute the « snow line », i.e. the elevation that, on average, marks the lower limit of the snow cover. To compute it, I use the algorithm of Krajčí et al. (2014), which minimizes the sum of the snow-covered area below the snow line and the snow-free area above it. In this example, we get 2740 m.
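The criterion described above can be sketched as a brute-force search over candidate elevations. This is a simplified reading of the Krajčí et al. (2014) approach, assuming cloudy pixels are discarded and every clear pixel counts as one unit of area; the published method may differ in details such as elevation binning, and the function name is hypothetical.

```python
def snow_line_elevation(pixels, candidates):
    """Trial elevation that minimises snow below the line plus snow-free ground above it.

    pixels: iterable of (elevation_m, state), state in {"snow", "no_snow", "cloud"}.
    candidates: iterable of trial snow-line elevations (m).
    Cloudy pixels are discarded; every clear pixel counts as one unit of area.
    """
    clear = [(z, state) for z, state in pixels if state != "cloud"]
    best_line, best_cost = None, float("inf")
    for line in candidates:
        snow_below = sum(1 for z, state in clear if state == "snow" and z < line)
        bare_above = sum(1 for z, state in clear if state == "no_snow" and z >= line)
        if snow_below + bare_above < best_cost:  # ties keep the lowest candidate
            best_line, best_cost = line, snow_below + bare_above
    return best_line
```

Because clouds are simply dropped, the estimate stays usable on partly cloudy dates, which is what makes the snow line a convenient summary of a snow map.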

Snow maps have been available since 2015 (Landsat 8, Sentinel-2). The full series over the Vénéon catchment at St Christophe shows the snow line rising between April (01/04 = day 91) and August (01/08 = day 213).

The year 2024 stands out with a lower snow line than in the previous eight years.

Overlaying this snow line with the 0 °C isotherm from ERA5 shows that the entire catchment was below the 0 °C isotherm (i.e. in above-freezing air) a few days before the flood, which suggests (1) that it rained at very high elevation and (2) that there was a meltwater contribution, especially as the snowpack had already been well warmed in early June.

Very high resolution satellite images (Pléiades Neo) were acquired as part of CIEST2. They show traces of heavy rain on the rain-washed snow and wet-snow avalanches around the Bonne Pierre glacier.

Satellite image of the snowpack on the Bonne Pierre glacier (Pléiades Neo, 4 July 2024, colour composite)

Satellite image of the snowpack on the Bonne Pierre glacier (Pléiades Neo, 4 July 2024, panchromatic)

In conclusion, the available data show that this flood was a rain-on-snow flood. All the ingredients favouring this type of flood were present:

- intense rainfall;

- a particularly extensive snowpack, with a low snow line for the season;

- a snowpack already in, or close to, the melting regime (isothermal, ripe snowpack);

- heat input during the event, here a warm and humid atmosphere that must have emitted large amounts of thermal radiation.

Melt rates can exceed 20 mm/day in mountain areas. This melt input must be weighted by the snow-covered fraction of the catchment. On 17 June, 60% of the catchment area was snow-covered, so the contribution can be estimated at potentially around 15 mm/day during the flood, to be compared with the 100 mm of rain measured at St-Christophe during the episode. The snowpack may therefore have significantly increased the liquid water input to this catchment. However, other aggravating factors should be considered:

- an increase in precipitation rate through the orographic effect: the precipitation measured at St-Christophe at 1564 m is probably an underestimate at the scale of the La Bérarde catchment, which extends up to 4086 m (Pic Lory);

- the saturation of soils and groundwater (including glacial thermokarst) after a wet spring and several weeks of ongoing snowmelt.
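The weighting of melt by the snow-covered fraction, in the estimate of roughly 15 mm/day above, can be made explicit in a few lines. The local melt rate of 25 mm/day is a hypothetical value chosen to be consistent with "more than 20 mm/day" and 60% snow cover; applying it over the 34-hour rainfall window is also an assumption. Function names are illustrative.

```python
def basin_melt_mm_per_day(local_melt_mm_per_day, snow_fraction):
    """Catchment-average melt: local melt only occurs on the snow-covered fraction."""
    return local_melt_mm_per_day * snow_fraction


def event_total_mm(rate_mm_per_day, duration_h):
    """Accumulate a daily rate over an event lasting `duration_h` hours."""
    return rate_mm_per_day * duration_h / 24.0


# Hypothetical local melt rate of 25 mm/day (the post only says rates can exceed 20 mm/day);
# 60% of the catchment was snow-covered on 17 June.
melt = basin_melt_mm_per_day(25.0, 0.60)
print(f"{melt:.0f} mm/day, {event_total_mm(melt, 34):.0f} mm over 34 h, vs 100 mm of rain")
```

Under these assumptions the snowpack adds roughly a fifth of the rain input (about 21 mm versus 100 mm), before accounting for the orographic and saturation effects listed above.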

These rain-on-snow events are well studied in the USA, where they are known to trigger devastating floods (such as the overflow of Lake Oroville in California). Several studies show that climate change increases the risk of rain-on-snow floods in high mountains [1, 2].

Since the Pléiades Neo images are stereo acquisitions, they should also allow geomorphologists to compute the volumes of sediment carried by this exceptional flood.

Update 15/07/2024. SYMBHI tells us that « the discharge of the Vénéon reached 200 m³/s (source EDF) at Plan du Lac (St Christophe en Oisans) ». This would correspond to an hourly water depth of about 7 mm over the catchment, which remains consistent with the measurements of the St Christophe rain gauge (3 hours with rates above 8 mm/h were recorded).
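The "7 mm per hour" figure is the inverse conversion: one hour of a given discharge, spread over the 104 km² catchment computed earlier in the post. A minimal sketch, with an illustrative function name:

```python
def hourly_depth_mm(discharge_m3s, area_km2):
    """Water depth (mm) over the catchment equivalent to one hour of a given discharge."""
    volume_per_hour_m3 = discharge_m3s * 3600.0
    return volume_per_hour_m3 / (area_km2 * 1e6) * 1000.0  # m converted to mm


print(f"{hourly_depth_mm(200, 104):.1f} mm/h")
```

A peak of 200 m³/s gives about 6.9 mm/h, below the three hourly rain rates above 8 mm/h recorded at the gauge.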

-


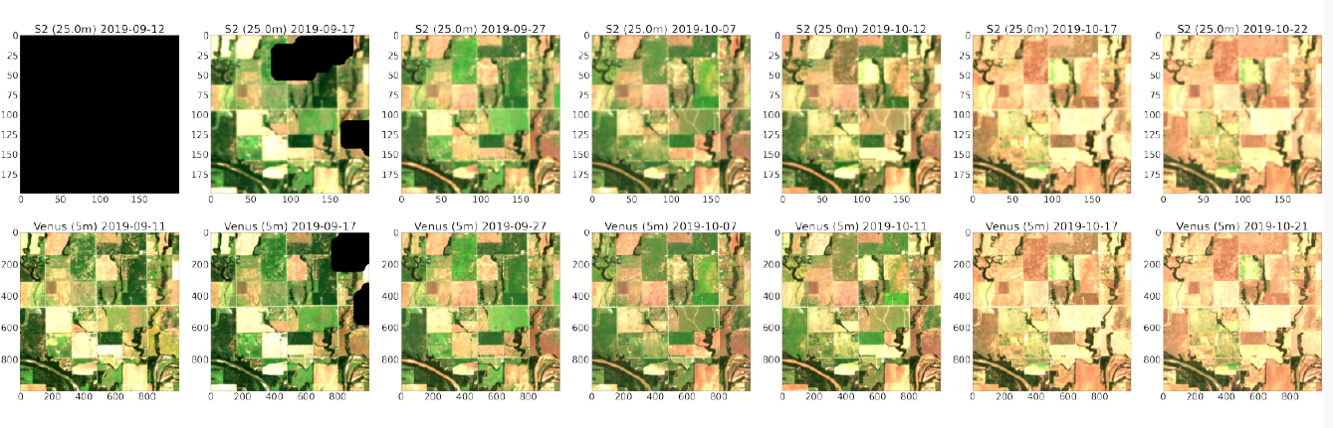

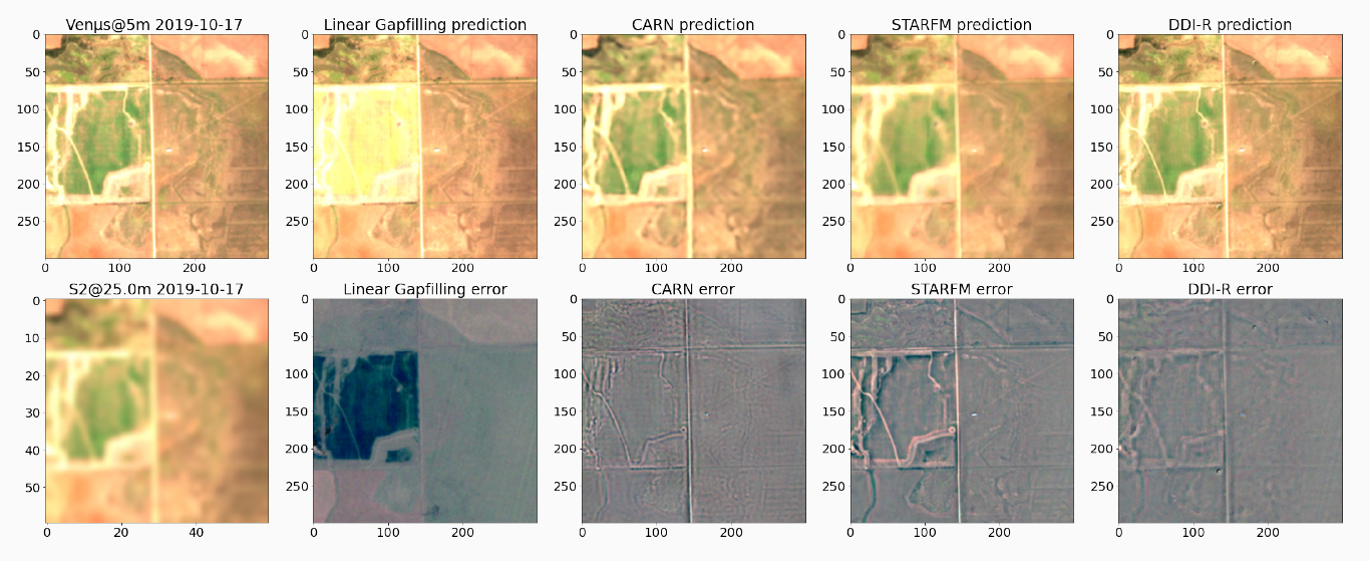

9:52 End of the VENµS image acquisition phase

on Séries temporelles (CESBIO)

It is with some sadness but also a lot of pride that I remind you that the VENµS operational phase will end at the end of July, after 7 years of good work. The current acquisition phase (VM5) will stop on 12 July. The remaining weeks will be devoted to a few technical experiments (acquisitions over Israel will continue until the end of July); then our Israeli colleagues will empty the tanks by lowering the orbit, « passivate » the satellite, and let the upper layers of the atmosphere reduce its speed and lower its altitude until it burns up in the atmosphere in a few years.

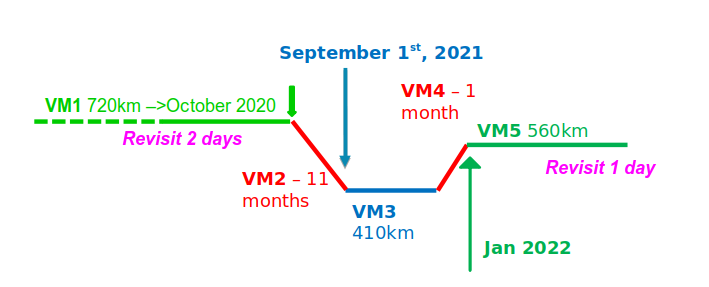

The space agencies of France (CNES) and Israel (ISA) launched the VENµS micro-satellite in August 2017, and for a micro-satellite, it has had quite an unusual life! VENµS was first injected into orbit at 720 km altitude. It stayed there for 3 years (phase 1 of the VENµS mission, VM1), then its orbit was lowered to 400 km (VM2), where it was kept for a few months (VM3), before being raised again (VM4) to 560 km (VM5), where it remained for two and a half years.

VENµS indeed had two missions:

- to test an ion propulsion engine and demonstrate that it could change orbit and even keep the satellite at 400 km altitude, compensating for the atmospheric drag caused by the highest layers of the Earth's atmosphere;

- to take repeated images of selected sites at high resolution (4 to 5 m), with frequent revisits (1 or 2 days), 12 narrow spectral bands, and a high-quality instrument.

The two phases VM1 and VM5 were used to observe about 100 sites (different sites for each phase), with a two-day revisit during VM1 and a one-day revisit for some sites during VM5. All images were processed to Level 1C and Level 2A. For VM5, a full reprocessing will be carried out at the end of 2024 in order to obtain a consistent dataset, with the latest updated geometric and radiometric correction parameters and the same versions of the processing chains throughout the lifetime of VENµS. The same reprocessing was carried out after the end of VM1 in 2022.

Current dashboard of the CNES distribution website, with the number of available products. Only images with a sufficient proportion of cloud-free pixels are provided, and the statistics do not include the products over Israel distributed by Ben-Gurion University of the Negev.

Although the scientific impact of VENµS did not live up to initial expectations because of its late launch (VENµS was meant to demonstrate the power of multitemporal optical observations, but was finally launched after Sentinel-2), it nevertheless encouraged us to prepare intensively for the arrival of Sentinel-2 observations. The development of many methods, such as those of the MAJA, WASP and Iota2 processors, was motivated by the existence of the VENµS project.

Moreover, the data acquired by VENµS will remain available [https:] and we hope that its unique combination of resolution (4 m) and revisit (1 day) with 12 bands will still be useful for many years to come. Our little satellite is becoming increasingly well known in the deep learning community, since it was used to build the Sen2VENµS dataset for learning to improve the resolution of Sentinel-2, and to test data fusion methods between Sentinel-2 and VENµS.

With this post, we wish to thank all the teams in France and Israel who contributed to the decision, funding, construction, operation and data processing of this satellite. The list of people who made a significant contribution would be too long (hundreds), and the risk of forgetting someone too high, so we will only mention Gerard Dedieu and Arnon Karnieli, the initial French and Israeli PIs (Arnon is still PI), who devoted many years of their careers to the success of this little satellite.

Written by Olivier Hagolle (CNES/CESBIO) with the help of Arnon Karnieli (BGU)

PS: The end of VM5 was a difficult period, with an ageing satellite that had far exceeded its nominal lifetime of 3 years, with a goal set at 5 years. A non-negligible proportion of acquisition attempts failed, and some sites did not yield the expected time series. We apologize if the data collection did not meet your expectations. However, our data collection includes more than 82,000 products (56,000 for VM1 and 26,000 for VM5), which gives us many fine series of ready-to-use products (Analysis Ready Data).

-


12:05 The end of VENµS imaging phase

on Séries temporelles (CESBIO)

It is with some sadness but also a lot of pride that I remind you that the VENµS operational phase will end at the end of July after 7 years of good work. The current acquisition phase (VM5) will stop on the 12th of July. The remaining weeks will be devoted to a few technical experiments (acquisitions over Israel will go on until end of July); then our Israeli colleagues will empty the tanks by lowering the orbit, passivate the satellite, and let the higher layers of the atmosphere reduce its speed and lower its altitude until it burns up in the atmosphere in a few years.

The French and Israeli space agencies (CNES and ISA) launched the VENµS micro-satellite in August 2017, and for a micro-satellite, it has had quite a special life ! VENµS was first injected into orbit at 720 km altitude. It stayed there for 3 years (VENµS Mission 1 phase, VM1), then its orbit was lowered to 400 km (VM2), it was maintained there for a few months (VM3), before being raised again (VM4) to 560 km (VM5) where it stayed for two and a half years.

VENµS indeed had two missions :

- test an ion propulsion engine and verify that it could change orbits and even maintain the satellite at 400 km altitude despite the atmospheric drag caused by the highest layers of the Earth's atmosphere

- take repetitive images of selected sites at high resolution (4 to 5 m), with frequent revisits (1 or 2 days), 12 narrow spectral bands, and a high-quality instrument

The two phases VM1 and VM5 were used to observe around 100 sites (different sites for each phase), with a revisit of two days during VM1, and one day for some sites during VM5. All the images have been processed to Level 1C and Level 2A. Regarding VM5, a full reprocessing will be done at the end of 2024 to have a consistent data set with the latest updated geometric and radiometric correction parameters, and the same updated versions of software during the whole life of VENµS. The same reprocessing had been done after the end of VM1 in 2022.

Current dashboard of CNES distribution website, with the number of available products. Only images with a sufficient proportion of cloud free pixels are produced, and the statistics do not account for the products over Israel distributed at BGU.


Although VENµS’ scientific impact was not as high as expected due to its late launch (VENµS was meant to demonstrate the power of multi-temporal optical observations, but was finally launched after Sentinel-2), it nevertheless encouraged us to prepare intensively for the arrival of Sentinel-2 observations. The development of many methods, such as those in the MAJA, WASP, Iota2 and LIS processors, was motivated by the existence of the VENµS project.

Moreover, the data acquired by VENµS are here to stay ( [https:]] ), and we hope its unique combination of resolution (4m) and revisit (1 day) with 12 bands, will still be useful for several years to come. Our little satellite is getting very well known in the deep learning community, as it has been used to build the Sen2VENµS data set for learning to improve the resolution of Sentinel-2, or to test data fusion methods between Sentinel-2 and VENµS.

With this post, we would like to thank all the teams in France and Israel who contributed to the decision, funding, building, exploitation and data processing of this satellite. The list of persons who made a meaningful contribution would be too long (hundreds), and the risk of forgetting someone would be too high, so we will only cite Gerard Dedieu and Arnon Karnieli, the initial French and Israeli PIs (Arnon is still PI), who devoted many years of their careers to the success of this little satellite.

Written by VENµS PI: Olivier Hagolle (CNES/CESBIO) with the help of Arnon Karnieli (BGU)

PS: The end of VM5 was a difficult period, with an aging satellite that was well over its nominal life of 3 years, with a goal set to 5 years. A non-negligible proportion of the attempted acquisitions failed, and some sites did not yield the expected time series. We apologize if the data collection did not meet your expectations. However, our data collection includes more than 82,000 products, 56,000 for VM1 and 26,000 for VM5, providing a large amount of analysis-ready data.

-

0:23 Recent evolution of the snowpack in the Pyrénées Orientales

on Séries temporelles (CESBIO)

After I published the current state of the snow stock in the Rhône and Garonne basins, some readers suggested that I repeat the analysis for the coastal rivers of the Pyrénées Orientales, which have been suffering from drought since spring 2022. Here is the result for the region formed by aggregating the Tech and Têt basins (2095 km² in total).

Evolution of the snow water equivalent in the Tech and Têt basins up to 1 May 2024 (SIM2 model, Météo-France)
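Basin-scale curves like this one come from aggregating gridded SIM2 output over the two basins. A minimal sketch of such an aggregation is an area-weighted mean of the snow water equivalent (SWE) of the grid cells. It assumes that the fraction of each 8 km cell lying inside the basins has already been computed; the function name and the toy values are hypothetical and do not reflect the SIM2 file format.

```python
import numpy as np

CELL_AREA_KM2 = 8.0 * 8.0   # SIM2 grid cells are 8 km x 8 km

def basin_mean_swe(swe_mm, inside_fraction):
    """Area-weighted mean snow water equivalent (mm) over a basin.

    swe_mm: array (n_days, n_cells) of daily SWE for each grid cell.
    inside_fraction: array (n_cells,) with the fraction of each cell lying
    inside the basin (hypothetical pre-computed input).
    """
    weights = inside_fraction * CELL_AREA_KM2        # km² of each cell inside the basin
    return swe_mm @ weights / weights.sum()          # (n_days,) basin-mean SWE

# Toy example: two cells fully inside the basin, one half inside, over three days
swe = np.array([[100.0, 50.0, 0.0],
                [80.0, 40.0, 0.0],
                [60.0, 20.0, 0.0]])
frac = np.array([1.0, 1.0, 0.5])
print(basin_mean_swe(swe, frac))  # [60. 48. 32.]
```

Because all cells share the same area, the constant cancels out in the mean. It is kept only so that `weights.sum()` reads as the basin area in km², which for real data would roughly match the 2095 km² of the Tech and Têt basins.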

This is the second consecutive year of severe snow deficit in these basins.

Evolution of the snow water equivalent over the last two hydrological years in the Tech and Têt basins.

The current year, like the previous one, is far below the 1991-2020 normals, and even further below the 1961-1990 normals, in these basins.

Evolution of the snow water equivalent in the Tech and Têt basins. The envelopes correspond to the 10-90 percentiles and the solid lines to the median.
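Envelopes of this kind can be obtained by stacking one SWE curve per hydrological year of a reference period (for instance 1961-1990 or 1991-2020) and taking, for each day, the 10th, 50th and 90th percentiles across years. The sketch below is a minimal illustration on synthetic data; the array layout (one row per year, aligned on the first day of the hydrological year) is an assumption, not the author's processing chain.

```python
import numpy as np

def climatology_envelope(swe_by_year):
    """Daily 10th percentile, median and 90th percentile across years.

    swe_by_year: array (n_years, n_days) holding one basin-mean SWE curve per
    hydrological year, all aligned on the first day of the hydrological year.
    """
    p10, p50, p90 = np.percentile(swe_by_year, [10, 50, 90], axis=0)
    return p10, p50, p90

# Synthetic stand-in for 30 hydrological years of daily SWE (mm)
rng = np.random.default_rng(0)
years = rng.gamma(shape=2.0, scale=20.0, size=(30, 365))
low, median, high = climatology_envelope(years)
print(low.shape, median.shape, high.shape)  # (365,) (365,) (365,)
```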

The envelope around the median is wide because the Mediterranean climate is characterized by strong natural variability. Nevertheless, there is also a clear signal of earlier melt between these two periods (same graph below without the percentile envelopes).

Evolution of the snow water equivalent in the Tech and Têt basins (medians of each period).

The graph above illustrates the concept of "slower melt in a warmer world": in a warmer climate, melt starts earlier in spring, at a time when less solar energy is available for melting. Melt rates are therefore lower (the melt rate is given by the slope of the curve).
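Since the melt rate is the negative slope of the SWE curve, it can be estimated directly from a daily series. The sketch below takes, as a simplifying assumption, the melt season to run from the day of peak SWE until the snowpack first disappears, and returns the mean melt rate over that period. Applied to the median curves of two periods, it would show whether melt starts earlier and proceeds more slowly. The toy series is hypothetical.

```python
import numpy as np

def melt_diagnostics(swe_mm):
    """Return (peak_day, mean_melt_rate_mm_per_day) for a daily SWE series.

    The melt season is assumed to run from the day of maximum SWE until SWE
    first reaches zero, or until the end of the series if it never does.
    """
    swe = np.asarray(swe_mm, dtype=float)
    peak = int(np.argmax(swe))
    zeros = np.flatnonzero(swe[peak:] <= 0.0)
    end = peak + (int(zeros[0]) if zeros.size else len(swe) - 1 - peak)
    days = end - peak
    # Mean melt rate = SWE lost between peak and end, divided by the duration
    rate = float(swe[peak] - swe[end]) / days if days > 0 else 0.0
    return peak, rate

# Toy series: accumulation up to day 3, then melt of 25 mm per day
print(melt_diagnostics([0, 40, 80, 100, 75, 50, 25, 0, 0]))  # (3, 25.0)
```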

I carry out these analyses using simulations from the operational SIM2 model, which Météo-France now makes available to everyone. This model is forced by in situ meteorological data and gives very good results when compared with satellite-derived time series of snow-covered area over the Pyrenees (see this post). Nevertheless, the spatial resolution of SIM2 is rather coarse (8 km), which can cause errors in snow modelling at more local scales, even though the model uses a sub-grid parameterization to account, to first order, for the effect of topography. It should therefore be evaluated in more detail over the Pyrénées Orientales to confirm these conclusions, given the major stakes associated with the snowpack in this region for agriculture, hydropower and ecosystems.

Photo: eastern slope of the Massif du Canigou in spring 2019 (Damusmedia, CC BY-SA 4.0, via Wikimedia Commons)

-

14:29 Sentinel-2 Enhance button: 5-meter resolution for 10 bands at your fingertips

on Séries temporelles (CESBIO)

The Enhance button is a very common movie trope, where a character scrolls through some video footage or photos and asks a computer to enhance its resolution to an insane level of detail, solving crime mysteries and conspiracies of all sorts with clues that were invisible in the original image. While this meme has frequently been parodied and mocked for defying science, it did not stop researchers and engineers of our deep-learning era from harnessing the power of both GPUs and automatic differentiation to push forward the performance of Single Image Super-Resolution (SISR), which is the scientific name of the Enhance button.

Of course, the remote sensing community wants its Enhance button too! Within the EVOLAND project, CESBIO has developed sentinel2_superresolution and released it under the Apache 2.0 license. This tool takes an L1C or L2A (Theia format) Sentinel-2 product as input and outputs 10 of the most useful bands (namely B2, B3, B4, B5, B6, B7, B8, B8A, B11, B12) at 5-meter resolution. It is very simple to use:

$ sentinel2_superesolution -v -i SENTINEL2A_20200808-105933-164_L2A_T31TCG_C_V2-2/ -o results/ -roi 300160.000 4590400.000 304000.000 4594240.000

Et voilà!

Red Edge band composite (B7, B6, B5) of a detail of the Sentinel-2 image of 2020.08.08 over tile 31TCG, alternating between a bicubic zoom to 5 meters and the output of sentinel2_superresolution. Note that the GIF format alters the color quantization of the original image.

Backstory

Here at CESBIO, we released the Sen2Venµs dataset in 2022. It is tailored for training SISR models that bring the bands common to Venµs and Sentinel-2 to 5-meter resolution, and we gathered it during a phase-0 study for the Sentinel-HR mission. In 2022, we then worked with Thales and MEOSS on a one-year ITT project for ESA, starting to explore the possibilities of the Sen2Venµs dataset for the super-resolution of Sentinel-2, with good performance. In 2023, we joined the consortium of the EVOLAND project, a Horizon Europe project of the European Commission aiming at designing future, enhanced Copernicus products for 2030, based on new missions, new data and new algorithms. CESBIO is responsible for the improved-resolution sub-task, which aims to provide methods that improve the spatial, temporal and spectral resolution of satellite data for downstream product prototypes.

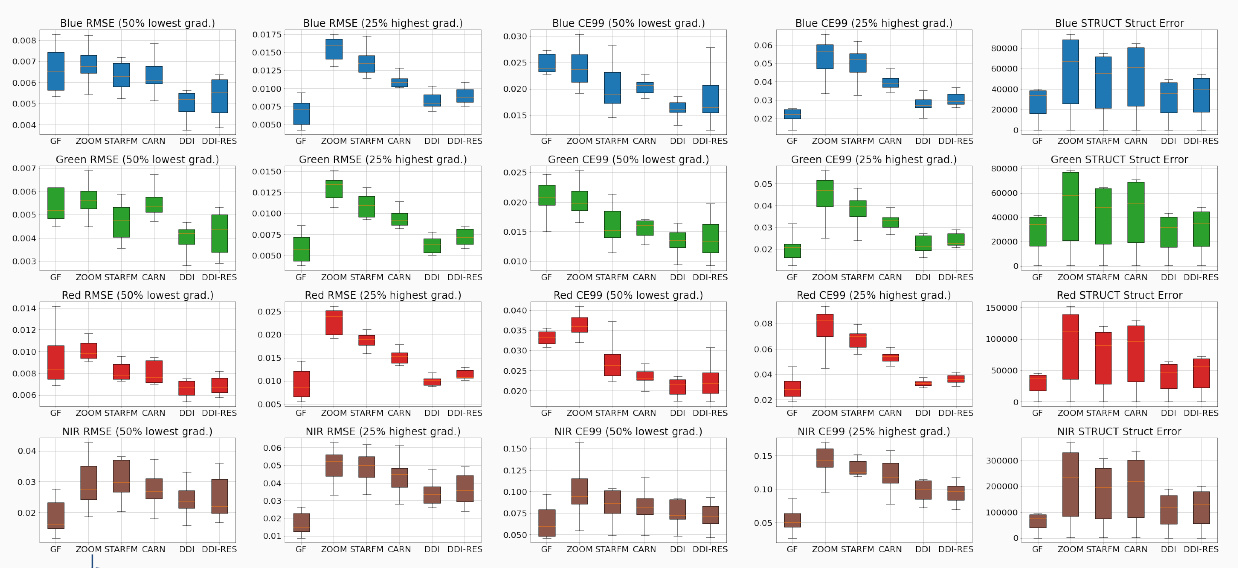

How it works

If SISR is to be part of the processing of future Copernicus products, it will be applied to tens of thousands of products, so its computational cost should be as low as possible. We therefore selected a lightweight and relatively shallow network known as Cascading Residual Network (CARN), and configured it with as few blocks as possible in order to reduce its processing cost (the selected model has only 2.5M parameters). The network has been trained to jointly process all 10 bands at once, up-sampled to 10-meter resolution. Training uses the Sen2Venµs dataset complemented with B11 and B12 patches. To work around the lack of a 5-meter Venµs reference for B11 and B12, training uses two loss terms: one at full resolution for all bands except B11 and B12, and another specifically targeting B11 and B12 and operating at lower resolution through the Wald protocol.

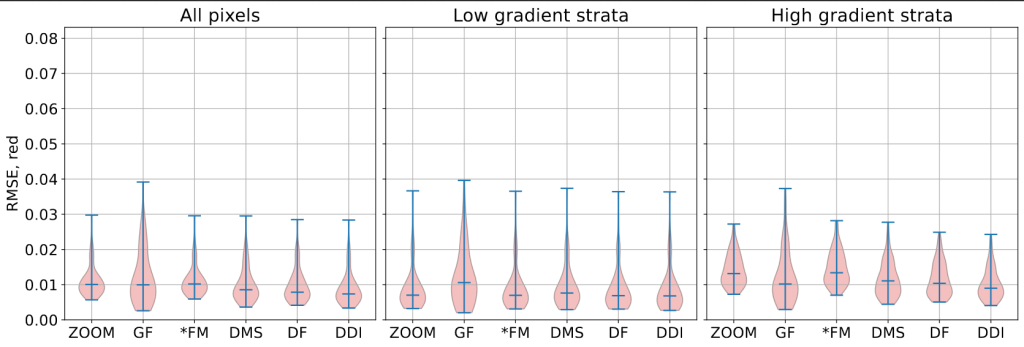

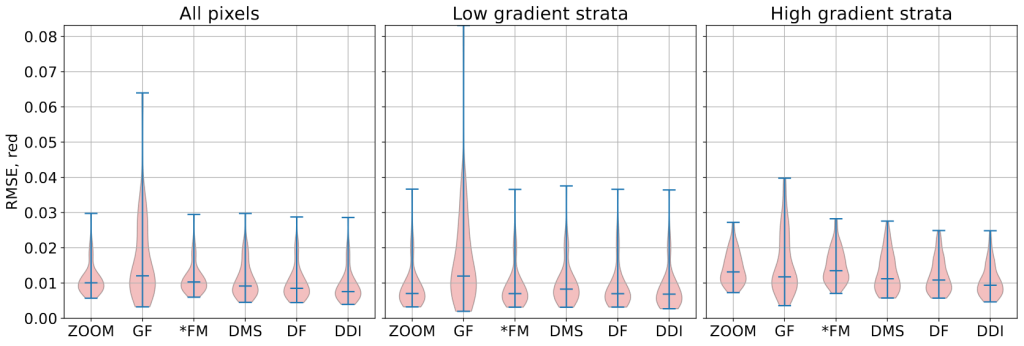

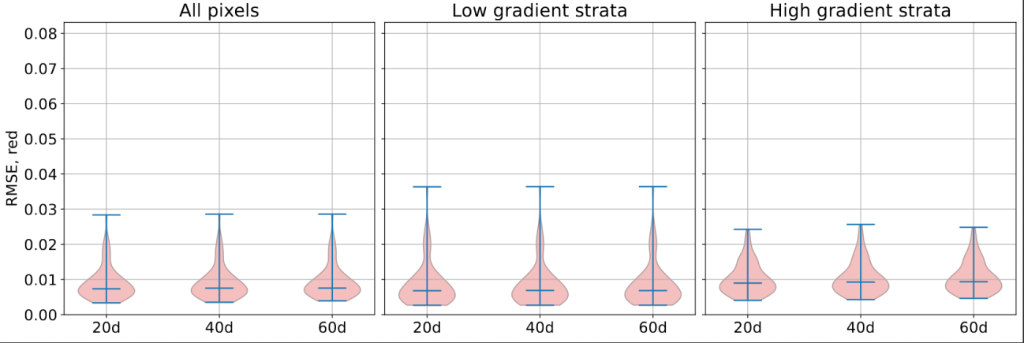
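One way to read the two-term training objective is sketched below: an L1 loss against the 5 m Venµs reference for the eight bands Venµs shares with Sentinel-2, plus a Wald-protocol term for B11 and B12, in which the input is degraded by a factor of 2, super-resolved back to 10 m and compared with the original B11 and B12. The band ordering, the block-average degradation, the equal weighting of the two terms and all function names are assumptions for illustration; the actual CESBIO implementation may differ.

```python
import numpy as np

# Assumed band order of the 10-band stack: B2, B3, B4, B5, B6, B7, B8, B8A, B11, B12
VENUS_BANDS = list(range(8))   # bands with a 5 m Venµs reference
SWIR_BANDS = [8, 9]            # B11 and B12: no 5 m reference available

def degrade(img, factor=2):
    """Block-average downsampling of a (bands, H, W) stack (crude stand-in for an MTF filter)."""
    c, h, w = img.shape
    return img.reshape(c, h // factor, factor, w // factor, factor).mean(axis=(2, 4))

def l1(a, b):
    return float(np.mean(np.abs(a - b)))

def two_term_loss(model, s2_10m, venus_5m, swir_weight=1.0):
    """Full-resolution L1 term plus a Wald-protocol term for B11/B12.

    model: callable mapping a (10, H, W) stack to a (10, 2H, 2W) stack.
    s2_10m: (10, H, W) Sentinel-2 bands, all resampled to 10 m.
    venus_5m: (8, 2H, 2W) Venµs reference for the non-SWIR bands.
    """
    # Term 1: 5 m prediction vs. Venµs reference, B11/B12 excluded
    loss_full = l1(model(s2_10m)[VENUS_BANDS], venus_5m)
    # Term 2 (Wald protocol): degrade the input by 2, super-resolve it back
    # to 10 m, and use the original 10 m B11/B12 as the reference
    sr_low = model(degrade(s2_10m))
    loss_swir = l1(sr_low[SWIR_BANDS], s2_10m[SWIR_BANDS])
    return loss_full + swir_weight * loss_swir

def nearest_x2(img):
    """Stand-in 'model' with no learning: nearest-neighbour up-sampling by 2."""
    return img.repeat(2, axis=1).repeat(2, axis=2)

rng = np.random.default_rng(0)
s2 = rng.random((10, 8, 8)) * 0.3
venus = nearest_x2(s2)[VENUS_BANDS]   # toy reference that the stand-in matches exactly
print(two_term_loss(nearest_x2, s2, venus))  # only the SWIR (Wald) term is non-zero here
```

In a real training loop, `model` would be the CARN network and the loss would be computed with an autodiff framework; numpy is used here only to keep the sketch self-contained.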

The network is pre-trained with simulated data (from Venµs reference patches) for 20 epochs. We found that this pre-training already gives results consistent with pre-training on real data, as shown in the following figure.

Comparison between different pre-training methods. From left to right: bicubic up-sampling, L1 loss on real data, L1 loss on separated high and low spatial frequencies of real data, L1 loss on simulated data, and Venµs reference.
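Pre-training on simulated data only requires Venµs patches: the 5 m reference is degraded to mimic a 10 m Sentinel-2 input, and the network learns to invert that degradation. The sketch below is minimal and assumes a separable Gaussian blur followed by decimation by 2. The blur width is a hypothetical value, and the degradation actually used by CESBIO may model the instrument MTF and noise differently.

```python
import numpy as np

def gaussian_kernel(sigma):
    """Normalized 1D Gaussian kernel truncated at about 3 sigma."""
    radius = max(1, int(3 * sigma + 0.5))
    x = np.arange(-radius, radius + 1)
    k = np.exp(-0.5 * (x / sigma) ** 2)
    return k / k.sum()

def blur(img, sigma):
    """Separable Gaussian blur of a (bands, H, W) array, with reflect padding."""
    k = gaussian_kernel(sigma)
    r = len(k) // 2
    padded = np.pad(img, ((0, 0), (r, r), (r, r)), mode="reflect")

    def conv(v):
        return np.convolve(v, k, mode="valid")

    rows = np.apply_along_axis(conv, 2, padded)    # filter along x: (bands, H + 2r, W)
    return np.apply_along_axis(conv, 1, rows)      # filter along y: (bands, H, W)

def simulate_pair(venus_5m, sigma=0.8, factor=2):
    """(simulated 10 m input, 5 m target) built from a Venµs patch.

    sigma is expressed in 5 m pixels and is a hypothetical value.
    """
    low = blur(venus_5m, sigma)[:, ::factor, ::factor]
    return low, venus_5m

patch = np.random.default_rng(0).random((8, 32, 32))   # toy 8-band Venµs patch
low, high = simulate_pair(patch)
print(low.shape, high.shape)  # (8, 16, 16) (8, 32, 32)
```

Because the target is the exact image the input was derived from, these pairs have no geometric or radiometric mismatch, unlike real Sentinel-2/Venµs pairs.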

The best network from pre-training is then fine-tuned with adversarial (GAN) training for 10 additional epochs, while monitoring the BRISQUE score on the validation set to select the best model. This fine-tuning yields a minor quality improvement which, interestingly, mostly benefits the 20-meter bands, as shown in the following figure:

Comparison between pre-trained and fine-tuned models.

Performance assessment

Assessing the performance of SISR networks trained with the Sen2Venµs dataset is challenging, as already identified in the earlier work with Thalès and MEOSS, because the dataset contains many residual geometric and radiometric discrepancies between the images of the two satellites, which impair traditional image quality (IQ) metrics such as PSNR and SSIM. We first measure the radiometric consistency of each band with respect to the input Sentinel-2 image over our test set. All bands have an RMSE below 0.005 when training on simulated data (red bars), whereas training with an L1 loss on real data, or even with the more advanced HR/LR loss, incurs radiometric distortion.

Radiometric consistency with respect to input Sentinel-2 images
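The post does not detail how radiometric consistency is computed. One plausible protocol, sketched here as an assumption, is to bring the 5 m output back to the 10 m grid by 2×2 averaging and compute a per-band RMSE against the input reflectances; a threshold such as 0.005 would then apply to these values.

```python
import numpy as np

BANDS = ["B2", "B3", "B4", "B5", "B6", "B7", "B8", "B8A", "B11", "B12"]

def downsample2(img):
    """2x2 block average: brings a (bands, 2H, 2W) 5 m stack back to 10 m."""
    c, h, w = img.shape
    return img.reshape(c, h // 2, 2, w // 2, 2).mean(axis=(2, 4))

def radiometric_rmse(sr_5m, s2_10m):
    """Per-band RMSE (reflectance units) between the downsampled output and the input."""
    diff = downsample2(sr_5m) - s2_10m
    rmse = np.sqrt(np.mean(diff ** 2, axis=(1, 2)))
    return dict(zip(BANDS, rmse.tolist()))

# Toy check: an output that only adds zero-mean detail inside each 2x2 block
rng = np.random.default_rng(0)
s2 = rng.random((10, 4, 4)) * 0.3
detail = np.tile(np.array([[0.01, -0.01], [-0.01, 0.01]]), (10, 4, 4))
sr = s2.repeat(2, axis=1).repeat(2, axis=2) + detail
print(max(radiometric_rmse(sr, s2).values()) < 0.005)  # True
```

With this definition, adding high-frequency detail is "free" as long as it averages out over each 10 m pixel, which is exactly the property a radiometrically consistent super-resolution should have.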

The next, more difficult question is how much high-resolution detail is actually injected by the algorithm. A first approach is to measure the RMSE on the high spatial frequency content of the signal. Since this measure is very sensitive to geometric distortion, we compute it on simulated data. This highlights a moderate improvement for the 10-meter bands and a large improvement for the 20-meter bands, which is consistent with the visual assessment.

RMSE on high spatial frequencies measured on simulated data from the testing set.
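The post does not specify the high-pass filter used. A minimal sketch, assuming the high-frequency content is what remains after subtracting a 3×3 mean filter, computes a per-band RMSE between the high-pass parts of the prediction and of the reference. On simulated data the reference is the original Venµs patch, which is perfectly co-registered with the input.

```python
import numpy as np

def box_blur(img, size=3):
    """Mean filter over a size x size window (size odd) for each band, with edge padding."""
    r = size // 2
    h, w = img.shape[1:]
    padded = np.pad(img, ((0, 0), (r, r), (r, r)), mode="edge")
    out = np.zeros(img.shape, dtype=float)
    for dy in range(size):
        for dx in range(size):
            out += padded[:, dy:dy + h, dx:dx + w]
    return out / size ** 2

def high_freq_rmse(pred, ref, size=3):
    """Per-band RMSE between the high-pass components of pred and ref."""
    hp_pred = pred - box_blur(pred, size)
    hp_ref = ref - box_blur(ref, size)
    return np.sqrt(np.mean((hp_pred - hp_ref) ** 2, axis=(1, 2)))

rng = np.random.default_rng(0)
ref = rng.random((10, 16, 16))
smooth = box_blur(ref)                          # stand-in for an output lacking fine detail
print(high_freq_rmse(ref, ref).max())           # 0.0
print(high_freq_rmse(smooth, ref).min() > 0)    # True
```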

Finally, we can look at what happens in the Fourier domain. The super-resolved image populates the Fourier domain over twice the extent of the initial signal, which shows that super-resolution actually restores higher spatial frequencies compared with bicubic up-sampling.

From left to right: FFT of bicubic-upsampled B7, FFT of super-resolved B7, and difference between both (red means higher FFT magnitude in the super-resolved image).
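The Fourier-domain observation can be quantified, as an illustration rather than the authors' metric, by the share of spectral energy lying beyond what a 10 m image can represent. On the 5 m grid, frequencies range up to 0.5 cycles per pixel, while a 10 m image only carries frequencies up to 0.25 cycles per 5 m pixel along each axis. A higher ratio for super-resolved B7 than for bicubic-upsampled B7 would reflect restored high frequencies.

```python
import numpy as np

def high_band_energy_ratio(img_5m):
    """Fraction of the spectral energy of a 2D 5 m image beyond the 10 m Nyquist limit.

    Frequencies are in cycles per 5 m pixel, within [-0.5, 0.5); a 10 m image
    can only carry |f| <= 0.25 along each axis.
    """
    spec = np.abs(np.fft.fft2(img_5m - img_5m.mean())) ** 2
    fy = np.abs(np.fft.fftfreq(img_5m.shape[0]))[:, None]
    fx = np.abs(np.fft.fftfreq(img_5m.shape[1]))[None, :]
    beyond = (fy > 0.25) | (fx > 0.25)
    total = spec.sum()
    return float(spec[beyond].sum() / total) if total > 0 else 0.0

n = 64
x = np.arange(n)
low_freq = np.tile(np.cos(2 * np.pi * 6 * x / n), (n, 1))    # 6 cycles per 64 pixels
high_freq = np.tile(np.cos(2 * np.pi * 26 * x / n), (n, 1))  # 26 cycles per 64 pixels
print(round(high_band_energy_ratio(low_freq), 3), round(high_band_energy_ratio(high_freq), 3))  # 0.0 1.0
```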

Push the enhance button!

The sentinel2_superresolution tool can easily be installed with pip and should be straightforward to use. We estimated the following processing times for a whole Sentinel-2 product; processing is achievable even without a GPU!

| | CPU (1 core) | CPU (8 cores) | GPU (A100) |
|---|---|---|---|
| L1C | 6 hours | 1 hour | 6 minutes |
| L2A | 5 hours | 50 minutes | 5 minutes |

Within EVOLAND, we are also working on running the Sentinel-2 super-resolution algorithm in OpenEO, and the open source code for that should be released very soon. Stay tuned!

We look forward to seeing how this tool will be used by the downstream product prototypes of EVOLAND. Along with Thalès and MEOSS, we have already demonstrated a clear benefit for the Water Bodies Detection task, especially with the super-resolution of the SWIR bands (B11 and B12). We are also planning to integrate the tool as on-demand processing in GEODES. Feedback is welcome.

Credits

This work was partly performed using HPC resources from GENCI-IDRIS (Grant 2023-AD010114835).

This work was partly performed using HPC resources from CNES.

-

16:39 Training deep neural networks for Satellite Image Time Series with no labeled data

on Séries temporelles (CESBIO)

The results presented in this post are based on the published work: I. Dumeur, S. Valero, J. Inglada, "Self-supervised spatio-temporal representation learning of Satellite Image Time Series", IEEE Journal of Selected Topics in Applied Earth Observations and Remote Sensing, doi: 10.1109/JSTARS.2024.3358066.

In this paper, we describe a self-supervised learning method to train a deep neural network to extract meaningful spatio-temporal representations of Satellite Image Time Series (SITS). The code associated with this article is also available. This work is part of the PhD conducted by Iris Dumeur and supervised by Silvia Valero and Jordi Inglada. In the last few years, the CESBIO team has developed machine learning models that exploit SITS. For instance, the blog post "End-to-end learning for land cover classification using irregular and unaligned satellite image time series" presents a novel classification method based on Stochastic Variational Gaussian Processes.

Context and Introduction

With the recent launch of numerous Earth observation satellites, such as Sentinel 2, a large amount of remote sensing data is available. For example, the Sentinel 2 mission acquires images with high spatial resolution (10 m), short revisit time (5 days), and wide coverage. These data can be exploited in the form of Satellite Image Time Series (SITS), which are 4-dimensional objects with temporal, spectral and spatial dimensions. SITS provide critical information for Earth monitoring tasks such as land use classification, agricultural management, and climate change or disaster monitoring.

In addition, due to their specific acquisition conditions, SITS are irregular and have varying temporal sizes. Indeed, as detailed in this blog, areas located on different orbital paths of the satellite have different acquisition dates and different revisit frequencies, which causes, respectively, the misalignment and the irregularity of SITS. Finally, Sentinel 2 SITS are affected by various meteorological conditions (clouds, haze, fog, or cloud shadows), so some pixels within a SITS may be corrupted. Although validity masks are provided, some incorrectly acquired pixels may go undetected. In short, models adapted to SITS need to:

- Utilize the 4D temporal, spectral, and spatial information

- Deal with SITS irregularity and misalignment

- Be robust to cloudy pixels that are not detected by the validity masks

Moreover, while Deep Learning (DL) approaches have shown great performance in remote sensing tasks, these models are data-hungry, and building large labeled datasets is costly. Therefore, the training of DL models on large geographic and temporal scales is constrained by the scarcity of labels. Meanwhile, self-supervised learning has achieved impressive performance in other domains, such as image processing or natural language processing. Self-supervised learning is a branch of unsupervised learning in which the model is trained on a task generated from the data itself: the labels needed to supervise the task are derived from the data. For example, in natural language processing, as illustrated in the following image, one common self-supervised pre-training task consists of training the model to recover masked words.

Example of a Masked Language Model self-supervised training task (read upward)

Self-supervised learning can be used to pre-train a model on a large unlabeled dataset. During pre-training, the model learns representations of the input data, which are then used by a decoder to perform the self-supervised task. In a second phase, these latent representations can be used for various supervised tasks, called downstream tasks. In this case, as illustrated in the following image, a downstream classifier is trained on top of the latent representations generated by the pre-trained model.

Description of the link between self-supervised pre-training and supervised downstream task

When the self-supervised pre-training is successful:

- The pre-trained model provides latent representations that are relevant for a diverse set of downstream tasks

- If the downstream task lacks the labeled data needed to train a Deep Neural Network (DNN) from scratch, loading a pre-trained model is expected to improve performance.

Considering all of the above, we propose a new method, named U-BARN (Unet-Bert spAtio-temporal Representation eNcoder).

We present two main contributions:

- A new spatio-temporal architecture to exploit the spatial, spectral and temporal dimensions of SITS. This architecture is able to handle irregular and unaligned annual time series.

- A self-supervised pre-training strategy suitable for SITS

Then, the quality of the representations is assessed on two different downstream tasks: crop and land cover segmentation. Due to the specific pre-training strategy, cloud masks are not required for the downstream tasks.

Method

U-BARN architecture

As described in the previous image, U-BARN is a spectro-spatio-temporal architecture composed of two successive blocks:

- Patch embedding: a spatial-spectral encoder (a Unet) that processes each image of the SITS independently. No temporal features are extracted in this block.

- Temporal Transformer: processes pixel-level time series of pseudo-spectral features. No further spatial features are extracted in this block.

The details of the U-BARN architecture are given in the full paper. We used a Transformer to process the temporal dimension, as it can handle irregular and unaligned time series while being highly parallelizable. Lastly, the latent representation provided by U-BARN has the same temporal dimension as the input SITS.
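A Transformer copes with irregular, unaligned series because each acquisition carries its own date through a positional encoding, and attention operates on sets of any length. The minimal single-head self-attention sketch below illustrates this for one pixel's time series. The day-of-year sinusoidal encoding, the dimensions, the padding scheme and the random projection weights are illustrative assumptions, not U-BARN's actual configuration, which is given in the paper.

```python
import numpy as np

def doy_encoding(doy, d_model):
    """Sinusoidal encoding of acquisition day-of-year (works for any set of dates)."""
    doy = np.asarray(doy, dtype=float)[:, None]               # (T, 1)
    i = np.arange(d_model // 2)[None, :]                       # (1, d_model/2)
    angles = doy / (1000.0 ** (2 * i / d_model))
    return np.concatenate([np.sin(angles), np.cos(angles)], axis=1)  # (T, d_model)

def self_attention(x, valid, wq, wk, wv):
    """Single-head self-attention over the T dates of one pixel.

    x: (T, d) features per date; valid: (T,) boolean, False for padded dates.
    """
    q, k, v = x @ wq, x @ wk, x @ wv
    scores = q @ k.T / np.sqrt(k.shape[1])                      # (T, T)
    scores = np.where(valid[None, :], scores, -np.inf)          # padded dates get no attention
    scores = scores - scores.max(axis=1, keepdims=True)
    weights = np.exp(scores)
    weights = weights / weights.sum(axis=1, keepdims=True)
    return weights @ v                                           # (T, d): one vector per date

rng = np.random.default_rng(0)
d = 8
wq, wk, wv = (rng.normal(size=(d, d)) for _ in range(3))

# Two pixels on different orbits: different dates and numbers of valid dates,
# padded to a common length of 5
doys = [np.array([5, 20, 45, 80, 120]), np.array([10, 30, 90, 0, 0])]
masks = [np.ones(5, dtype=bool), np.array([True, True, True, False, False])]
for doy, mask in zip(doys, masks):
    feats = rng.normal(size=(5, d)) + doy_encoding(doy, d)     # pseudo-spectral features + dates
    print(self_attention(feats, mask, wq, wk, wv).shape)       # (5, 8)
```

The output keeps one vector per input date, which matches the property stated above: the representation has the same temporal dimension as the input series.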

Self-supervised pre-training strategy

Inspired by self-supervised learning techniques developed in natural language processing, we propose to train the model to reconstruct corrupted images of the time series. As shown in the next figure, during pre-training, a decoder is trained to rebuild the corrupted inputs from the latent representation. The way images are corrupted is detailed in the full paper.

The reconstruction loss is computed only on corrupted images. Additionally, to avoid training the model to reconstruct incorrect values, a validity mask is used in the loss: pixels acquired under incorrect conditions are excluded from it. We want to emphasize that the validity mask is only used in the reconstruction loss; therefore, it is not needed for the supervised downstream tasks. Lastly, an important pre-training parameter is the masking rate, i.e., the proportion of corrupted images in the time series. Increasing the number of corrupted images makes the pre-training task harder.
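The loss described above can be sketched as follows: a random subset of dates is corrupted according to the masking rate, and the reconstruction error is averaged only over pixels that belong to a corrupted image and are flagged valid. The way U-BARN actually corrupts images is detailed in the paper; replacing images by Gaussian noise, using a mean squared error, and the function names are assumptions for illustration.

```python
import numpy as np

def corrupt(sits, masking_rate, rng):
    """Corrupt a random subset of the dates of a (T, C, H, W) time series.

    Returns the corrupted copy and a boolean (T,) vector flagging corrupted dates.
    Replacing images by Gaussian noise is a placeholder for the paper's scheme.
    """
    t = sits.shape[0]
    n_corrupt = max(1, int(round(masking_rate * t)))
    corrupted = np.zeros(t, dtype=bool)
    corrupted[rng.choice(t, size=n_corrupt, replace=False)] = True
    out = sits.copy()
    out[corrupted] = rng.normal(size=out[corrupted].shape)
    return out, corrupted

def masked_reconstruction_loss(pred, target, corrupted, valid):
    """Mean squared error restricted to corrupted dates and valid pixels.

    pred, target: (T, C, H, W); corrupted: (T,); valid: (T, H, W) validity mask.
    """
    weight = corrupted[:, None, None] & valid          # (T, H, W)
    err = ((pred - target) ** 2).mean(axis=1)          # per-pixel error, averaged over bands
    n = weight.sum()
    return float((err * weight).sum() / n) if n > 0 else 0.0

rng = np.random.default_rng(0)
sits = rng.random((10, 4, 8, 8))                       # toy series: 10 dates, 4 bands
valid = rng.random((10, 8, 8)) > 0.2                   # ~20% of pixels flagged invalid
noisy, corrupted = corrupt(sits, masking_rate=0.3, rng=rng)
# The decoder should recover the clean values; a perfect reconstruction scores 0
print(masked_reconstruction_loss(sits, sits, corrupted, valid))  # 0.0
```

Since the validity mask only enters `masked_reconstruction_loss`, nothing at inference time depends on it, which is why cloud masks are not needed downstream.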

Experimental setup

Datasets

Three Sentinel 2 L2A datasets made of annual SITS are used:

- A large-scale unlabeled dataset to pre-train U-BARN with the self-supervised learning strategy defined above. This dataset contains data from 2016 to 2019 over 14 S2 tiles in France. The unlabeled dataset is shared on Zenodo: 10.5281/zenodo.7891924.

- Two labeled datasets are used to assess the quality of the pre-training. We perform crop (PASTIS) and land cover (MultiSenGE) segmentation.

Description of the S2 data-sets used for pretext and downstream tasks. The unlabeled data-set for pre-training is composed of two disjoint data-sets: training (tiles in blue) and validation (tiles in red). S2 tiles in the labeled data-sets are shown in green and black respectively for PASTIS and MultiSenGE.

In all three datasets, the Sentinel 2 products are processed to L2A with MAJA. For these data-sets, only the four 10 m and the six 20 m resolution bands of S2 are used.

Experimental setup

The conducted experiments are summarized in the following illustration.

Illustration of the conducted experiments. The loop means that the SITS encoder weights are updated during the downstream task. The red crossed-out loop indicates that the weights are frozen during the downstream task.

In the downstream tasks, the representations provided by U-BARN are fed to a shallow classifier to perform segmentation. The proposed shallow classifier architecture is able to process input with varying temporal sizes. We consider two possible ways to use the pre-trained U-BARN:

– Frozen U-BARN: U-BARN-FR is the pre-trained U-BARN whose weights are frozen during the downstream tasks. In this configuration, the number of trainable parameters is greatly reduced during the downstream task.

– Fine-tuned U-BARN: U-BARN-FT is the pre-trained U-BARN whose weights are used as the starting point for training on the downstream tasks.

To evaluate the quality of the pre-training, we integrate two baselines:

– FC-SC: We feed the shallow classifier (SC) with features from a channel-wise fully connected (FC) layer. Although the FC layer is trained during the downstream task, if the U-BARN representations are meaningful, we expect U-BARN-FR to outperform this configuration.

– U-BARN-e2e: the fully supervised framework, where the model is trained from scratch on the downstream task (end-to-end, e2e). When enough labeled data are provided, we expect U-BARN-e2e to outperform U-BARN-FR. This fully supervised architecture is also compared to another well-known fully supervised spectro-spatio-temporal architecture for SITS: U-TAE.

Results

Results of the two downstream segmentation tasks

Segmentation performance on PASTIS and MultiSenGE. The F1 score is averaged per class.

| Model | Number of trainable weights | F1 PASTIS | F1 MultiSenGE |
|---|---|---|---|
| FC-SC | 14547 | 0.509 | 0.323 |
| U-BARN-FR | 13843 | 0.618 | 0.356 |
| U-BARN-FT | 1122323 | 0.816 | 0.506 |
| U-BARN-e2e | 1122323 | 0.820 | 0.492 |
| U-TAE | 1086969 | 0.803 | 0.426 |

First, as expected, U-BARN-FR outperforms FC-SC, showing that the features extracted by U-BARN are meaningful for both segmentation tasks. Second, on the MultiSenGE land cover segmentation task, the fine-tuned configuration (U-BARN-FT) outperforms the fully supervised one (U-BARN-e2e). Nevertheless, when working on the full PASTIS labeled dataset, in contrast to MultiSenGE, we observe no gain from fine-tuning compared to the fully supervised framework. We assume that PASTIS may contain enough labeled data to train the model from scratch. Third, the results show that the newly proposed architecture is consistent with the existing baseline: the performance of the fully supervised U-BARN is slightly higher than that of U-TAE.

Labeled data scarcity simulation

We conducted a second experiment in which the amount of labeled data on PASTIS is greatly reduced. As expected, as the amount of labeled data decreases, the models' performance drops. However, the drop differs between the pre-trained architecture U-BARN-FT and the two fully supervised architectures U-BARN-e2e and U-TAE: when labeled data are scarce, fine-tuning greatly improves performance. This experiment highlights the benefit of self-supervised pre-training in configurations where labeled data are lacking.

F1 and mIoU as a function of the amount of training data. N_SITS is the number of SITS from the PASTIS labeled dataset used to train the various configurations: the lower N_SITS, the less information is provided to train the downstream task. N_SITS = 150 corresponds to approximately 13% of the labeled dataset.

Supplementary results

Investigation of the masking rate influence, training and inference time as well as detailed segmentation performances are available in the full paper.

Conclusion and perspectives

We have proposed a novel method for learning self-supervised representations of SITS. First, the performance of the proposed architecture is consistent with that of the competitive U-TAE architecture. Moreover, our results show that the pre-training strategy is effective at extracting meaningful representations for both crop and land cover segmentation tasks.

Nevertheless, the proposed method suffers from several limitations:

- The proposed architecture only processes annual SITS.

- The proposed architecture is less computationally efficient than U-TAE, and further research is needed to reduce the number of operations in our architecture.

- The temporal dimension of the learned representation is the same as the input time series. In the case of irregularly sampled time series, the classifier in the downstream task must be able to handle this type of data.

Lastly, future work will focus on producing fixed-dimensional representations of irregular and unaligned SITS. Additionally, we intend to use other downstream tasks and integrate other modalities, such as Sentinel 1 SITS.

Acknowledgements

This work is supported by the DeepChange project under the grant agreement ANR-DeepChange CE23. We would like to thank CNES for the provision of its high performance computing (HPC) infrastructure to run the experiments presented in this paper and the associated help.

-

10:12 How do we use Remote Sensing data at CESBIO?

on Séries temporelles (CESBIO)

Several data access centres are being renovated at CNES and ESA, and their first versions often lack some of the features we need. Together with colleagues from CESBIO, we gave a presentation on the way we use remote sensing (RS) data: here is a text version of it.

Of course, there are as many ways of using the data as there are users, but we can find some recurring patterns among all CESBIO users. What about you? How do you use RS data? Please tell us in the comments. There are certainly other ways of using the data that are just as effective as ours.

What kind of users are we?

CESBIO RS users in front of the lab

At CESBIO, or among the laboratories we work with, we have different types of users:

- Scientists with strong computer science skills, capable of developing their own applications and scaling these processors up to large territories

- Specialist scientists who are not developers but can write scripts, who are only interested in one or a few AOIs, possibly over several years and with multiple sensors, and who need help with scaling up.

- Scientists who are uncomfortable with coding, or who no longer have the time (did you recognize me?), and who prefer already coded tools.

Finally, we rarely work as in the first illustration of this post, and some of us take pride in never looking at the images (but I know they are lying).

Which data?

At CESBIO, we observe vegetation using satellites, and we need a resolution high enough to resolve agricultural plots, but we are also interested in large territories and their evolution. Copernicus data fit our needs, in particular Sentinel-1 and -2, and later Trishna, LSTM, ROSE-L or CHIME will be very useful to us. These are global data, with frequent revisits and good resolution. Their volume is huge, and they are often distributed as granules covering fairly small areas.

Some of us also use lower-resolution global observations, such as SMOS, VIIRS, Sentinel-3 or GRACE: their resolution is generally lower, but their revisit frequency is higher, and the volume remains high.

We also need auxiliary data, such as weather data (analysis, forecasts, atmospheric components), field and validation data…

Land cover maps of France, annually processed at CNES with CESBIO’s support, using a year of Sentinel-2, for THEIA land data center.

How do we use them?

- The data we use often have a global coverage and frequent revisits. We almost never use a single image, we deal with large regions, and often whole years.

- As researchers, we experiment with, modify and improve our processors, which never work the first time. We develop our own processing tools, so the data are processed many times until we are satisfied with the results.

- We sometimes develop interesting processors (yes, we do), and in that case we need to test their scalability on somewhat larger geographical areas.

- Machine learning methods often require the use of randomly distributed patches in different landscapes. In the learning phase, we do not need to use whole images.

Deforested surfaces on the Guyana plateau (left) and in South-East Asia, obtained by processing all Sentinel-1 data acquired since 2017, in the framework of the TropiSCO project.

Data download

It’s true that the trend is to process data close to the source, on remote servers (the Cloud), but downloading is still often necessary, for example when computing resources close to the data are limited or expensive.

Given the quantities of data we use, it is simply impossible to download them by clicking on each product. We therefore make very little use of interactive data search interfaces, which are mainly useful for data discovery. Some distribution centers provide APIs (REST, STAC), suitable for some users, but they take time to understand, to code against and to maintain, as the interfaces change. Providing validated command-line download tools is therefore essential, and often overlooked by data providers. For example, we have provided download tools (Peps_download, Theia_download, Sentinel_download, Landsat_download) for several servers, but we largely underestimated the burden of documentation, maintenance and answering questions, since these tools have been successful. In our opinion, it is up to the distribution centers to provide them, not the users.

Patches from the Sen2VENµS dataset which provides pairs of Sentinel-2 and VENµS data acquired almost simultaneously, to train or validate Sentinel-2 super resolution methods.

Machine learning is often based on small patches randomly selected from the products. To save transfer time, it would therefore be useful for download tools to be able to select the area of interest, dates and spectral bands. For this, storing data in a web-optimized format, such as Cloud Optimized GeoTIFF (COG), would be very useful.
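COG helps because the file is internally split into tiles (plus overviews) that a client can fetch individually with HTTP range requests. The pure-Python sketch below only counts which internal tiles a pixel window touches, to give an idea of the saving for a training patch. The 512-pixel internal tile size is an assumption (it is a common choice, not a requirement), and 10980 pixels is the side of a 10 m Sentinel-2 band.

```python
import math

def tiles_for_window(col_off, row_off, width, height, tile_size=512):
    """(tile_row, tile_col) indices of the internal tiles covering a pixel window."""
    cols = range(col_off // tile_size, (col_off + width - 1) // tile_size + 1)
    rows = range(row_off // tile_size, (row_off + height - 1) // tile_size + 1)
    return [(r, c) for r in rows for c in cols]

IMAGE_SIZE = 10980   # pixels per side of a 10 m Sentinel-2 band
TILE_SIZE = 512      # assumed internal COG tile size
total_tiles = math.ceil(IMAGE_SIZE / TILE_SIZE) ** 2

# A 256 x 256 training patch only touches a handful of internal tiles
patch_tiles = tiles_for_window(col_off=1000, row_off=2000, width=256, height=256)
print(len(patch_tiles), total_tiles)  # 4 484
```

In practice, libraries such as GDAL read only these tiles when a windowed read is requested on a remote COG; here only the tile arithmetic is shown.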

Some of us need to cross-reference databases, for example to track simultaneous acquisitions of different satellites, often stored on different servers, taking into account cloud cover or viewing angles. An API providing access to this information when querying the database is therefore very useful, with as few limitations as possible in terms of performance and number of accesses.
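Once acquisition metadata (date, cloud cover, viewing angle) can be queried, finding near-simultaneous pairs is a simple join. The sketch below uses hypothetical records and thresholds; the field names do not correspond to any particular catalogue API.

```python
from dataclasses import dataclass
from datetime import datetime, timedelta

@dataclass
class Acquisition:
    satellite: str
    time: datetime
    cloud_cover: float   # percent of the scene
    view_zenith: float   # degrees

def usable(acq, max_cloud, max_zenith):
    return acq.cloud_cover <= max_cloud and abs(acq.view_zenith) <= max_zenith

def simultaneous_pairs(a_list, b_list, max_gap=timedelta(minutes=30),
                       max_cloud=10.0, max_zenith=20.0):
    """Pairs (a, b) acquired within max_gap of each other, both clear and not too oblique."""
    a_ok = [a for a in a_list if usable(a, max_cloud, max_zenith)]
    b_ok = [b for b in b_list if usable(b, max_cloud, max_zenith)]
    return [(a, b) for a in a_ok for b in b_ok if abs(a.time - b.time) <= max_gap]

# Hypothetical metadata records (not real acquisition times)
s2 = [Acquisition("S2A", datetime(2020, 8, 8, 10, 59), 2.0, 5.0),
      Acquisition("S2B", datetime(2020, 8, 13, 10, 59), 60.0, 5.0)]
venus = [Acquisition("VENUS", datetime(2020, 8, 8, 10, 40), 1.0, 12.0),
         Acquisition("VENUS", datetime(2020, 8, 13, 10, 45), 0.0, 12.0)]
pairs = simultaneous_pairs(s2, venus)
print([(a.satellite, b.time.date().isoformat()) for a, b in pairs])  # [('S2A', '2020-08-08')]
```

The nested loop is quadratic; for large catalogues, sorting both lists by time and scanning them together would avoid comparing every pair.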

On demand processing

As with downloads, some sites offer on-demand processing, for example running an atmospheric correction or a super-resolution tool. Again, if we use them, it won't be on a single image, but to process large quantities of data. We therefore need to access this processing from the command line, or through a Python API available on the server where the data are located.

Cloud computing

Processing data on the cloud saves download time, as the output of processing is often smaller than the input (for example, a land cover map produced from a year’s worth of Sentinel-2 data). However, this presents a number of difficulties, and we’d like to see the task made easier.

From one cloud to another, the tools for automating processing, starting virtual machines and launching processes may differ. If the data we need are on different clouds, or if we want to move our processing from one cloud to another, we need to learn the APIs specific to each cloud and adapt our code whenever we switch. This is not efficient.

Our work almost always begins with building data cubes, whose dimensions are spatial coordinates, time, spectral bands or any other useful information. The current format of Sentinel-2 data, for example, can be seen as a data cube with a granularity by date. However, it may be practical to build data cubes larger or smaller than the 100 x 100 km² tiles. An API that generates these data cubes on the fly, and lets you apply processing to them, is therefore very attractive. This is the case of the OpenEO library. It's not the only API of its kind, but it's well designed and has the good taste of being free software.
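
To make the idea concrete, here is a sketch of the JSON process graph an openEO back-end receives for a simple cube: load a collection over a bounding box and a time interval, reduce the time dimension with a per-pixel median, and save the result. The process names (`load_collection`, `reduce_dimension`, `median`, `save_result`) are openEO processes; the collection identifier `SENTINEL2_L2A`, the band names and the `GTiff` output format are assumptions that depend on each back-end. The official Python client builds equivalent graphs from calls such as `load_collection` and `reduce_dimension`.

```python
import json
from typing import Sequence, Tuple


def median_composite_graph(collection_id: str, bbox: Tuple[float, float, float, float],
                           start: str, end: str, bands: Sequence[str]) -> dict:
    """openEO process graph: load a cube, take the per-pixel temporal median, save it."""
    west, south, east, north = bbox
    return {
        "process_graph": {
            "load1": {
                "process_id": "load_collection",
                "arguments": {
                    "id": collection_id,
                    "spatial_extent": {"west": west, "south": south,
                                       "east": east, "north": north},
                    "temporal_extent": [start, end],   # end date is exclusive in openEO
                    "bands": list(bands),
                },
            },
            "reduce1": {
                "process_id": "reduce_dimension",
                "arguments": {
                    "data": {"from_node": "load1"},
                    "dimension": "t",                  # usual name of the time dimension
                    "reducer": {"process_graph": {
                        "median1": {
                            "process_id": "median",
                            "arguments": {"data": {"from_parameter": "data"}},
                            "result": True,
                        }
                    }},
                },
            },
            "save1": {
                "process_id": "save_result",
                "arguments": {"data": {"from_node": "reduce1"}, "format": "GTiff"},
                "result": True,
            },
        }
    }


if __name__ == "__main__":
    graph = median_composite_graph("SENTINEL2_L2A", (1.35, 43.55, 1.50, 43.65),
                                   "2023-01-01", "2024-01-01", ["B04", "B08"])
    print(json.dumps(graph, indent=2))
```

The cube extent here is set by the bounding box and the dates, not by the distribution tiles, which is the point made above.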

Access to various clouds through the OpenEO API (from r-spatial blog)

To be able to use data distributed across several cloud servers, OpenEO library needs to be installed on the server side of these clouds. This is how OpenEO came up with the notion of data federation. Datacubes can be generated in parallel on several clouds, with each cloud preparing the part of the datacube for which it owns the data. Participating in this federation therefore also gives visibility to the data available on each cloud.
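
The federation principle, each cloud preparing the part of the cube for which it holds the data, can be illustrated with a toy model: split the requested tiles between back-ends according to what each one holds, and report what nobody holds. This is only an illustration of the idea, not how the openEO federation is implemented; the `holdings` catalog and the back-end names are hypothetical, and the tile names are used as plain labels.

```python
from typing import Dict, Iterable, List, Set, Tuple


def partition_request(requested_tiles: Iterable[str],
                      holdings: Dict[str, Set[str]]) -> Tuple[Dict[str, List[str]], List[str]]:
    """Assign each requested tile to the first back-end that holds it."""
    plan: Dict[str, List[str]] = {name: [] for name in holdings}
    missing: List[str] = []
    for tile in requested_tiles:
        owner = next((name for name, tiles in holdings.items() if tile in tiles), None)
        if owner is None:
            missing.append(tile)      # no federated cloud can serve this part of the cube
        else:
            plan[owner].append(tile)
    # Only keep back-ends that actually have work to do.
    return {name: tiles for name, tiles in plan.items() if tiles}, missing
```

Each back-end would then compute its share in parallel, and the partial cubes would be merged; the `missing` list is where a federation makes gaps in data availability visible.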

We kindly urged CNES to install it, and CNES has added it to the GEODES road map and started a « proof of concept » study :).

Help… Help…

Finding information on all these solutions requires a great deal of research, which should not be the main focus of researchers. What we really need is information, tutorials (but please not video tutorials, in which finding the piece of information you need takes far too long) and announcements that let us anticipate changes and improvements. All this is costly and not always among the priorities.

Conclusions

Our colleagues who are developing the Geodes server at CNES seem to have understood our needs. They are preparing a data catalog, a data server, a Virtual Research Environment, an information site and Python download scripts, and are working hard to deploy OpenEO on our cluster (which apparently requires solving some technical issues). Of course, it takes time, but we should get a lot of improvements compared to PEPS.

The Copernicus dataspace is a bit ahead of us in using all these technologies, but to my knowledge, a good download tool is still missing.

Beta version of Geodes interface and information portal, which will be available in a few weeks.

Thanks!

This post is the result of many discussions with my colleagues, with valuable input from Sylvain Ferrant, Julien Michel, Emmanuelle Sarrazin and Jordi Inglada at CESBIO.

14:59 The use of remote sensing data at CESBIO

on Séries temporelles (CESBIO)

Several data access centers are currently being renewed at CNES and ESA, and their first versions often lack features we would need. With colleagues from CESBIO, we gave a presentation to CNES on the way we use data. Here is a written version of that presentation.

Of course, there are as many ways of using data as there are users, but we can nevertheless find a few recurring patterns among all CESBIO users.

And you, how do you use your data? Feel free to tell us in the comments. There are certainly other ways of working than ours, just as efficient.

Which users?

CESBIO data users in front of the laboratory

At CESBIO, or among our close colleagues, we have different types of users:

- Scientists skilled in computing, able to develop their own applications and to scale up their processing to large territories

- Scientists who are not coding specialists but can write scripts, who are only interested in one or several AOIs (areas of interest), possibly over several years and with several sensors, and who need help to scale up

- Scientists who are not at ease with coding, or who no longer have time for it (do you recognize me?), and who prefer tools with ready-made command lines, or even tools where you just click.

In the end, we rarely work as shown in the illustration at the top of this article, and some of us proudly claim never to look at the images (but I know they are lying).

Which data?

At CESBIO, we observe vegetation from satellites, so we need a fairly high resolution to resolve agricultural plots, but we are also interested in large territories and in their evolution. Copernicus data, especially Sentinel-1 and -2, and later Trishna, LSTM, ROSE-L or CHIME, will be very useful to us. These are global data, with frequent revisit and good resolution. They are therefore very voluminous, and distributed as granules covering fairly small areas.

Some of us use global observations, such as SMOS, VIIRS, Sentinel-3 or GRACE; their resolution is generally coarser, but their revisit frequency is higher, so the volume remains large.

We also need auxiliary data, such as meteorological data (analyses, forecasts, atmospheric components), field data and validation data…

Land cover maps of France, produced annually at CNES with the support of CESBIO, from one year of Sentinel-2 data, on behalf of THEIA.

How do we use these data?

- the data we use often have global coverage and frequent revisit. We almost never use a single image: we process large regions, and often entire years.

- we are researchers: we proceed by trial and error, modifying and improving our processing, which never works the first time. We develop our own processing tools, so the data are processed many times over, until we are satisfied with the results.

- we sometimes develop interesting processing chains (yes, really!), and in that case we need to test how they scale, in order to process somewhat larger geographical areas.

- machine learning methods often require patches randomly distributed across different landscapes. During the training phase, we do not need to use whole images.

- space data are also used for teaching, in the courses and practical sessions of our lecturer-researcher colleagues, or for demonstration purposes, to highlight the application potential of satellites, for example on this blog.
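
The training-phase need described above (random patches rather than whole images) can be sketched as drawing random windows over a set of products. This is a simplified illustration: products are chosen uniformly and positions uniformly within each product, whereas a real training set would also stratify by landscape. The product list format is an assumption; 10980 pixels is the width of a Sentinel-2 tile at 10 m.

```python
import random
from typing import List, Optional, Sequence, Tuple

Product = Tuple[str, int, int]              # (product_id, width_px, height_px)
Window = Tuple[str, int, int, int, int]     # (product_id, col_off, row_off, width, height)


def sample_patches(products: Sequence[Product], n_patches: int, patch_size: int,
                   seed: Optional[int] = None) -> List[Window]:
    """Draw square patch windows at random positions in randomly chosen products."""
    rng = random.Random(seed)                # seeded for reproducible training sets
    eligible = [p for p in products if p[1] >= patch_size and p[2] >= patch_size]
    if not eligible:
        raise ValueError("no product is large enough for the requested patch size")
    windows = []
    for _ in range(n_patches):
        product_id, width, height = rng.choice(eligible)
        col = rng.randrange(0, width - patch_size + 1)    # keep the patch inside the image
        row = rng.randrange(0, height - patch_size + 1)
        windows.append((product_id, col, row, patch_size, patch_size))
    return windows
```

Only these windows need to be transferred, which is where partial reads from web-optimized formats pay off.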

Maps of deforested areas on the Guiana Shield and in Southeast Asia, produced by processing all Sentinel-1 data since 2017, as part of the TropiSCO project.

Downloading data

Admittedly, processing close to the data, on remote servers (the cloud), is in fashion, but downloading often remains necessary, for example when computing resources near the data are limited, or are paid and expensive.

Given the quantities of data we use, downloading data by clicking is simply not an option. We therefore make very little use of interactive data search interfaces, which are only useful to us for discovering data. Some distribution centers provide APIs (REST, STAC), which suit some users, but they require time to understand these interfaces, to code against them and to maintain that code, since the interfaces change. Providing validated download tools that can be used from the command line is therefore very important, and often forgotten by data providers. For example, we have provided download tools (Peps_download, Theia_download, Sentinel_download, Landsat_download), but we largely underestimated the burden of documentation, maintenance and answering questions, as these tools turned out to be successful. In our opinion, it is up to the distribution centers to provide them; it is not the users' role.
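
Part of what makes a download tool "validated" is unglamorous robustness: skipping files already downloaded so an interrupted batch can be resumed, retrying transient network errors, and never leaving a half-written file under its final name. The sketch below shows these three behaviors with the standard library; it is not the code of the tools named above, authentication is omitted, and the function names are illustrative.

```python
import time
import urllib.request
from pathlib import Path
from typing import Callable, Iterable, List, Tuple


def http_get(url: str) -> bytes:
    """Plain HTTP download (no authentication in this sketch)."""
    with urllib.request.urlopen(url, timeout=300) as response:
        return response.read()


def download_all(urls: Iterable[str], dest_dir: str,
                 fetch: Callable[[str], bytes] = http_get,
                 retries: int = 3, backoff: float = 2.0,
                 sleep: Callable[[float], None] = time.sleep) -> Tuple[List[str], List[str]]:
    """Download each URL once, skipping files already present and retrying failures."""
    dest = Path(dest_dir)
    dest.mkdir(parents=True, exist_ok=True)
    done, failed = [], []
    for url in urls:
        target = dest / url.rstrip("/").rsplit("/", 1)[-1]
        if target.exists() and target.stat().st_size > 0:
            done.append(target.name)          # already there: a rerun resumes the batch
            continue
        for attempt in range(retries):
            try:
                data = fetch(url)
            except OSError:                   # network errors (urllib's URLError is an OSError)
                if attempt == retries - 1:
                    failed.append(url)
                else:
                    sleep(backoff * 2 ** attempt)   # exponential backoff between attempts
                continue
            partial = target.parent / (target.name + ".part")
            partial.write_bytes(data)
            partial.replace(target)           # rename only once the file is complete
            done.append(target.name)
            break
    return done, failed
```

The `fetch` and `sleep` parameters are there so the retry logic can be tested without a network; the list of failed URLs is what a user would pass back to the tool for a second run.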

Patches from the Sen2VENµS dataset, which pairs Sentinel-2 and VENµS data acquired on the same day, to train or validate super-resolution methods applied to Sentinel-2.

Machine learning is often performed on small patches randomly selected from the products. To save transfer time, it would therefore be useful for download tools to allow selecting the area of interest, the dates and the spectral bands. For this, storing data in a web-optimized format, such as Cloud Optimized GeoTIFF (COG), would be very helpful.
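
Selecting dates and spectral bands before transferring anything is straightforward when the catalog describes each band as a separate file, as STAC items do through their `assets`. A minimal sketch, assuming STAC-like item dictionaries; the asset keys (`B04`, `B08`) are an assumption, since band naming varies from one catalog to another.

```python
from datetime import datetime
from typing import Iterable, List, Sequence, Tuple


def select_assets(items: Iterable[dict], bands: Sequence[str],
                  start: datetime, end: datetime) -> List[Tuple[str, str, str]]:
    """Return (item_id, band, href) for the requested bands of items within [start, end]."""
    selected = []
    for item in items:
        # STAC stores the acquisition time as an RFC 3339 string, usually ending in "Z".
        acquired = datetime.fromisoformat(item["properties"]["datetime"].replace("Z", "+00:00"))
        if not start <= acquired <= end:
            continue
        for band in bands:
            asset = item.get("assets", {}).get(band)
            if asset is not None:             # skip bands this item does not provide
                selected.append((item["id"], band, asset["href"]))
    return selected
```

Combined with COG storage, each selected `href` can then be read only over the area of interest instead of being downloaded whole.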

Some of us need to cross-reference databases, for example to find simultaneous acquisitions by different satellites, often on different servers, taking into account cloud cover or viewing angles, for instance. An API giving access to this information when querying the database is therefore very useful, with as few limitations as possible in terms of performance and number of requests.

On-demand processing

As with downloads, some sites offer to launch processing on demand, for example an atmospheric correction or a super-resolution tool. Here again, if we use them, it won't be to run them on a single image, but to process large quantities of data. We therefore need to access this processing from the command line, or by running scripts on the server where the data are located.

Computing close to the data

Processing data in the cloud saves download time, since the outputs of processing are often much smaller than the inputs (for example, a land cover map produced from one year of Sentinel-2 data). It does, however, raise many difficulties, and we would like the task to be made easier for us.
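
An order-of-magnitude calculation shows how large the gap between input and output can be for the land cover example. The figures are illustrative assumptions, not measurements: a 110 km tile, 10 bands all resampled to 10 m, 16-bit values, one acquisition every 5 days (73 per year), no compression, and a land cover map stored on one byte per pixel.

```python
def raster_bytes(tile_km: float, pixel_m: float, n_bands: int,
                 bytes_per_value: int, n_dates: int) -> int:
    """Uncompressed size of a stack of square rasters covering one tile."""
    side_px = round(tile_km * 1000 / pixel_m)
    return side_px * side_px * n_bands * bytes_per_value * n_dates


# Illustrative assumptions (see above): 10 bands at 10 m, 16-bit, 73 dates.
year_of_s2 = raster_bytes(110, 10, n_bands=10, bytes_per_value=2, n_dates=73)
land_cover = raster_bytes(110, 10, n_bands=1, bytes_per_value=1, n_dates=1)
print(f"input  ~ {year_of_s2 / 1e9:.0f} GB")
print(f"output ~ {land_cover / 1e6:.0f} MB, ratio {year_of_s2 // land_cover}")
# prints: input  ~ 177 GB
#         output ~ 121 MB, ratio 1460
```

Under these assumptions, the output is about 1,500 times smaller than the input for a single tile; compression and cloud masking change the exact numbers, not the order of magnitude of the argument.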

From one cloud to another, the tools for automating processing, starting virtual machines and launching processes may differ. If the data we need are on different clouds, or if we want to be able to move our processing from one cloud to another, we need to learn the API protocols specific to these clouds, and to adapt our code whenever we switch. This is not efficient.
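
One common way to limit this cost on the user side is to write processing chains against a small interface and confine each cloud's specifics to one adapter. The sketch below illustrates that pattern; `ComputeBackend` and its methods are hypothetical and do not correspond to any real cloud SDK, and `LocalBackend` only records calls instead of starting machines. OpenEO addresses the same problem one level higher, at the data cube level, so that users do not have to write such adapters themselves.

```python
from typing import List, Protocol, Sequence


class ComputeBackend(Protocol):
    """Hypothetical minimal interface each cloud adapter would implement."""
    def start_worker(self, cpus: int) -> str: ...
    def run(self, worker_id: str, command: Sequence[str]) -> int: ...
    def stop_worker(self, worker_id: str) -> None: ...


class LocalBackend:
    """Toy adapter that records calls instead of starting real virtual machines."""
    def __init__(self) -> None:
        self.log: List[tuple] = []

    def start_worker(self, cpus: int) -> str:
        self.log.append(("start", cpus))
        return f"local-{len(self.log)}"

    def run(self, worker_id: str, command: Sequence[str]) -> int:
        self.log.append(("run", worker_id, tuple(command)))
        return 0                              # exit code of the (pretend) command

    def stop_worker(self, worker_id: str) -> None:
        self.log.append(("stop", worker_id))


def run_chain(backend: ComputeBackend, commands: Sequence[Sequence[str]],
              cpus: int = 4) -> List[int]:
    """Run a processing chain on any backend; the worker is always released."""
    worker = backend.start_worker(cpus)
    try:
        return [backend.run(worker, cmd) for cmd in commands]
    finally:
        backend.stop_worker(worker)
```

Moving the chain to another cloud then means writing one new adapter, not rewriting the chain.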

Our work almost always starts with building data cubes, whose dimensions are spatial coordinates, time, spectral bands or other information. The current format of Sentinel-2 data can be seen as a data cube with a granularity by date. However, it can be practical to build data cubes larger or smaller than the 110 x 110 km² data tiles. An API that generates these data cubes on the fly, and allows processing to be applied to them, is therefore very interesting. This is the case of the OpenEO library. It is not the only API of this kind, but it is well designed and has the good taste of being free software.

Access to various clouds through the OpenEO API (adapted from an r-spatial blog post)

To use data distributed across several clouds, OpenEO must be installed server-side on these clouds. OpenEO therefore relies on the notion of data federation. Datacubes can be generated in parallel on several clouds, each cloud preparing the part of the datacube for which it holds the data. For a data distribution center, taking part in this federation therefore also gives visibility to the data it makes available.

We insisted somewhat with CNES for this to be set up, and CNES has added this request to its roadmap and launched a « proof of concept » study.

Help… Help…
